Arize AI vs Maxim: Best Arize AI Alternative for AI Agent Evaluation & Observability in 2025

TL;DR

Arize AI offers enterprise-grade ML observability with strong model monitoring capabilities, but teams building modern AI agents need more than just monitoring. Maxim AI provides a comprehensive end-to-end platform for agent simulation, evaluation, and observability with cross-functional collaboration at its core. While Arize excels at traditional ML monitoring, Maxim delivers faster iteration cycles with integrated experimentation, agent-specific tracing, and no-code evaluation workflows that empower both engineering and product teams.

Introduction

The AI observability landscape has evolved rapidly. While platforms like Arize AI pioneered ML monitoring for traditional machine learning models, the rise of AI agents and large language models (LLMs) demands a fundamentally different approach. Teams shipping production AI agents need more than post-deployment monitoring. They need integrated workflows spanning experimentation, simulation, evaluation, and observability.

Arize AI, founded in 2020, has established itself as a leader in AI observability with strong enterprise adoption. The platform offers both Arize AX (enterprise solution) and Arize Phoenix (open-source option) for tracing and monitoring. However, as AI systems become more agentic and non-deterministic, teams are seeking alternatives that address the complete AI development lifecycle.

Understanding Arize AI

Arize provides unified LLM observability and agent evaluation capabilities with comprehensive tracing tools for AI applications from development to production. The platform excels in several areas:

Core Capabilities

- Model Drift Detection: Continuously monitors feature and model drift across training, validation, and production environments

- LLM Tracing: OpenTelemetry-based instrumentation for tracking application runtime

- Evaluation Framework: LLM-as-a-Judge approach for benchmarking performance

- Data Quality Monitoring: Automated checks for missing, unexpected, or extreme values

- Performance Tracing: Tools to identify and troubleshoot model performance issues
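To make the drift-detection bullet concrete, here is a minimal sketch of one widely used drift statistic, the Population Stability Index (PSI), computed for a single numeric feature. It illustrates the general idea only and is not taken from Arize's implementation; the function name `psi`, the bin count, and the `eps` floor are assumptions chosen for this example.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], production: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between two non-empty samples of one feature.

    Bin edges are derived from the baseline sample; production values that
    fall outside that range are clamped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(production)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

Identical samples give a PSI of zero, and the value grows as the production distribution moves away from the baseline. A common rule of thumb treats values above roughly 0.25 as a significant shift, though the right cutoff depends on the feature.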

Arize uses a "council of judges" approach combining multiple AI models with human-in-the-loop processes for monitoring and evaluation. The platform supports integration with popular frameworks including LlamaIndex, LangChain, Haystack, and DSPy.
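The "council of judges" idea can be sketched as a simple vote across several judges, with low-agreement cases escalated to a human. This is a hypothetical illustration of the pattern, not Arize's API: the `Judge` signature, `council_verdict`, and the `min_agreement` threshold are all assumptions, and in practice each judge would wrap a call to a different model.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical judge signature: (question, answer) -> label such as "pass" or "fail".
Judge = Callable[[str, str], str]


@dataclass
class Verdict:
    label: str                # majority label across the council
    agreement: float          # share of judges that voted for the majority label
    needs_human_review: bool  # True when the council is too divided


def council_verdict(question: str, answer: str, judges: Sequence[Judge],
                    min_agreement: float = 0.75) -> Verdict:
    """Aggregate several judge votes and flag split decisions for a human."""
    if not judges:
        raise ValueError("at least one judge is required")
    votes = Counter(judge(question, answer) for judge in judges)
    label, count = votes.most_common(1)[0]
    agreement = count / len(judges)
    return Verdict(label, agreement, agreement < min_agreement)
```

With three judges voting pass, pass, fail, the majority label is "pass" but the agreement of two thirds falls below the default threshold, so the item is routed to human review.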

Why Teams Seek Arize Alternatives

While Arize offers robust capabilities, modern AI teams face limitations that drive them toward alternatives:

1. Limited Pre-Production Workflows

Arize focuses primarily on post-deployment monitoring. Teams need integrated environments for prompt engineering, agent simulation, and pre-release testing. The platform lacks comprehensive experimentation tools that enable rapid iteration before production deployment.

2. Engineering-Heavy Configuration

Most Arize workflows require significant engineering effort. Product managers and QA teams struggle to run evaluations or analyze agent behavior without deep technical knowledge, creating bottlenecks in cross-functional collaboration.

3. Narrow Agent-Specific Features

Traditional ML monitoring does not fully cover what agent evaluation demands: multi-turn conversation tracking, tool call analysis, and visibility into reasoning paths.

4. Complex Data Curation

Creating high-quality evaluation datasets from production logs requires manual processes. Teams need streamlined workflows that automatically curate and enrich datasets for continuous improvement.

Maxim AI: A Comprehensive Alternative

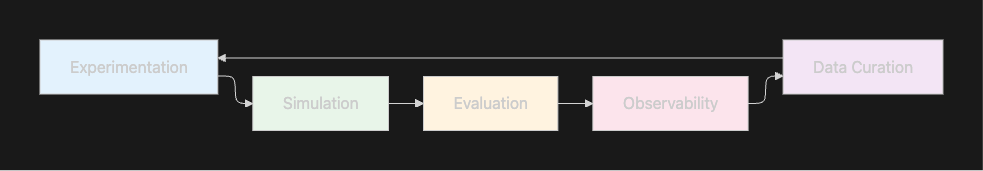

Maxim AI takes an end-to-end approach to AI quality, integrating experimentation, simulation, evaluation, and observability into a unified platform. Unlike monitoring-focused solutions, Maxim offers collaborative, full-lifecycle tooling that, by its own estimate, helps teams build reliable AI agents 5x faster.

Complete AI Development Lifecycle

1. Experimentation

Maxim's Playground++ enables rapid prompt engineering with:

- Version control for prompts directly from the UI

- Side-by-side comparison of outputs across different models and parameters

- Seamless database and RAG pipeline integration

- Cost and latency analysis for informed decision-making
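As a rough illustration of the cost and latency comparison, the sketch below aggregates a handful of logged runs per model. Everything here is hypothetical: the `Run` record, the model names, and the per-1,000-token prices are made up for the example and do not reflect Maxim's data model or any vendor's real pricing.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Dict, List, Tuple


@dataclass
class Run:
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float


# Hypothetical price table: model -> (input price, output price) per 1,000 tokens.
Prices = Dict[str, Tuple[float, float]]


def run_cost(run: Run, prices: Prices) -> float:
    """Cost of one run from its token counts and the per-1,000-token prices."""
    input_price, output_price = prices[run.model]
    return (run.prompt_tokens / 1000 * input_price
            + run.completion_tokens / 1000 * output_price)


def compare_models(runs: List[Run], prices: Prices) -> Dict[str, Dict[str, float]]:
    """Group runs by model and report mean cost and median latency for each."""
    by_model: Dict[str, List[Run]] = {}
    for run in runs:
        by_model.setdefault(run.model, []).append(run)
    return {
        model: {
            "mean_cost": mean([run_cost(r, prices) for r in group]),
            "median_latency_ms": median([r.latency_ms for r in group]),
        }
        for model, group in by_model.items()
    }
```

The median is used for latency because a few slow outliers would otherwise dominate a small sample.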

2. Agent Simulation

AI-powered simulations test agents across hundreds of scenarios:

- Simulate customer interactions with diverse user personas

- Evaluate conversational trajectories and task completion

- Re-run simulations from any step for root cause analysis

- Identify failure patterns before production deployment
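The persona-by-scenario idea behind agent simulation can be sketched with a small harness. This is a toy under stated assumptions, not Maxim's simulation engine: the user turns are scripted rather than AI-generated, and `run_simulation`, `run_grid`, `toy_agent`, and the `done_phrase` completion check are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List

# Hypothetical agent signature: transcript so far -> next agent reply.
Agent = Callable[[List[str]], str]


@dataclass
class SimulationResult:
    persona: str
    scenario: str
    transcript: List[str]
    completed: bool  # True if the agent produced the completion phrase


def run_simulation(agent: Agent, persona: str, scenario: str,
                   user_turns: List[str], done_phrase: str) -> SimulationResult:
    """Play scripted user turns against the agent and record the trajectory."""
    transcript: List[str] = []
    for turn in user_turns:
        transcript.append(f"user ({persona}): {turn}")
        reply = agent(transcript)
        transcript.append(f"agent: {reply}")
        if done_phrase in reply:
            return SimulationResult(persona, scenario, transcript, True)
    return SimulationResult(persona, scenario, transcript, False)


def run_grid(agent: Agent, personas: List[str],
             scenarios: Dict[str, List[str]], done_phrase: str) -> List[SimulationResult]:
    """Run every persona against every scenario (name -> scripted user turns)."""
    return [
        run_simulation(agent, persona, name, turns, done_phrase)
        for persona, (name, turns) in product(personas, scenarios.items())
    ]


def toy_agent(transcript: List[str]) -> str:
    """Stand-in agent: issues a refund whenever the last user turn mentions one."""
    if "refund" in transcript[-1].lower():
        return "Refund issued."
    return "Could you tell me more?"
```

Because each result keeps the full transcript, a failed run can be inspected turn by turn, which is the starting point for the kind of root cause analysis described above.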

3. Unified Evaluation Framework

Maxim combines machine and human evaluations:

- Pre-built evaluators for common issues (hallucination, toxicity, relevance)

- Custom evaluators (AI, programmatic, statistical)

- Configurable at session, trace, or span level

- Visual comparison across prompt versions and model configurations
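To show what the custom evaluator categories can look like in practice, here is a minimal sketch of one programmatic check and one statistical metric. Both are generic examples written for this article and are not Maxim's SDK; `json_keys_evaluator` and `token_f1` are hypothetical names.

```python
import json
from collections import Counter
from typing import Callable, Sequence


def json_keys_evaluator(required_keys: Sequence[str]) -> Callable[[str], float]:
    """Programmatic evaluator: share of required keys present in a JSON object output."""
    def evaluate(output: str) -> float:
        try:
            payload = json.loads(output)
        except json.JSONDecodeError:
            return 0.0  # output is not valid JSON at all
        if not isinstance(payload, dict) or not required_keys:
            return 0.0
        present = sum(1 for key in required_keys if key in payload)
        return present / len(required_keys)
    return evaluate


def token_f1(output: str, reference: str) -> float:
    """Statistical evaluator: token-level F1 overlap between output and reference."""
    out_tokens = output.lower().split()
    ref_tokens = reference.lower().split()
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((Counter(out_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(out_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

Both evaluators return a score between 0 and 1, which makes them easy to threshold or to chart next to AI-based evaluators across prompt versions.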

4. Production Observability

Real-time monitoring with agent-specific tracing:

- Distributed tracing for multi-agent workflows

- Automated online evaluations for quality assurance

- Custom dashboards across business-relevant dimensions

- Threshold-based alerts routed to Slack, PagerDuty, or OpsGenie
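A threshold-based alert on an online evaluation score can be sketched as a rolling mean with a notification callback. This is an illustrative pattern only: `ThresholdAlert` is a hypothetical class, and the `notify` callable stands in for whatever Slack, PagerDuty, or OpsGenie integration a real deployment would use.

```python
from collections import deque
from typing import Callable, Deque


class ThresholdAlert:
    """Fire a notification when the rolling mean of a score drops below a threshold."""

    def __init__(self, threshold: float, window: int,
                 notify: Callable[[str], None]) -> None:
        self.threshold = threshold
        self.scores: Deque[float] = deque(maxlen=window)
        self.notify = notify  # stand-in for a real alerting integration
        self.firing = False

    def record(self, score: float) -> None:
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return  # wait until the window is full before judging
        rolling_mean = sum(self.scores) / len(self.scores)
        breached = rolling_mean < self.threshold
        if breached and not self.firing:
            # Notify only on the transition into the breached state.
            self.notify(
                f"rolling mean {rolling_mean:.3f} fell below threshold {self.threshold}"
            )
        self.firing = breached
```

Notifying only on the transition into the breached state avoids paging on every subsequent low score while the incident is still open.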

5. Data Engine

Seamless dataset management:

- Automatic curation from production logs

- Human-in-the-loop enrichment workflows

- Multi-modal dataset support (text, images, audio)

- Synthetic data generation for testing
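Curating an evaluation dataset from production logs can be sketched as filtering low-scoring traces, de-duplicating them, and leaving the expected answer blank for a human reviewer. The record shapes (`LogRecord`, `DatasetRow`), the `curate_failures` function, and the `max_score` cutoff are assumptions made for this example, not Maxim's schema.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class LogRecord:
    trace_id: str
    user_input: str
    agent_output: str
    eval_score: float  # score from an online evaluator, between 0 and 1


@dataclass
class DatasetRow:
    user_input: str
    observed_output: str
    expected_output: Optional[str]  # left empty for a human reviewer to fill in
    source_trace_id: str


def curate_failures(logs: Iterable[LogRecord], max_score: float = 0.5) -> List[DatasetRow]:
    """Turn low-scoring production traces into de-duplicated dataset rows."""
    seen_inputs = set()
    rows: List[DatasetRow] = []
    for record in logs:
        key = record.user_input.strip().lower()
        if record.eval_score > max_score or key in seen_inputs:
            continue  # skip passing traces and repeated inputs
        seen_inputs.add(key)
        rows.append(DatasetRow(record.user_input, record.agent_output,
                               None, record.trace_id))
    return rows
```

Keeping `source_trace_id` on each row lets a reviewer jump back to the original trace when writing the expected output.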

Feature Comparison

| Feature | Arize AI | Maxim AI |
|---|---|---|
| Pre-Production Testing | Limited | Comprehensive simulation & experimentation |
| No-Code Evaluations | Engineering-heavy | Full UI-based workflows for product teams |
| Agent-Specific Tracing | General LLM tracing | Purpose-built for multi-agent systems |
| Custom Dashboards | Standard views | Flexible, dimension-based custom insights |
| Data Curation | Manual processes | Automated with human-in-the-loop |
| Prompt Management | Basic | Version control with A/B testing |
| Human Evaluations | Limited | Integrated workflow with review queues |
| Cross-Functional Collaboration | Developer-focused | Built for engineering + product + QA teams |
| OpenTelemetry Support | Yes | Yes (with forwarding to external platforms) |
| SDK Languages | Python, TypeScript | Python, TypeScript, Java, Go |

Key Differentiators

1. Cross-Functional Collaboration

While Arize caters primarily to ML engineers, Maxim's UX is designed for seamless collaboration between engineering, product, and QA teams. Product managers can define, run, and analyze evaluations independently without code, eliminating engineering bottlenecks.

2. Full-Stack Agent Support

Maxim addresses the complete AI agent lifecycle, from experimentation to production monitoring. Teams don't need separate tools for different stages, reducing context switching and integration complexity.

3. Flexible Evaluation Architecture

Maxim's evaluation framework supports granular configuration at any level:

- Session-level evaluations for overall conversation quality

- Trace-level metrics for individual agent interactions

- Span-level checks for specific model outputs or tool calls

This flexibility enables precise quality measurement for complex multi-agent systems.
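The three levels can be pictured as a nested data structure with a score defined at each layer. The sketch below is a simplified model for illustration, not Maxim's tracing schema: the `Span`, `Trace`, and `Session` classes and the scoring rules are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Span:
    name: str
    kind: str            # for example "llm" or "tool"
    output: str
    error: bool = False


@dataclass
class Trace:
    spans: List[Span]    # one agent interaction made up of several steps


@dataclass
class Session:
    traces: List[Trace]  # one multi-turn conversation


def span_ok(span: Span) -> bool:
    """Span-level check: the step did not error and produced some output."""
    return not span.error and bool(span.output.strip())


def trace_score(trace: Trace) -> float:
    """Trace-level metric: share of spans that passed the span-level check."""
    if not trace.spans:
        return 0.0
    return sum(span_ok(s) for s in trace.spans) / len(trace.spans)


def session_score(session: Session) -> float:
    """Session-level metric: mean trace score across the conversation."""
    if not session.traces:
        return 0.0
    return sum(trace_score(t) for t in session.traces) / len(session.traces)
```

A failing tool call lowers the score of its own trace and, through it, the session score, so the same failure is visible at whichever level a team chooses to monitor.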

4. Production-Driven Improvement

Maxim creates a closed-loop system where production insights directly fuel agent improvement. Failed traces automatically populate evaluation datasets, experimental changes deploy with observability intact, and human feedback continuously refines automated evaluators.

5. Enterprise-Ready Security

Both platforms offer robust security. Maxim's enterprise features include:

- In-VPC deployment options

- Custom SSO integration

- SOC 2 Type 2 compliance

- Role-based access controls

- Custom log retention policies

Real-World Impact

Teams that move from Arize to Maxim report improvements in three areas:

Faster Iteration Cycles: Integrated experimentation and evaluation remove the need to switch between tools, which by Maxim's estimate lets teams iterate on agent quality 5x faster.

Broader Team Participation: No-code evaluation workflows empower product and QA teams to contribute to quality assurance without engineering dependencies, accelerating release cycles.

Improved Agent Reliability: Pre-production simulation catches edge cases before deployment, while continuous online evaluation maintains quality at scale. Read how Thoughtful improved their AI reliability with Maxim.

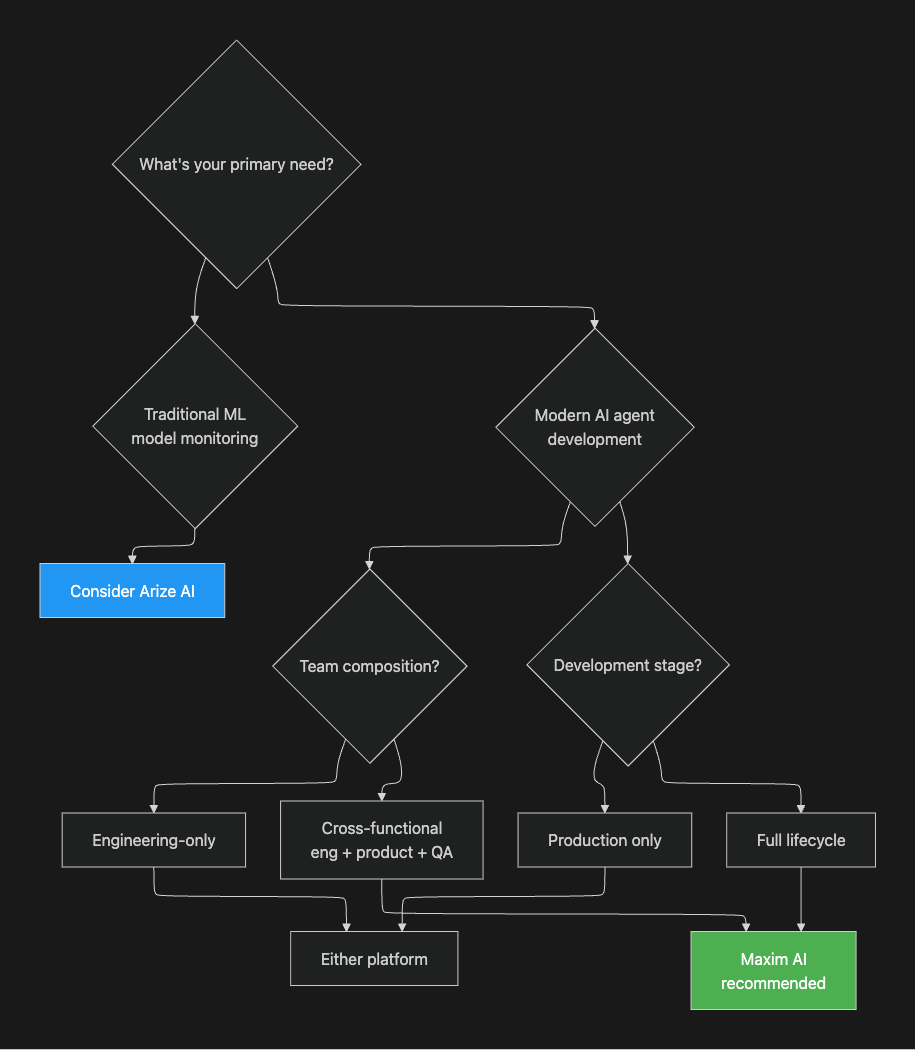

Decision Framework

Choose Arize if:

- You primarily work with traditional ML models requiring drift detection

- Your focus is exclusively post-deployment monitoring

- Your team consists only of ML engineers

Choose Maxim if:

- You're building modern AI agents with LLMs

- You need integrated workflows from experimentation to production

- Cross-functional teams need to collaborate on AI quality

- You want to simulate and evaluate agents before deployment

- You need flexible, granular evaluation capabilities

Getting Started with Maxim

Maxim offers a straightforward onboarding process:

- Sign Up: Start with a 14-day free trial requiring no credit card

- Integrate: Use SDKs in Python, TypeScript, Java, or Go for quick setup

- Experiment: Test prompts and models in the Playground++

- Simulate: Run agent simulations across diverse scenarios

- Evaluate: Configure evaluators through the UI or SDK

- Deploy: Enable production observability with one line of code

- Iterate: Use production insights to continuously improve agents

For teams with custom requirements, Maxim's enterprise plan includes dedicated customer success management, in-VPC deployments, and custom SSO integration.

Conclusion

The AI observability landscape demands more than traditional ML monitoring. As teams shift from predictive models to autonomous agents, they need platforms that support the complete development lifecycle with cross-functional collaboration built in.

Arize AI offers strong capabilities for traditional ML observability, particularly for teams focused on model drift detection and post-deployment monitoring. However, organizations building modern AI agents benefit from Maxim's comprehensive approach spanning experimentation, simulation, evaluation, and observability.

Maxim's unified platform eliminates tool sprawl, accelerates iteration cycles through no-code workflows, and empowers cross-functional teams to ship reliable AI agents with confidence. For teams serious about agent quality and velocity, Maxim represents the evolution beyond monitoring-only solutions.

Ready to experience the difference? Start your free trial or compare Maxim with Arize in detail to see how the platforms stack up for your specific needs.