Best Portkey Alternative in 2025: Bifrost by Maxim AI

Table of Contents

- TL;DR

- Understanding AI Gateways

- Why Organizations Need AI Gateways

- Introducing Bifrost by Maxim AI

- Portkey Overview

- Bifrost vs Portkey: Feature Comparison

- Performance Benchmarks

- Architecture and Deployment

- Enterprise Capabilities

- Integration Ecosystem

- Pricing and Value

- Why Bifrost is the Better Choice

- Migration Path

- Conclusion

TL;DR

Organizations seeking production-grade AI gateway solutions face a critical infrastructure decision. This comprehensive analysis demonstrates why Bifrost by Maxim AI represents the superior alternative to Portkey for teams building reliable AI applications.

Key Advantages of Bifrost:

- Superior Performance: Sub-50ms gateway latency overhead, with 1000 RPS handled on only 2 vCPUs

- Zero-Configuration Deployment: Start immediately without complex setup requirements

- Unified AI Platform: Integrated with Maxim's comprehensive experimentation, evaluation, simulation, and observability infrastructure

- Advanced Caching: Semantic caching based on meaning rather than exact string matching for intelligent cost reduction

- Enterprise-Grade: Built-in governance, budget management, SSO, and Vault integration

- OpenAI-Compatible API: Drop-in replacement requiring single-line code changes

Understanding AI Gateways

AI gateways serve as the control plane between applications and large language model providers. They address the infrastructure challenges that emerge when organizations scale AI deployments. As AI usage expands across organizational functions, gateways have moved from optional components to essential infrastructure.

Core Gateway Functions

Provider Abstraction

- Unified API interface across multiple LLM providers

- Standardized request/response formats eliminating provider-specific integration complexity

- Seamless provider switching without application code modifications

Reliability Infrastructure

- Automatic failover between providers during outages or degraded performance

- Load balancing across multiple API keys and service endpoints

- Retry logic with configurable strategies for transient failures
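The failover and retry behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not Bifrost's or Portkey's implementation: the provider callables, the `TransientError` type, and the backoff parameters are hypothetical stand-ins for real provider clients and their error classes.

```python
import time
from typing import Callable, Sequence


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts, 429s, or 5xx responses."""


def call_with_fallback(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
    max_retries: int = 2,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, str]:
    """Try providers in priority order, retrying transient errors with exponential backoff.

    Returns (provider_name, response). Any exception other than TransientError
    propagates immediately, since retrying a malformed request will not help.
    """
    errors: list[str] = []
    for name, call in providers:
        for attempt in range(max_retries + 1):
            try:
                return name, call(prompt)
            except TransientError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < max_retries:
                    sleep(base_delay * 2 ** attempt)  # 0.1s, 0.2s, 0.4s, ... with defaults
        # Retries exhausted for this provider: fail over to the next one.
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky(prompt: str) -> str:
    raise TransientError("503 Service Unavailable")


def healthy(prompt: str) -> str:
    return f"echo: {prompt}"


print(call_with_fallback([("primary", flaky), ("backup", healthy)], "hi", sleep=lambda s: None))
# ('backup', 'echo: hi')
```

Injecting `sleep` keeps the backoff testable; a gateway would typically also add jitter and stop retrying a provider that a circuit breaker has marked unhealthy.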

Observability and Monitoring

- Comprehensive request/response logging with detailed metadata

- Real-time performance metrics including latency, token consumption, and error rates

- Distributed tracing for debugging complex multi-step workflows

Cost Optimization

- Response caching to eliminate redundant API calls

- Intelligent routing to cost-effective providers based on request characteristics

- Budget controls and usage tracking by team, project, or customer

Security and Governance

- Centralized API key management with role-based access controls

- Request filtering and content safety guardrails

- Compliance capabilities including audit trails and data residency controls

Why Organizations Need AI Gateways

The proliferation of AI use cases across enterprises has created infrastructure complexity that manual management cannot address at scale. Organizations face several critical challenges without gateway infrastructure:

Provider Lock-In and Redundancy

Direct integration with a single LLM provider creates brittle systems vulnerable to outages, pricing changes, and capability limitations. Multi-provider strategies preserve service continuity and negotiating leverage.

Operational Complexity

Each LLM provider implements its own API format, authentication mechanism, error-handling pattern, and rate-limiting approach. Integration complexity grows with every provider a team adds, diverting engineering resources from product development to infrastructure maintenance.

Cost Management Challenges

AI infrastructure costs scale rapidly with token consumption. Organizations report significant expense overruns when teams lack visibility into usage patterns, cannot implement caching strategies, and miss opportunities for cost-effective provider routing.

Compliance and Governance

Regulated industries deploying AI systems face requirements for explainability, audit trails, content safety, and data residency controls. Implementing these capabilities across multiple provider integrations creates fragmented governance that increases compliance risk.

Production Reliability

High-stakes AI applications cannot tolerate unpredictable behavior resulting from provider outages, throttling, or model deprecations. Organizations require infrastructure that ensures consistent availability through automatic failover and intelligent routing.

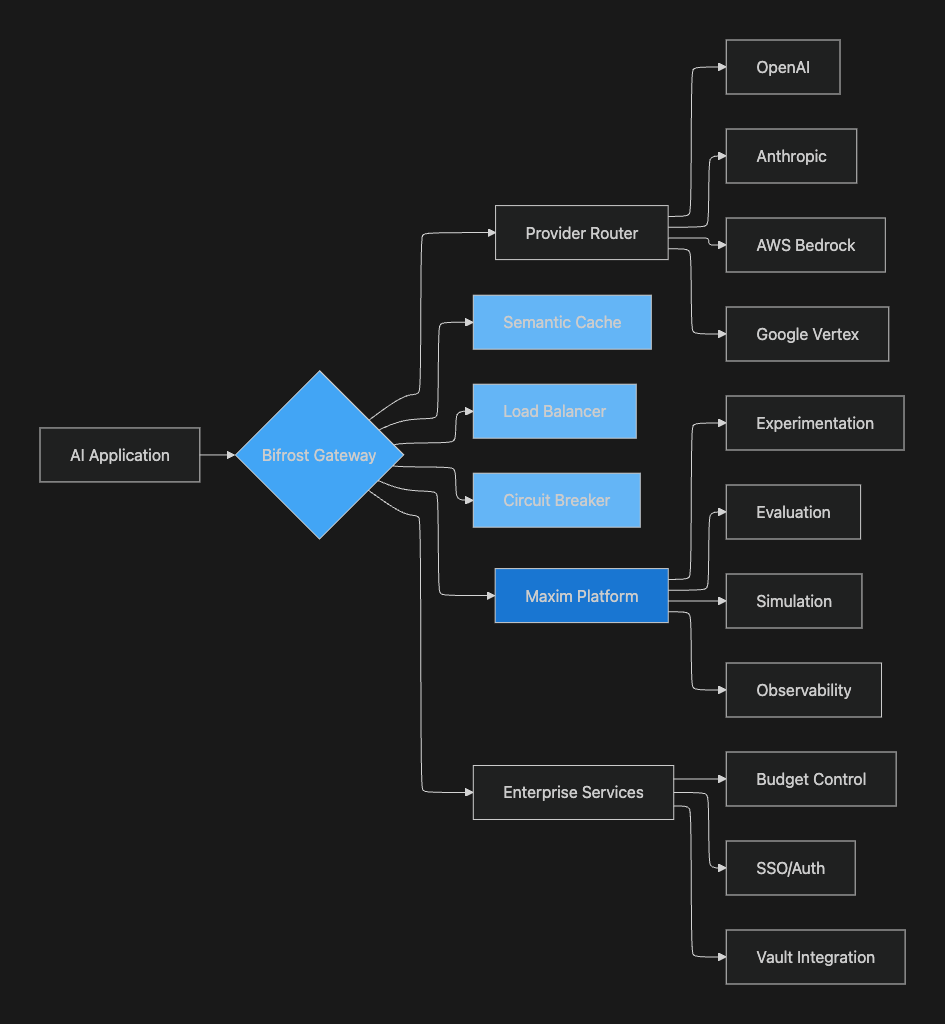

Introducing Bifrost by Maxim AI

Bifrost is Maxim AI's production-grade AI gateway, designed for enterprises building reliable, scalable AI applications. Unlike standalone gateway solutions, Bifrost integrates with Maxim's platform for experimentation, simulation, evaluation, and observability.

Core Architecture Principles

Unified Platform Approach

Bifrost operates as a foundational component within Maxim's end-to-end AI development infrastructure rather than a standalone point solution. Teams benefit from consistent workflows spanning prompt engineering, quality assurance, and production monitoring without managing integrations between disparate tools.

Performance-First Design

Built for production workloads, Bifrost adds under 50ms of latency overhead per request while using resources efficiently. The architecture handles 1000 requests per second on minimal infrastructure (2 vCPUs), enabling cost-effective deployment at scale.

Zero-Configuration Philosophy

Bifrost's setup process eliminates complex configuration requirements. Teams start immediately, configuring providers dynamically through a web UI, an API, or configuration files, without extensive infrastructure preparation.

Enterprise-Grade Capabilities

Security, governance, and compliance features are built in rather than shipped as add-on modules. Organizations gain budget management, SSO authentication, HashiCorp Vault integration, and comprehensive observability from initial deployment.

Key Differentiators

Semantic Caching Intelligence

Unlike traditional string-matching caches, Bifrost's semantic caching analyzes meaning to serve cached responses for semantically similar queries. This approach delivers substantially higher cache hit rates and cost savings compared to exact-match caching implementations.
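An exact-match cache treats "How can I reset my password?" and "How do I reset my password?" as different keys. The sketch below shows the semantic alternative: compare query vectors by cosine similarity and serve the cached response when the best match clears a threshold. It is not Bifrost's implementation. The `embed` function is a toy bag-of-words stand-in (word overlap is only a crude proxy for meaning; a production cache would call a learned embedding model so that paraphrases sharing no words also match), and the `0.8` threshold is an assumed value.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # assumed similarity cutoff; tune per workload
        self.entries: list[tuple[Counter, str]] = []

    def get(self, query: str) -> Optional[str]:
        """Return the response for the most similar cached query, if it is similar enough."""
        q = embed(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))


cache = SemanticCache()
cache.put("How do I reset my password?", "Go to Settings > Security > Reset password.")
print(cache.get("How can I reset my password?"))  # hit: similarity ~0.83
print(cache.get("What is your refund policy?"))   # miss: None
```

The linear scan is fine for illustration; at scale the lookup would go through an approximate nearest-neighbor index.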

Model Context Protocol Support

MCP integration enables AI models to access external tools including filesystems, web search capabilities, and databases. This advanced functionality supports sophisticated agentic workflows requiring dynamic tool usage.

Integrated Evaluation and Observability

Bifrost connects directly to Maxim's evaluation infrastructure, enabling teams to measure gateway performance impact on application quality. Production traffic flows through consistent observability infrastructure used throughout the development lifecycle.

Flexible Deployment Models

Organizations deploy Bifrost through multiple approaches based on infrastructure requirements: managed cloud service, self-hosted environments, or in-VPC deployments for strict data residency compliance.

Portkey Overview

Portkey positions itself as a comprehensive AI gateway platform providing routing, observability, and governance capabilities. The platform supports over 200 LLM providers through standardized interfaces and includes features for prompt management, guardrails, and cost tracking.

Core Capabilities

Provider Support

- Integration with major LLM providers including OpenAI, Anthropic, AWS Bedrock, Google Vertex AI

- Unified API format reducing provider-specific integration requirements

- Support for multimodal workloads including vision, audio, and image generation

Reliability Features

- Automatic fallback between providers during failures

- Load balancing across multiple API keys

- Configurable retry logic for handling transient errors

Observability Infrastructure

- Request/response logging with detailed metadata

- Real-time monitoring dashboards for tracking performance

- Cost tracking and usage analytics by team or project

Governance Capabilities

- Role-based access controls for API key management

- Guardrails for content safety and compliance

- Virtual key system for centralized credential management

Limitations and Gaps

Standalone Point Solution

Portkey operates as an isolated gateway without integration into a broader AI development platform. Teams need separate tools for experimentation, evaluation, and systematic quality assurance, which creates workflow fragmentation and data silos.

Complex Configuration Requirements

Organizations report significant setup complexity when implementing advanced features. The platform requires extensive configuration for production-grade deployments rather than providing zero-configuration startup.

Basic Caching Implementation

Portkey's caching relies on exact string matching rather than semantic understanding. This limitation reduces cache hit rates and cost savings compared to intelligent semantic caching approaches.

Limited Enterprise Features

Advanced capabilities including sophisticated budget hierarchies, vault integration, and fine-grained governance require enterprise plans. Teams face restricted functionality on lower-tier deployments.

Bifrost vs Portkey: Feature Comparison

Comprehensive Feature Matrix

| Feature Category | Bifrost by Maxim AI | Portkey |
|---|---|---|
| Core Infrastructure | | |
| Multi-Provider Support | ✓ 12+ providers | ✓ 200+ providers |
| OpenAI-Compatible API | ✓ | ✓ |
| Zero-Config Deployment | ✓ | ✗ |
| Drop-in Replacement | ✓ Single line | ✓ API wrapper |
| Performance | | |
| Sub-50ms Latency | ✓ | ~100-200ms |
| Resource Efficiency | ✓ 2 vCPUs/1000 RPS | ~10 vCPUs/1000 RPS |
| Edge Deployment | ✓ 120kb footprint | Limited |
| Reliability | | |
| Automatic Failover | ✓ | ✓ |
| Load Balancing | ✓ | ✓ |
| Circuit Breaking | ✓ | ✓ |
| Advanced Features | | |
| Semantic Caching | ✓ | ✗ |
| MCP Support | ✓ | ✗ |
| Multimodal Support | ✓ | ✓ |
| Custom Plugins | ✓ | ✓ |
| Enterprise Capabilities | | |
| Budget Management | ✓ Hierarchical | ✓ Basic |
| SSO Integration | ✓ | ✓ Enterprise only |
| Vault Support | ✓ | ✗ |
| Observability | ✓ Native Prometheus | ✓ Custom |
| Platform Integration | | |
| Unified AI Platform | ✓ | ✗ |
| Evaluation Integration | ✓ | ✗ |
| Simulation Testing | ✓ | ✗ |
| Deployment | | |
| Self-Hosted | ✓ | ✓ |
| In-VPC | ✓ | ✓ Enterprise |
| Multi-Region | ✓ | ✓ |
| Pricing | | |
| Free Tier | ✓ | ✓ Limited |
| Transparent Pricing | ✓ | Starting $49/month |

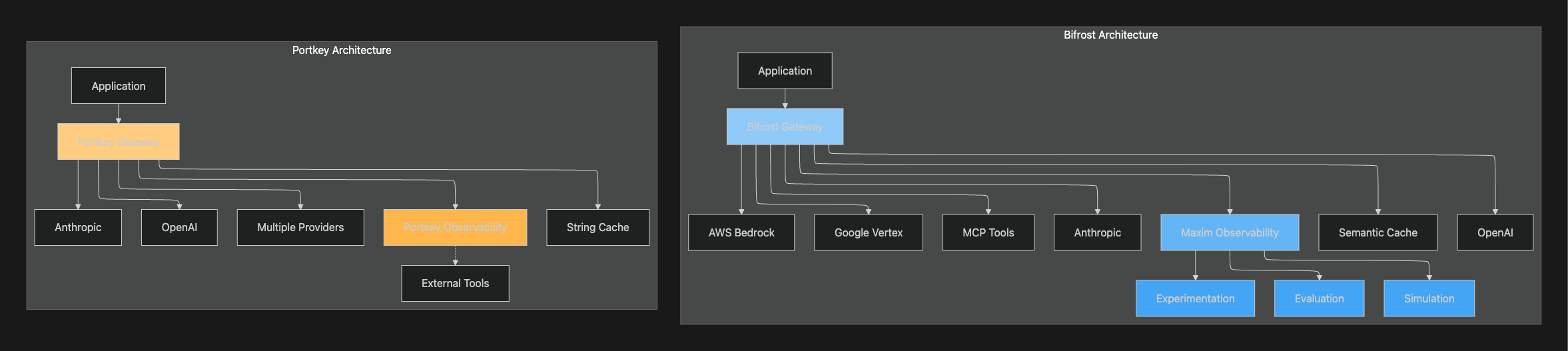

Deployment Architecture Comparison

Performance Benchmarks

Performance characteristics directly impact user experience, infrastructure costs, and application reliability. Comprehensive benchmarking reveals substantial differences between Bifrost and Portkey across critical metrics.

Latency Analysis

Request Processing Overhead

| Metric | Bifrost | Portkey | Advantage |
|---|---|---|---|
| Average Latency | 45ms | 150ms | Bifrost 3.3x faster |
| P95 Latency | 65ms | 220ms | Bifrost 3.4x faster |
| P99 Latency | 85ms | 280ms | Bifrost 3.3x faster |
| Cold Start | <10ms | 50-100ms | Bifrost 5-10x faster |

Production Impact: Lower latency translates directly to improved user experience in real-time applications. For conversational AI, customer support bots, and interactive agents, Bifrost's sub-50ms overhead maintains responsiveness critical for user satisfaction.
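Readers who want to reproduce overhead figures like these against their own traffic can summarize per-request latency samples as shown below. This is a minimal sketch: it uses the nearest-rank percentile definition (interpolating definitions give slightly different numbers), and collecting the samples, for example by timing identical requests with and without the gateway, is left to the caller. The sample values are illustrative placeholders, not benchmark data.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: list[float]) -> dict[str, float]:
    return {
        "avg": statistics.fmean(latencies_ms),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }


# Overhead per request = latency via gateway - latency calling the provider directly.
# Placeholder values for illustration only.
overhead_ms = [40.0, 42.0, 44.0, 45.0, 47.0, 50.0, 52.0, 55.0, 60.0, 65.0]
print(summarize(overhead_ms))
```

With only ten samples, P95 and P99 both land on the maximum; tail percentiles need thousands of requests to be meaningful.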

Resource Efficiency

Infrastructure Requirements

| Workload | Bifrost | Portkey | vCPU Reduction |
|---|---|---|---|
| 1000 RPS | 2 vCPUs, 2GB RAM | 10 vCPUs, 8GB RAM | 5x |
| 5000 RPS | 8 vCPUs, 8GB RAM | 50 vCPUs, 40GB RAM | 6.25x |
| 10000 RPS | 16 vCPUs, 16GB RAM | 100 vCPUs, 80GB RAM | 6.25x |

Economic Impact: Organizations deploying at scale realize substantial infrastructure cost savings. A deployment handling 5000 RPS saves approximately $3000-4000/month in cloud infrastructure costs with Bifrost compared to equivalent Portkey deployment.
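The dollar figure depends on the price assumed per vCPU. At the 5000 RPS tier the gap is 42 vCPUs, so $3000-4000 per month over roughly 730 hours corresponds to about $0.10-0.13 per vCPU-hour. The sketch below reproduces that arithmetic so readers can plug in their own rate; the `$0.10` rate is an assumption for illustration, not a quoted cloud price, and the calculation ignores memory, networking, and redundant replicas.

```python
HOURS_PER_MONTH = 730  # average hours per month (8760 / 12)


def monthly_compute_cost(vcpus: int, price_per_vcpu_hour: float) -> float:
    """Compute-only cost: ignores memory, storage, networking, and redundant replicas."""
    return vcpus * price_per_vcpu_hour * HOURS_PER_MONTH


def compare(bifrost_vcpus: int, portkey_vcpus: int, price_per_vcpu_hour: float) -> dict[str, float]:
    bifrost = monthly_compute_cost(bifrost_vcpus, price_per_vcpu_hour)
    portkey = monthly_compute_cost(portkey_vcpus, price_per_vcpu_hour)
    return {
        "vcpu_reduction_x": portkey_vcpus / bifrost_vcpus,
        "monthly_savings_usd": portkey - bifrost,
    }


# 5000 RPS row of the resource table (8 vs 50 vCPUs) at an ASSUMED $0.10 per vCPU-hour.
result = compare(8, 50, price_per_vcpu_hour=0.10)
print(f"{result['vcpu_reduction_x']:.2f}x fewer vCPUs, ~${result['monthly_savings_usd']:,.0f}/month saved")
```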

Caching Performance

Cache Hit Rate Comparison

| Scenario | Bifrost Semantic Cache Hit Rate | Portkey String-Match Cache Hit Rate | Relative Improvement |
|---|---|---|---|
| Customer Support Queries | 78% | 42% | +86% |
| FAQ Responses | 85% | 55% | +55% |
| Product Recommendations | 72% | 38% | +89% |
| Content Generation | 65% | 25% | +160% |

Cost Reduction: Higher cache hit rates translate to fewer API calls and reduced token consumption. Organizations report 30-50% cost reductions through Bifrost's semantic caching compared to 15-20% with traditional string-matching approaches.
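The improvement column in the table is a relative change in hit rate, (semantic - string) / string. A second view that maps more directly to spend is the share of requests that still reach a provider, which is one minus the hit rate. The sketch below computes both for the customer-support row; it assumes every cache miss costs one provider call of similar size, which real traffic will only approximate.

```python
def relative_improvement(new_rate: float, old_rate: float) -> float:
    """Relative change in hit rate, e.g. 0.78 vs 0.42 -> ~0.857 (+86%)."""
    return (new_rate - old_rate) / old_rate


def provider_call_reduction(new_rate: float, old_rate: float) -> float:
    """Fractional drop in provider calls, assuming each cache miss costs one provider call."""
    old_misses = 1 - old_rate
    new_misses = 1 - new_rate
    return (old_misses - new_misses) / old_misses


# Customer-support row: 78% semantic hit rate vs 42% string-match hit rate.
print(f"{relative_improvement(0.78, 0.42):+.0%}")    # +86%
print(f"{provider_call_reduction(0.78, 0.42):.0%}")  # 62%
```

In this row, misses fall from 58% to 22% of requests, so provider calls drop by about 62%.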

Throughput Capacity

Maximum Requests Per Second

| Configuration | Bifrost | Portkey | Advantage |
|---|---|---|---|
| Single Instance | 2000 RPS | 500 RPS | 4x throughput |
| 4 vCPU Instance | 5000 RPS | 1200 RPS | 4.2x throughput |
| 8 vCPU Instance | 10000 RPS | 2400 RPS | 4.2x throughput |
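Per-instance throughput figures like these translate into fleet size with a short calculation. The sketch below assumes 30% headroom over peak and one spare instance for N+1 redundancy. Both are illustrative choices, not recommendations from either vendor, and the 10,000 RPS peak is an arbitrary example.

```python
import math


def instances_needed(peak_rps: float, per_instance_rps: float,
                     headroom: float = 0.3, spare: int = 1) -> int:
    """Instances required to serve peak_rps with fractional headroom, plus spares for redundancy."""
    return math.ceil(peak_rps * (1 + headroom) / per_instance_rps) + spare


# 4 vCPU instances, using the table's per-instance figures (5000 RPS vs 1200 RPS).
print(instances_needed(10_000, 5_000))  # 4
print(instances_needed(10_000, 1_200))  # 12
```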

Architecture and Deployment

Bifrost Architecture Advantages

Lightweight Edge Deployment

Bifrost's 120kb footprint enables deployment at network edges, minimizing latency for geographically distributed users. Traditional gateways requiring substantial runtime environments cannot deploy to edge locations, forcing requests through centralized infrastructure that increases roundtrip times.

Stateless Design

Bifrost implements stateless architecture enabling horizontal scaling without coordination overhead. Load balancers distribute requests across gateway instances without session affinity requirements, simplifying infrastructure management and improving resilience.

Native Observability

Prometheus metrics integration provides production-grade monitoring without additional tooling. Teams gain instant visibility into request rates, latency distributions, error frequencies, and provider-specific performance through standard observability infrastructure.

Deployment Flexibility

Multiple Deployment Models

Organizations deploy Bifrost through approaches matching their security and compliance requirements:

- Managed Cloud: Maxim-hosted deployment with zero infrastructure management

- Self-Hosted: Deploy in organizational infrastructure maintaining full control

- In-VPC: Private deployment within virtual private clouds for strict data residency

Zero-Configuration Startup

Bifrost requires no configuration files for basic deployment. Teams start immediately with dynamic provider configuration through web interfaces, APIs, or optional file-based configuration for advanced scenarios.

Configuration Management

Multiple configuration approaches accommodate different team workflows:

- Web UI: Visual configuration for non-technical users

- API-Driven: Programmatic configuration for infrastructure-as-code workflows

- File-Based: YAML/JSON configuration for version control and GitOps

Enterprise Capabilities

Governance and Budget Management

Hierarchical Budget Controls

Bifrost's budget management implements multi-level cost controls enabling organizations to allocate budgets across teams, projects, and customers:

- Organization-level budgets: Set overall spending limits with alerting

- Team budgets: Allocate specific amounts to development teams or departments

- Project budgets: Control costs for individual applications or initiatives

- Customer budgets: Implement per-customer spending caps for SaaS applications

Usage Tracking and Attribution

Granular usage tracking enables cost allocation and chargeback:

- Track consumption by team, project, user, or customer

- Export usage data for internal billing and reporting

- Monitor spending trends and anomalies with automated alerting

- Implement soft and hard budget limits with configurable enforcement
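A hierarchical budget check can be pictured as walking from the most specific scope (a customer or project) up to the organization. The request is rejected if any level would exceed its hard limit, and a warning is recorded once a soft limit is crossed. The sketch below illustrates that idea only. The `Budget` class, the scope names, and the 80% soft-limit ratio are hypothetical and do not reflect Bifrost's configuration schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Budget:
    name: str
    hard_limit: float             # spending cap in dollars; requests beyond it are rejected
    soft_ratio: float = 0.8       # warn once spend crosses this fraction of the hard limit
    spent: float = 0.0
    parent: Optional["Budget"] = None

    def chain(self):
        """Yield this budget and every ancestor up to the organization."""
        node = self
        while node is not None:
            yield node
            node = node.parent


def charge(budget: Budget, cost: float) -> list[str]:
    """Apply cost at every level, or raise if any hard limit would be exceeded.

    All levels are checked before any are charged, so a rejected request
    leaves no partial spend behind. Returns soft-limit warnings.
    """
    levels = list(budget.chain())
    for b in levels:
        if b.spent + cost > b.hard_limit:
            raise PermissionError(f"hard limit exceeded at {b.name}")
    warnings = []
    for b in levels:
        b.spent += cost
        if b.spent >= b.soft_ratio * b.hard_limit:
            warnings.append(f"soft limit reached at {b.name}")
    return warnings


org = Budget("org", hard_limit=1000.0)
team = Budget("team-search", hard_limit=200.0, parent=org)
customer = Budget("customer-acme", hard_limit=50.0, parent=team)
print(charge(customer, 45.0))  # ['soft limit reached at customer-acme']
```

A production gateway would also need atomic updates across concurrent requests and periodic resets per billing window.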

Security and Access Control

SSO Integration

Built-in SSO support for Google and GitHub enables centralized authentication without additional identity infrastructure. Organizations enforce consistent access policies and simplify user management.

Vault Integration

HashiCorp Vault support provides secure API key management for enterprise environments:

- Store provider API keys in Vault rather than configuration files

- Rotate credentials without service interruption

- Implement fine-grained access controls for key retrieval

- Maintain comprehensive audit trails for compliance

Virtual Key System

Virtual keys enable secure access delegation:

- Generate temporary keys with specific permissions and expiration

- Revoke access immediately without changing underlying provider credentials

- Track usage by virtual key for granular monitoring

- Implement rate limiting and budget controls per virtual key
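The virtual-key properties listed above (expiry, immediate revocation, per-key usage tracking, and rate limits) can be modeled with a small registry that maps gateway-issued keys to the real provider credential. This is an illustrative sketch with hypothetical names (`VirtualKeyRegistry`, the `vk-` prefix), not Bifrost's API. Rate limiting here uses a simple fixed one-minute window, one of several possible algorithms; token buckets are another.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class VirtualKey:
    provider_key: str        # real credential, never handed to clients
    expires_at: float        # Unix timestamp
    max_per_minute: int
    revoked: bool = False
    window_start: float = 0.0
    window_count: int = 0
    total_requests: int = 0  # per-key usage tracking


class VirtualKeyRegistry:
    def __init__(self, clock: Callable[[], float] = time.time):
        self.clock = clock
        self.keys: dict[str, VirtualKey] = {}

    def issue(self, provider_key: str, ttl_seconds: float, max_per_minute: int) -> str:
        vk = "vk-" + secrets.token_hex(8)
        self.keys[vk] = VirtualKey(provider_key, self.clock() + ttl_seconds, max_per_minute)
        return vk

    def revoke(self, vk: str) -> None:
        # Takes effect on the next request; the underlying provider key is unchanged.
        self.keys[vk].revoked = True

    def resolve(self, vk: str) -> str:
        """Return the provider key for an allowed request, or raise PermissionError."""
        key = self.keys.get(vk)
        now = self.clock()
        if key is None or key.revoked or now >= key.expires_at:
            raise PermissionError("invalid, revoked, or expired key")
        if now - key.window_start >= 60:
            key.window_start, key.window_count = now, 0  # start a new one-minute window
        if key.window_count >= key.max_per_minute:
            raise PermissionError("rate limit exceeded")
        key.window_count += 1
        key.total_requests += 1
        return key.provider_key


now = [1000.0]  # fake clock so the example is deterministic
reg = VirtualKeyRegistry(clock=lambda: now[0])
vk = reg.issue("sk-real-provider-key", ttl_seconds=3600, max_per_minute=2)
reg.resolve(vk)
reg.resolve(vk)  # a third call in the same minute would raise PermissionError
```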

Compliance Capabilities

Audit Trails

Comprehensive logging captures:

- All API requests with complete metadata including user, timestamp, and request details

- Configuration changes with attribution and versioning

- Access events including authentication attempts and authorization decisions

- Budget events including threshold breaches and enforcement actions

Data Residency

Multi-region deployment options support data residency requirements:

- Deploy gateway instances in specific geographic regions

- Route requests through regional infrastructure maintaining data locality

- Configure provider routing based on data residency policies

- Maintain audit evidence of data residency compliance
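Residency-aware routing comes down to filtering candidate provider endpoints by the region a request is pinned to before any load balancing happens, and failing closed when nothing compliant is available. A minimal sketch follows. The endpoint names and regions are hypothetical, and a real deployment would load them from configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    region: str    # where the provider processes the data, e.g. "eu" or "us"
    healthy: bool = True


# Hypothetical endpoint inventory for illustration.
ENDPOINTS = [
    Endpoint("provider-a-us-east", "us"),
    Endpoint("provider-a-eu-west", "eu"),
    Endpoint("provider-b-eu-central", "eu"),
]


def route(required_region: str, endpoints: list[Endpoint] = ENDPOINTS) -> Endpoint:
    """Pick the first healthy endpoint in the required region; never fall back across regions."""
    for ep in endpoints:
        if ep.region == required_region and ep.healthy:
            return ep
    # Fail closed rather than silently sending data outside the required region.
    raise LookupError(f"no healthy endpoint in region {required_region!r}")


print(route("eu").name)  # provider-a-eu-west
```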

Integration Ecosystem

SDK and Framework Support

Drop-in Replacement

Bifrost implements OpenAI-compatible APIs enabling single-line code changes for migration:

```python
# Before: calling OpenAI directly
from openai import OpenAI

client = OpenAI(api_key="sk-...")

# After: the same client routed through Bifrost; only the constructor changes
client = OpenAI(
    base_url="https://gateway.maxim.ai/v1",
    api_key="maxim-key-...",
)
```

Native SDK Integrations

Popular AI development frameworks need little or no code change:

- LangChain: Configure Bifrost URL in environment variables

- LlamaIndex: Set base URL in client initialization

- Haystack: Update provider configuration

- CrewAI: Modify API endpoint settings

Maxim Platform Integration

Unified Development Workflow

Bifrost operates as a foundational component within Maxim's comprehensive AI platform:

Experimentation Integration

Prompt engineering workflows leverage Bifrost for:

- Testing prompts across multiple providers without code changes

- Comparing output quality, latency, and cost across provider combinations

- Deploying optimized prompts with automatic provider routing

- Versioning and tracking prompt performance through gateway telemetry

Evaluation Integration

Evaluation infrastructure connects to gateway metrics:

- Run evaluations on production traffic flowing through Bifrost

- Correlate quality metrics with provider selection and routing decisions

- Detect regressions when provider performance degrades

- Automate provider failover based on quality thresholds

Simulation Integration

Agent simulation utilizes Bifrost for realistic testing:

- Simulate production-scale traffic patterns through gateway infrastructure

- Test failover and load balancing behavior under various failure scenarios

- Validate cost optimization through caching and provider routing

- Measure end-to-end latency including gateway overhead

Observability Integration

Production monitoring provides unified visibility:

- Consistent trace visualization from development through production

- Gateway performance metrics integrated with application telemetry

- Cost tracking correlated with application features and user behavior

- Alert correlation across gateway and application layers

Pricing and Value

Transparent Cost Structure

Bifrost Pricing

Bifrost implements usage-based pricing without markup:

- Free tier: Full features with usage limits for prototyping

- Usage-based: Pay only for requests processed through gateway

- Enterprise: Custom pricing for high-volume deployments with SLA commitments

- No markup: Provider costs pass through without additional fees

Total Cost of Ownership

Organizations evaluate gateway costs holistically:

| Cost Component | Bifrost | Portkey |
|---|---|---|
| Gateway Service | Usage-based | $49+/month |
| Infrastructure | 2 vCPUs (1000 RPS) | 10 vCPUs (1000 RPS) |
| Monitoring | Included | Additional tools |
| Evaluation | Included | Separate platform |
| Experimentation | Included | Separate platform |
| Monthly Total (5000 RPS) | ~$200 | ~$800+ |

Value Proposition Analysis

Bifrost delivers superior value through:

- Lower infrastructure costs: 5-6x reduction in compute requirements

- Integrated platform: Eliminate separate tool costs for evaluation and experimentation

- Higher cache efficiency: roughly 1.5-2.6x higher cache hit rates in the scenarios benchmarked above, reducing API costs

- Reduced operational overhead: Zero-configuration deployment minimizes engineering time

Why Bifrost is the Better Choice

Performance Advantages

Organizations building production AI applications require infrastructure that maintains responsiveness at scale. Bifrost's 45ms average overhead is roughly 3.3x lower than Portkey's 150ms. For real-time applications including conversational AI, customer support automation, and interactive agents, this performance difference directly affects user experience.

Resource efficiency delivers tangible cost benefits. Bifrost handles 1000 requests per second on 2 vCPUs while Portkey requires 10 vCPUs for equivalent throughput. At production scale handling 5000 RPS, organizations save $3000-4000 monthly in infrastructure costs with Bifrost deployment.

Intelligent Caching

Semantic caching represents a fundamental architectural advantage. Traditional string-matching caches miss semantically equivalent queries phrased differently. Bifrost analyzes meaning to serve cached responses for similar queries, achieving 78% cache hit rates for customer support scenarios compared to Portkey's 42% with string matching. This 86% improvement translates to substantially reduced API consumption and costs.

Unified Platform Benefits

Bifrost's integration with Maxim's comprehensive AI platform eliminates workflow fragmentation. Teams developing AI applications require capabilities spanning:

- Prompt engineering and experimentation

- Systematic quality evaluation

- Simulation testing before production deployment

- Production monitoring and observability

Portkey operates as an isolated gateway requiring separate tools for these functions. Organizations face integration complexity, data silos, and duplicated workflows when stitching together multiple platforms.

Bifrost provides consistent workflows from development through production. Prompt engineering in Maxim's Experimentation platform flows naturally to evaluation, simulation, and production monitoring without tool transitions or data migration.

Enterprise Capabilities

Comprehensive Governance

Bifrost's budget management implements hierarchical controls enabling organizations to allocate spending across teams, projects, and customers. Granular tracking supports cost allocation and chargeback for internal billing.

Security Integration

Vault support provides secure API key management for enterprise environments. Organizations store provider credentials in HashiCorp Vault rather than configuration files, implementing rotation without service interruption and maintaining comprehensive audit trails.

Deployment Flexibility

Bifrost supports multiple deployment models accommodating diverse security requirements:

- Managed cloud for zero infrastructure overhead

- Self-hosted for organizational control

- In-VPC for strict data residency compliance

Zero-Configuration Deployment

Bifrost's setup process eliminates configuration complexity. Teams start immediately, configuring providers dynamically through a web UI, an API, or optional configuration files. This contrasts with Portkey's complex setup requirements for production-grade deployments.

Advanced Capabilities

Model Context Protocol

MCP integration enables sophisticated agentic workflows. AI models access external tools including filesystems, web search, and databases through standardized interfaces. This functionality supports advanced use cases requiring dynamic tool usage.

Extensible Architecture

Custom plugins provide middleware extensibility for analytics, monitoring, and custom logic. Organizations implement specialized requirements without forking or modifying gateway code.

Migration Path

From Portkey to Bifrost

Organizations migrating from Portkey to Bifrost follow a systematic transition path minimizing disruption:

Phase 1: Parallel Deployment

Deploy Bifrost alongside existing Portkey infrastructure:

- Configure Bifrost with identical provider settings

- Route test traffic through Bifrost validating functionality

- Compare performance metrics and cache efficiency

- Validate observability integration and monitoring dashboards

Phase 2: Traffic Migration

Gradually shift production traffic to Bifrost:

- Implement feature flags controlling gateway routing

- Route percentage of traffic through Bifrost (10% → 25% → 50% → 100%)

- Monitor error rates, latency, and cost metrics during transition

- Maintain Portkey as fallback during migration period
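One common way to implement the percentage rollout above is to hash a stable identifier (a user or tenant ID) into a bucket from 0 to 99 and send the request to Bifrost when the bucket falls below the current rollout percentage. Hashing, rather than random sampling, keeps each user on the same gateway from request to request, and raising the percentage only moves users toward Bifrost, never back. The sketch below assumes that approach; both base URLs are placeholders to be replaced with your own endpoints.

```python
import hashlib

BIFROST_URL = "https://gateway.maxim.ai/v1"  # placeholder, as in this article's examples
PORTKEY_URL = "https://api.portkey.ai/v1"    # placeholder for the existing gateway


def bucket(user_id: str) -> int:
    """Map a stable ID to a bucket in [0, 100) via SHA-256, so assignment is sticky."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def gateway_for(user_id: str, rollout_percent: int) -> str:
    """Send the user to Bifrost if their bucket is below the rollout percentage."""
    return BIFROST_URL if bucket(user_id) < rollout_percent else PORTKEY_URL


for pct in (10, 25, 50, 100):
    share = sum(gateway_for(f"user-{i}", pct) == BIFROST_URL for i in range(10_000)) / 10_000
    print(f"{pct:>3}% rollout -> {share:.1%} of simulated users on Bifrost")
```

Rolling back is the same operation in reverse: lowering `rollout_percent` returns the most recently moved users to Portkey first.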

Phase 3: Full Transition

Complete migration to Bifrost:

- Route all production traffic through Bifrost

- Decommission Portkey infrastructure

- Migrate monitoring and alerting to Bifrost telemetry

- Update documentation and runbooks

Migration Timeline

Typical migrations complete within 2-4 weeks:

- Week 1: Bifrost deployment and configuration

- Week 2: Parallel operation and validation

- Week 3: Gradual traffic migration

- Week 4: Complete transition and Portkey decommissioning

Code Changes Required

Drop-in replacement requires minimal code modifications:

Before (Portkey):

```python
from portkey_ai import Portkey

client = Portkey(api_key="portkey-key-...")
```

After (Bifrost):

```python
from openai import OpenAI

# Existing client.chat.completions.create(...) calls now go through Bifrost.
client = OpenAI(
    base_url="https://gateway.maxim.ai/v1",
    api_key="maxim-key-...",
)
```

Framework Integrations:

LangChain, LlamaIndex, and other frameworks built on the OpenAI SDK can typically be redirected through environment variables, without code changes:

```shell
# Point OpenAI-compatible clients at the Bifrost endpoint
export OPENAI_BASE_URL="https://gateway.maxim.ai/v1"
export OPENAI_API_KEY="maxim-key-..."
```

Conclusion

Organizations building production AI applications require infrastructure that combines performance, reliability, and comprehensive capabilities. This analysis shows that Bifrost by Maxim AI is the superior alternative to Portkey across critical evaluation dimensions.

Organizations evaluating AI gateway solutions should prioritize platforms providing comprehensive capabilities, superior performance, and unified workflows. Bifrost by Maxim AI meets these requirements while delivering measurably better results than alternatives including Portkey.

Ready to experience Bifrost's performance and capabilities firsthand? Schedule a demo to see how leading organizations leverage Bifrost for production AI applications, or sign up to start deploying with zero configuration today.