Best Tools to Monitor and Debug AI Agents in Production

TL;DR

Autonomous agents often fail through silent reasoning errors that traditional monitoring cannot detect. Maxim AI offers real-time trace replay and automated root cause analysis. Langfuse provides open-source tracing. LangSmith delivers LangChain-native debugging. Datadog unifies AI and infrastructure monitoring. Weights & Biases Weave specializes in multi-agent workflows.


Why Agent Debugging Differs

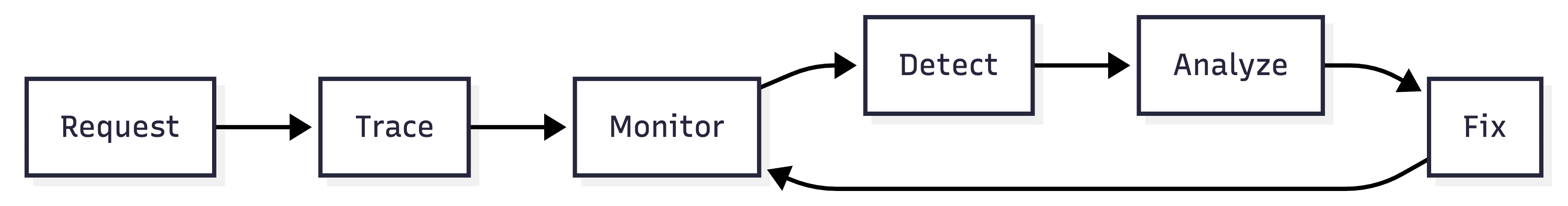

Debugging autonomous agents requires fundamentally different approaches than traditional software. According to TrueFoundry's observability research, agents fail through logical errors rather than technical crashes. They make incorrect decisions that execute successfully, select inappropriate tools with valid syntax, and follow reasoning chains that appear coherent but lead to wrong conclusions.

Silent Logic Errors: An agent successfully calls all APIs, receives valid responses, and completes execution without errors while making fundamentally wrong decisions. Traditional monitoring shows green status while users receive incorrect outputs.

Tool Selection Mistakes: Agents choose database queries when web searches would be appropriate, pass technically valid but semantically wrong parameters, or create infinite loops through repeated tool calls that each succeed individually.

Reasoning Chain Breakdown: Early reasoning errors cascade through multi-step workflows. An agent misinterprets retrieved context, generates accurate summaries of the wrong information, and completes tasks successfully while solving the wrong problem.
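The looping failure mode described under Tool Selection Mistakes can be caught mechanically, even though every individual call succeeds. The sketch below is vendor-agnostic and assumes tool calls have already been pulled out of a trace into a hypothetical `ToolCall` record with arguments normalized to a hashable tuple; it counts identical (tool, arguments) pairs and flags any that repeat more than a chosen threshold.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    tool: str
    args: tuple  # arguments normalized to a hashable tuple of (key, value) pairs


def find_repeated_calls(calls: list, max_repeats: int = 3) -> list:
    """Return tool calls issued with identical arguments more than max_repeats times."""
    counts = Counter(calls)
    return [call for call, n in counts.items() if n > max_repeats]


# Every call "succeeded", but the agent kept issuing the same search.
trace = [ToolCall("web_search", (("q", "refund policy"),))] * 5 + [
    ToolCall("db_query", (("table", "orders"),))
]
print(find_repeated_calls(trace))
```

Counting exact duplicates is deliberately simple; a production detector might also look for near-duplicate arguments or for cycles across several different tools.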

As Microsoft Azure research explains, traditional observability tracks what systems do. Agent observability must reveal why and how agents make decisions.

Best Tools for Production Debugging

1. Maxim AI

Maxim AI provides comprehensive production debugging built specifically for autonomous agents, enabling teams to identify and resolve issues rapidly.

Real-Time Observability: Agent observability captures every interaction with complete context. Monitor individual agent actions, tool calls, reasoning steps, and decision points across distributed systems. Real-time visibility enables catching issues as they occur rather than discovering them through user complaints.

Trace Replay: Replay agent executions step-by-step. Rewind to any point in the workflow, inspect reasoning at each decision, examine tool inputs and outputs, and identify exactly where logic breaks. This distributed tracing capability proves essential for debugging complex multi-agent systems.
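To make the idea of "finding where logic breaks" concrete, here is a minimal, vendor-agnostic sketch of step-by-step replay; it is not Maxim's API. It assumes a trace has been recorded as an ordered list of hypothetical `Step` objects and walks them with a caller-supplied invariant, returning the first step that violates it. In the example, every step completes without error, yet the agent parsed the wrong order ID in step 0.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    name: str  # e.g. "parse_request", "tool:lookup_order"
    inputs: dict
    output: object


@dataclass
class RecordedTrace:
    steps: list = field(default_factory=list)

    def replay(self, check: Callable[[Step], bool]) -> Optional[tuple]:
        """Walk recorded steps in order and return (index, step) for the first step that fails `check`."""
        for i, step in enumerate(self.steps):
            if not check(step):
                return i, step
        return None


trace = RecordedTrace([
    Step("parse_request", {"text": "Where is order 1042?"}, {"order_id": "1024"}),
    Step("tool:lookup_order", {"order_id": "1024"}, {"status": "delivered"}),
    Step("respond", {"status": "delivered"}, "Your order was delivered."),
])


def order_id_matches(step: Step) -> bool:
    # Hypothetical invariant: the parsed order ID must appear in the user's message.
    if step.name == "parse_request":
        return step.output["order_id"] in step.inputs["text"]
    return True


print(trace.replay(order_id_matches))
```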

Automated Root Cause Analysis: An analysis engine identifies failure patterns automatically, surfaces common error modes across requests, and recommends specific fixes based on those patterns. Teams spend less time investigating and more time fixing.
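The simplest form of surfacing "common error modes across requests" is grouping failures by a signature and ranking by frequency. The sketch below does only that; it is an illustrative stand-in, not Maxim's analysis engine, and the `failed_step` and `error` field names are assumptions about how failures were labeled.

```python
from collections import Counter

failures = [
    {"trace_id": "t1", "failed_step": "tool:lookup_order", "error": "wrong order_id"},
    {"trace_id": "t2", "failed_step": "retrieve", "error": "empty context"},
    {"trace_id": "t3", "failed_step": "tool:lookup_order", "error": "wrong order_id"},
]


def top_failure_modes(failures: list, n: int = 3) -> list:
    """Count failures by (step, error) signature and return the most common modes."""
    signatures = Counter((f["failed_step"], f["error"]) for f in failures)
    return signatures.most_common(n)


print(top_failure_modes(failures))
# [(('tool:lookup_order', 'wrong order_id'), 2), (('retrieve', 'empty context'), 1)]
```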

Production Data Curation: Automatically transform failures into evaluation test cases. Capture interactions with full context, enrich with correct outcomes through human review, and add to evaluation datasets. This closes the loop between debugging and systematic quality improvement.
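The curation loop amounts to pairing a failed trace's input and context with a human-corrected answer and appending it to a dataset. A minimal sketch, assuming a JSON Lines dataset and hypothetical field names (`expected_output`, `source_trace_id`); Maxim's own dataset schema may differ.

```python
import json


def to_eval_case(trace: dict, corrected_output: str) -> dict:
    """Turn a failed production trace plus a human-reviewed answer into an evaluation case."""
    return {
        "input": trace["input"],
        "context": trace.get("context", []),
        "expected_output": corrected_output,  # supplied by human review
        "source_trace_id": trace["trace_id"],  # link back to the original trace
    }


failed = {
    "trace_id": "t1",
    "input": "Where is order 1042?",
    "context": ["order 1024: delivered"],
    "output": "Your order was delivered.",
}
case = to_eval_case(failed, "Order 1042 is still in transit.")
line = json.dumps(case)  # one JSON object per line in a .jsonl dataset
print(line)
```

Keeping `source_trace_id` on each case means a later regression in that test case can be traced straight back to the production incident that produced it.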

Cost and Performance: Comprehensive dashboards break down token usage by user, feature, and conversation. Bifrost, Maxim's LLM gateway, adds semantic caching, which reuses responses for semantically similar requests; Maxim cites cost reductions of about 30% while maintaining quality.
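Semantic caching works by comparing a new prompt's embedding to cached prompts and returning a stored response when similarity clears a threshold. The sketch below illustrates the technique only; it is not Bifrost's implementation. It assumes a toy bag-of-words vector in place of a real embedding model, and the 0.8 threshold is an arbitrary example value.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a real semantic cache would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def get(self, prompt: str):
        """Return the response cached for the most similar prompt, or None on a miss."""
        query = embed(prompt)
        scored = [(cosine(query, emb), resp) for emb, resp in self.entries]
        score, response = max(scored, key=lambda p: p[0], default=(0.0, None))
        return response if score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


cache = SemanticCache(threshold=0.8)
cache.put("what is your refund policy", "Refunds within 30 days.")
print(cache.get("What is your refund policy?"))  # cache hit, no LLM call needed
print(cache.get("How do I reset my password"))   # miss: would go to the model
```

The threshold is the key design choice: set it too low and users get answers to questions they did not ask, which is itself a silent logic error.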

Node-Level Granularity: Attach metrics to specific nodes in multi-agent workflows. Isolate which component causes failures in distributed systems.

Best For: Teams running complex multi-agent systems, organizations requiring rapid issue resolution, and companies needing systematic improvement from production failures.


2. Langfuse

Langfuse provides comprehensive open-source observability with deep tracing capabilities and flexible deployment for teams requiring infrastructure control.

Session-Level Visibility: Capture multi-turn agent interactions with user-level attribution. Debug issues reported by individual customers with complete conversation history. Essential for conversational agents and support applications.

Detailed Execution Traces: Log LLM calls, tool usage, reasoning steps, and intermediate outputs across workflows. Nested operation visibility enables debugging failures occurring deep within execution chains.
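Nested operation visibility comes from recording each operation as a span that knows its parent. The sketch below shows that mechanism with Python's standard `contextvars` module; it is a generic illustration, not the Langfuse SDK, and the in-memory `spans` list stands in for whatever exporter a real tracer would use.

```python
import contextvars
import itertools
import time
from contextlib import contextmanager

_current_span = contextvars.ContextVar("current_span", default=None)
_ids = itertools.count(1)
spans: list = []  # in-memory sink; a real tracer would export finished spans


@contextmanager
def span(name: str, **attrs):
    """Record a span whose parent is whichever span is active when it starts."""
    record = {"id": next(_ids), "parent": _current_span.get(), "name": name, "attrs": attrs}
    token = _current_span.set(record["id"])
    start = time.perf_counter()
    try:
        yield record
    except Exception as exc:
        record["error"] = repr(exc)  # keep failures deep in the chain visible
        raise
    finally:
        record["duration_s"] = time.perf_counter() - start
        _current_span.reset(token)
        spans.append(record)


with span("agent_run", user="u42"):
    with span("plan"):
        pass
    with span("tool:search", query="refund policy") as s:
        s["attrs"]["result_count"] = 3

for s in spans:
    print(s["id"], s["parent"], s["name"])
```

Using a context variable rather than a global stack keeps parent links correct when agent steps run concurrently under `asyncio`.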

Production Cost Tracking: Monitor token consumption, API costs, and execution time per user, feature, and environment. Set per-user or per-feature budgets with alerting.
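Per-user budgets with alerting reduce to accumulating cost per key and comparing against a limit. A minimal sketch follows; the per-1K-token prices are placeholders, not any provider's real rates, and `CostTracker` is a hypothetical helper rather than a Langfuse class.

```python
from collections import defaultdict

# Placeholder prices in USD per 1K tokens; substitute your provider's actual rates.
PRICE_PER_1K = {"input": 0.0005, "output": 0.0015}


class CostTracker:
    def __init__(self, budgets: dict):
        self.budgets = budgets  # USD budget per user
        self.spend = defaultdict(float)
        self.alerts = []

    def record(self, user: str, input_tokens: int, output_tokens: int) -> float:
        """Add one call's cost to the user's total and raise an alert if over budget."""
        cost = (input_tokens * PRICE_PER_1K["input"] + output_tokens * PRICE_PER_1K["output"]) / 1000
        self.spend[user] += cost
        budget = self.budgets.get(user)
        if budget is not None and self.spend[user] > budget:
            self.alerts.append(f"{user} over budget: ${self.spend[user]:.4f} > ${budget:.4f}")
        return cost


tracker = CostTracker({"u1": 0.002})
tracker.record("u1", 1000, 500)  # $0.00125, under budget
tracker.record("u1", 1000, 500)  # total $0.0025, triggers an alert
print(tracker.alerts)
```

The same structure works per feature or per environment by changing the key passed to `record`.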

Prompt Management: Version control for agent prompts with A/B testing capabilities. Track which prompt versions produce the best results. Deploy updates without code changes.
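A/B testing prompt versions usually relies on deterministic bucketing, so each user consistently sees the same variant across sessions. The sketch below hashes the user ID to pick a version; the `PROMPTS` dictionary and `assign_variant` helper are hypothetical and do not reflect how Langfuse stores or serves prompts.

```python
import hashlib

PROMPTS = {
    "v1": "You are a support agent. Answer concisely.",
    "v2": "You are a support agent. Cite the policy section you relied on.",
}


def assign_variant(user_id: str, split: float = 0.5) -> str:
    """Deterministically bucket a user into v1 or v2 so they always see the same prompt."""
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # uniform in [0, 1)
    return "v2" if bucket < split else "v1"


variant = assign_variant("u42")
print(variant, PROMPTS[variant])
```

Logging the chosen variant on each trace is what lets you later compare quality scores between prompt versions.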

Deployment Flexibility: Self-host via Docker or Kubernetes for complete data control, or use a managed cloud service for simplified operations.

Best For: Teams requiring self-hosting for data security, open-source priority organizations, and development teams comfortable managing infrastructure.

3. LangSmith

LangSmith provides observability purpose-built for LangChain applications with seamless integration and detailed execution visibility.

Native LangChain Integration: Automatic tracing for chains and agents built with LangChain. Enable tracing with a few lines of configuration and every workflow is instrumented: retrieval, LLM calls, and tool usage all appear without manual instrumentation.

Execution Path Visualization: Debug complex chains by following execution paths visually. Understand branching logic, conditional flows, and tool selection patterns.

Evaluation Framework: Run evaluations with datasets and LLM-as-judge scorers. Test prompts in a playground environment. Compare different approaches side by side.
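Structurally, a dataset evaluation runs the agent on each case and passes the output to a scorer. The sketch below shows that loop with a pluggable judge; it is not LangSmith's evaluation API. To keep it runnable offline, an exact-match function stands in for the judge, where an LLM-as-judge would instead prompt a model with a grading rubric.

```python
from typing import Callable


def run_eval(dataset: list, agent: Callable[[str], str],
             judge: Callable[[str, str, str], float]):
    """Score each agent output with a judge; return the mean score and per-case results."""
    results = []
    for case in dataset:
        output = agent(case["input"])
        score = judge(case["input"], output, case["expected"])
        results.append({"input": case["input"], "output": output, "score": score})
    mean = sum(r["score"] for r in results) / len(results) if results else 0.0
    return mean, results


def exact_match_judge(question: str, output: str, expected: str) -> float:
    # Stand-in scorer; an LLM-as-judge would ask a model to grade `output` against `expected`.
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


dataset = [{"input": "2+2?", "expected": "4"}, {"input": "Capital of France?", "expected": "Paris"}]
toy_agent = {"2+2?": "4", "Capital of France?": "Lyon"}.get  # hypothetical agent
mean, results = run_eval(dataset, toy_agent, exact_match_judge)
print(mean)  # 0.5
```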

Production Monitoring: Track costs and latency per request with detailed breakdowns. User feedback collection ties directly to traces for rapid issue identification.

Best For: Teams building primarily with LangChain, organizations wanting framework-native tooling, and development teams prioritizing minimal setup friction.

4. Datadog

Datadog brings enterprise infrastructure monitoring to AI agents, providing unified visibility across systems, applications, and AI workloads.

End-to-End System Visibility: Monitor infrastructure (CPU, memory, network), application metrics (response times, errors), and AI telemetry (token usage, model latency, tool calls) in a single platform. Correlate AI performance with underlying infrastructure health.

Agentic Workflow Tracking: Datadog LLM Observability captures hard failures (exceptions during tool calls) and soft failures (incorrect behaviors without errors). Track functional correctness, cost, latency, and safety mechanisms.
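The distinction between hard and soft failures can be expressed as a small classification rule over spans. The sketch below is a generic illustration, not Datadog's API: a span with a recorded exception is a hard failure, while one that completed but fails a domain validator is a soft failure. The refund validator is a hypothetical example of a business rule.

```python
def classify(span: dict, validators: list) -> str:
    """Label a span 'hard_failure', 'soft_failure', or 'ok'."""
    if span.get("error"):
        return "hard_failure"  # exception raised during the call
    if any(not check(span) for check in validators):
        return "soft_failure"  # completed, but the behavior was wrong
    return "ok"


def refund_within_total(span: dict) -> bool:
    # Hypothetical rule: a refund must not exceed the order total.
    if span["name"] != "tool:issue_refund":
        return True
    return span["args"]["amount"] <= span["args"]["order_total"]


spans = [
    {"name": "tool:lookup_order", "error": "TimeoutError"},
    {"name": "tool:issue_refund", "args": {"amount": 500, "order_total": 50}},
    {"name": "tool:issue_refund", "args": {"amount": 20, "order_total": 50}},
]
print([classify(s, [refund_within_total]) for s in spans])
# ['hard_failure', 'soft_failure', 'ok']
```

The second span would look healthy on an error-rate dashboard; only the validator reveals the problem.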

Integration Ecosystem: 900+ integrations enable correlating agent performance with databases, APIs, caching layers, and external services. Identify whether failures originate from agents or dependent systems.

Alerting Infrastructure: Configure sophisticated alerts on quality metrics, cost thresholds, and performance degradation. Integration with PagerDuty, Slack, and incident management tools enables rapid response.

Best For: Enterprises with existing Datadog infrastructure, teams managing both traditional services and AI agents, and organizations requiring comprehensive system-wide monitoring.

5. Weights & Biases Weave

Weights & Biases Weave specializes in monitoring multi-agent LLM systems where multiple agents interact, make decisions, and pass data across complex workflows.

Hierarchical Agent Tracking: Understand nested agent calls and dependencies. Track which agents initiate sub-tasks and how information flows through agent hierarchies.

Individual Agent Performance: Monitor each agent's call frequency, success rates, and latency. Identify which agents create bottlenecks in pipelines. Optimize resource allocation based on actual usage patterns.
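Per-agent call frequency, success rate, and latency can be computed directly from flat call records. A minimal sketch, assuming each call has been tagged with a hypothetical `agent` field; it is not the Weave SDK. Ranking agents by median latency points to the bottleneck.

```python
from collections import defaultdict
from statistics import median

calls = [
    {"agent": "planner", "ok": True, "latency_ms": 120},
    {"agent": "researcher", "ok": True, "latency_ms": 2300},
    {"agent": "researcher", "ok": False, "latency_ms": 4100},
    {"agent": "writer", "ok": True, "latency_ms": 800},
]


def per_agent_stats(calls: list) -> dict:
    """Aggregate call count, success rate, and median latency for each agent."""
    grouped = defaultdict(list)
    for c in calls:
        grouped[c["agent"]].append(c)
    return {
        agent: {
            "calls": len(cs),
            "success_rate": sum(c["ok"] for c in cs) / len(cs),
            "median_latency_ms": median(c["latency_ms"] for c in cs),
        }
        for agent, cs in grouped.items()
    }


stats = per_agent_stats(calls)
slowest = max(stats, key=lambda a: stats[a]["median_latency_ms"])
print(slowest, stats[slowest])
```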

Input-Output Flow Tracking: Monitor data transformations through agent chains. View inputs, outputs, and modifications at each step. Understand how information evolves across multiple agent interactions.

Agent Collaboration Debugging: Debug failures in multi-agent coordination. Track delegation patterns, information handoffs, and collaborative problem-solving.

Cost Attribution: Break down token usage and API costs per agent in multi-agent systems. Understand which specialized agents drive expenses.

Best For: Teams building multi-agent systems, organizations with specialized agent architectures, and development teams requiring visibility into agent collaboration.

Platform Comparison

| Feature | Maxim | Langfuse | LangSmith | Datadog | Weave |
|---|---|---|---|---|---|
| Primary Focus | Real-time debug | Open-source | LangChain | Infrastructure | Multi-agent |
| Trace Replay | Step-by-step | Standard | Paths | System | Hierarchical |
| Root Cause Analysis | Automated | Manual | Visual | Correlation | Agent-level |
| Production Alerts | Real-time quality | Configurable | Standard | Enterprise | Custom |
| Cost Tracking | Granular + caching | Detailed | Per-request | Infrastructure-wide | Per-agent |
| Multi-Agent Support | Node-level | Session-level | Chain-level | Workflow-level | Native |
| Data Curation | Automated | Manual | Datasets | N/A | Limited |
| Framework Support | All major | Agnostic | LangChain-native | All | Multiple |
| Best For | Complex debugging | Self-hosting | LangChain users | Enterprise | Multi-agent systems |

Implementation

Phase 1: Comprehensive Instrumentation - Instrument all agent workflows with complete trace capture, including LLM calls, tool usage, reasoning steps, and intermediate state. Ensure context preservation across distributed operations. Use native SDKs for minimal overhead.
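Context preservation across distributed operations typically means passing a trace ID between services, for example from an orchestrator to a sub-agent running elsewhere. The sketch below builds and parses a header in the W3C Trace Context `traceparent` format (`version-trace_id-parent_id-flags`). It is simplified: it accepts only version `00` and omits `tracestate`, and in practice a tracing SDK such as OpenTelemetry handles this for you.

```python
import re
import secrets
from typing import Optional

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(trace_id: Optional[str] = None, sampled: bool = True) -> str:
    """Build a traceparent header, reusing trace_id when continuing an existing trace."""
    trace_id = trace_id or secrets.token_hex(16)  # 32 hex chars
    span_id = secrets.token_hex(8)                # 16 hex chars
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def parse_traceparent(header: str) -> Optional[dict]:
    """Parse a version-00 traceparent header; return None if it is malformed."""
    m = TRACEPARENT_RE.match(header.strip())
    if not m:
        return None
    trace_id, parent_id, flags = m.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:  # all-zero IDs are invalid
        return None
    return {"trace_id": trace_id, "parent_id": parent_id, "sampled": (int(flags, 16) & 1) == 1}


# Orchestrator attaches the header; the sub-agent continues the same trace.
outgoing = {"traceparent": new_traceparent()}
ctx = parse_traceparent(outgoing["traceparent"])
child = new_traceparent(trace_id=ctx["trace_id"])
print(child)
```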

Phase 2: Baseline Metrics - Establish normal operating ranges for task completion rates, reasoning quality scores, tool usage patterns, cost per conversation, and response latency. Baselines enable automatic anomaly detection.
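One simple way baselines enable anomaly detection is a z-score test: summarize a metric's historical mean and standard deviation, then flag values that fall too many standard deviations away. The history below is made-up illustrative data, and the threshold of 3 is a common but arbitrary choice.

```python
from statistics import mean, stdev


def baseline(values: list) -> dict:
    """Summarize a metric's normal range from historical samples."""
    return {"mean": mean(values), "stdev": stdev(values)}


def is_anomalous(value: float, base: dict, z_threshold: float = 3.0) -> bool:
    """Flag values more than z_threshold standard deviations from the baseline mean."""
    if base["stdev"] == 0:
        return value != base["mean"]
    return abs(value - base["mean"]) / base["stdev"] > z_threshold


# Illustrative daily task-completion rates from a healthy week.
history = [0.91, 0.93, 0.92, 0.90, 0.94, 0.92, 0.93]
base = baseline(history)
print(is_anomalous(0.92, base), is_anomalous(0.78, base))  # False True
```

Metrics with strong daily or weekly seasonality need baselines per time window; a single global mean will over-alert at peak hours.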

Phase 3: Alert Configuration - Set up alerts for quality degradation, cost spikes, performance regression, and error rate increases. Configure thresholds based on baseline metrics. Route alerts to appropriate teams via Slack, PagerDuty, or email.
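Alert rules can be kept as data so thresholds and destinations are easy to review and tune. A minimal sketch with a hypothetical `AlertRule` type: each rule compares a metric against a threshold and names a channel, and firing alerts are grouped by channel. Actually delivering to Slack or PagerDuty is left out, since that depends on each service's own API.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str
    threshold: float
    direction: str  # "above" or "below"
    channel: str    # e.g. "pagerduty", "slack"

    def fires(self, value: float) -> bool:
        return value > self.threshold if self.direction == "above" else value < self.threshold


def route_alerts(metrics: dict, rules: list) -> dict:
    """Evaluate each rule against current metrics and group firing alerts by channel."""
    routed = {}
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and rule.fires(value):
            routed.setdefault(rule.channel, []).append(
                f"{rule.metric}={value} ({rule.direction} {rule.threshold})"
            )
    return routed


rules = [
    AlertRule("task_completion_rate", 0.85, "below", "pagerduty"),
    AlertRule("cost_per_conversation_usd", 0.10, "above", "slack"),
    AlertRule("p95_latency_s", 8.0, "above", "slack"),
]
routed = route_alerts(
    {"task_completion_rate": 0.78, "cost_per_conversation_usd": 0.06, "p95_latency_s": 9.5}, rules
)
print(routed)
```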

Phase 4: Debug Workflows - Establish systematic debugging processes. Use trace replay to understand failures, identify root causes methodically, document common patterns, and share learnings across teams. Maxim's agent tracing guide provides structured approaches.

Phase 5: Continuous Improvement - Transform insights into improvements. Add failures to evaluation datasets automatically, validate fixes in staging, monitor that fixes prevent recurrence, and iterate on prompts and configurations based on patterns.

See Maxim's AI reliability framework for a more systematic approach to reliability.


Conclusion

The five platforms represent different approaches to production debugging. Maxim provides real-time trace replay and automated root cause analysis. Langfuse offers open-source flexibility with deep tracing. LangSmith delivers native LangChain debugging. Datadog unifies infrastructure and AI monitoring. Weights & Biases Weave specializes in multi-agent workflows.

Maxim's comprehensive solution combines real-time observability with automated data curation, enabling teams to debug rapidly through trace replay, identify root causes automatically, and transform failures into evaluation test cases. Unlike traditional monitoring focused on errors and latency, Maxim addresses agent-specific concerns like reasoning quality, tool selection logic, and multi-step workflow execution.

Ready to implement comprehensive production debugging? Book a demo with Maxim to see how real-time trace replay and automated root cause analysis accelerate issue resolution.