Top 20 LLM-Related Terms for 2025

AI agents are transforming the landscape of artificial intelligence, moving beyond simple request-response models to autonomous systems capable of complex reasoning, planning, and execution. As 2025 emerges as the breakout year for AI agents, understanding the terminology surrounding these systems has become essential for AI engineers and product managers.

This guide covers the 20 most important terms related to AI agents, providing clear definitions and context to help you navigate conversations about agentic AI systems with confidence.

1. AI Agent

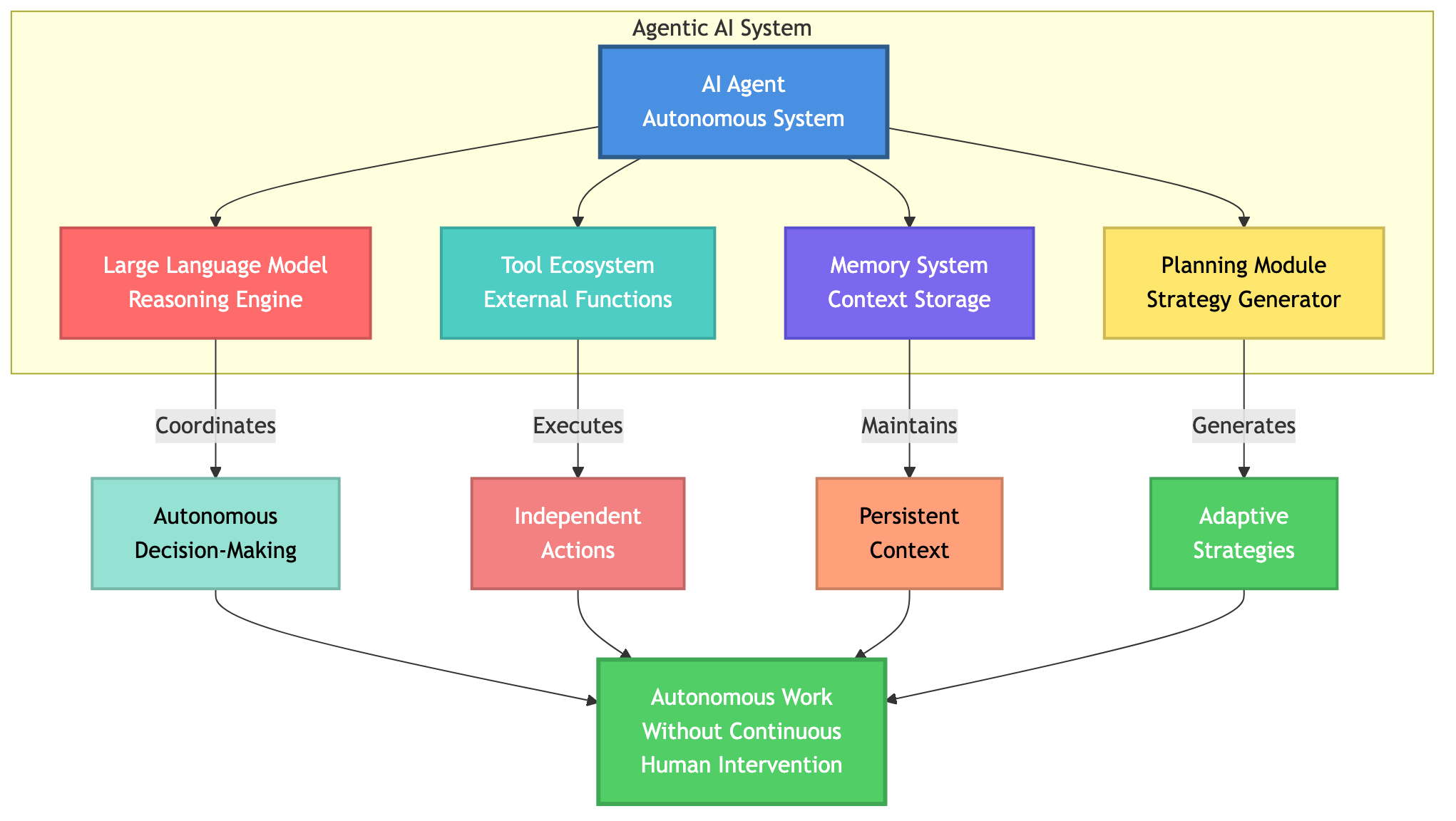

An AI agent is an autonomous system designed to perceive its environment, make decisions, and execute tasks toward specific goals. Unlike traditional AI systems that follow predefined rules, AI agents use large language models (LLMs) to coordinate decision-making, select appropriate tools, and manage access to necessary data.

AI agents run tools in a loop to achieve goals, distinguishing them from simple chatbots or automation scripts. They can adapt their approach based on new information, handle exceptions, and maintain context throughout multi-step processes.
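The "tools in a loop" idea can be sketched in a few lines. This is a minimal illustration rather than any framework's API: `TOOLS`, `fake_llm`, and `run_agent` are hypothetical names, and the scripted `fake_llm` stands in for a real model call.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split("+"))),
}

def fake_llm(goal: str, history: list[str]) -> dict:
    """Stand-in for a model call: choose the next step from what has happened so far."""
    if not history:
        return {"type": "tool", "name": "add", "args": goal}
    return {"type": "final", "answer": f"The result is {history[-1]}"}

def run_agent(goal: str, max_steps: int = 5) -> str:
    """Ask the model for a step, run the chosen tool, feed the result back, repeat."""
    history: list[str] = []
    for _ in range(max_steps):
        step = fake_llm(goal, history)
        if step["type"] == "final":
            return step["answer"]
        result = TOOLS[step["name"]](step["args"])
        history.append(result)  # the tool result becomes context for the next decision
    return "Stopped: step limit reached"
```

Calling `run_agent("2+3")` returns `"The result is 5"`. The step limit is a common safeguard against an agent that never decides it is done.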

2. Agentic AI

Agentic AI refers to a type of AI that uses AI agents to accomplish work autonomously. According to Salesforce, agentic AI extends beyond traditional automation by enabling systems to make independent decisions about task execution, resource allocation, and problem-solving strategies.

The key distinction is autonomy: agentic AI systems can operate without continuous human intervention, making them suitable for complex workflows that require adaptive decision-making.

3. Large Language Model (LLM)

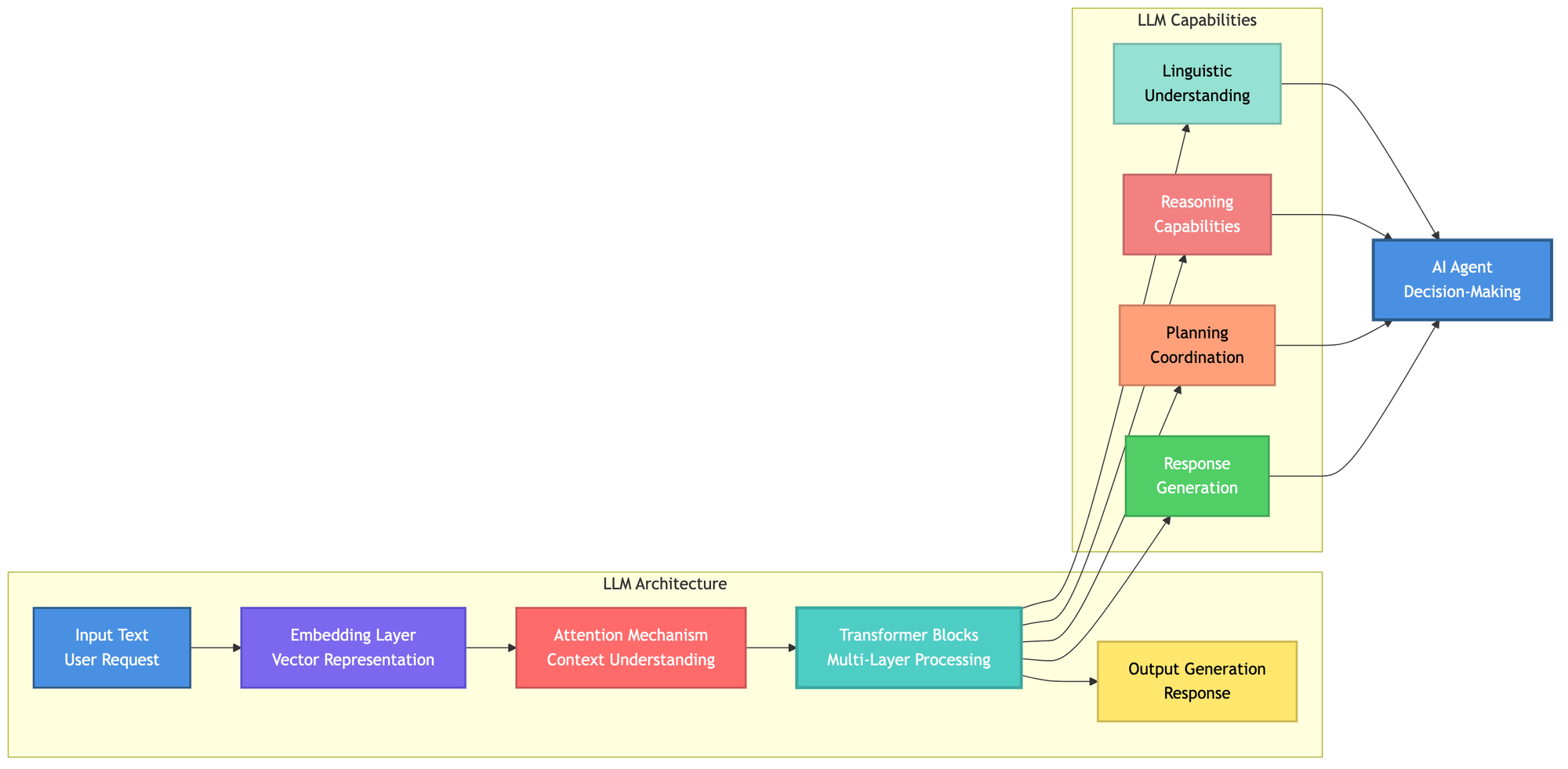

A Large Language Model serves as the "brain" of an AI agent. LLMs are neural networks, typically with billions of parameters, trained on vast amounts of text data, which enables them to understand nuanced requests and generate coherent responses.

In the context of AI agents, LLMs provide the reasoning capabilities and linguistic understanding necessary for interpreting user intent, planning actions, and generating appropriate responses. They coordinate the entire decision-making process within agentic systems.

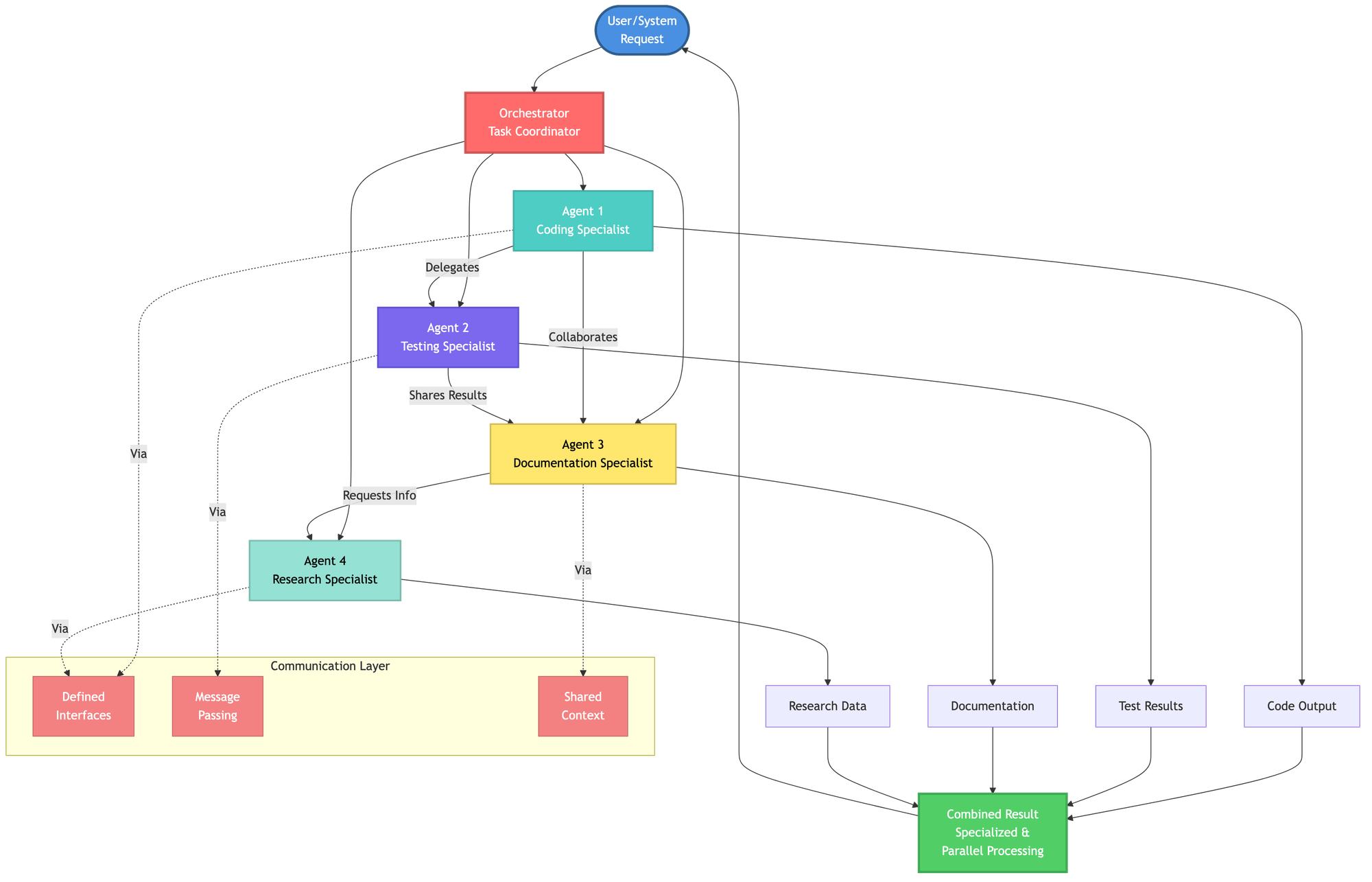

4. Multi-Agent System (MAS)

A Multi-Agent System consists of multiple AI agents working collectively to perform tasks on behalf of a user or system. According to IBM research, multi-agent systems often outperform single generalist agents by allowing specialization and parallel processing.

For example, in software development, different agents might handle coding, testing, and documentation, collaborating through defined interfaces. This approach mirrors human team collaboration and enables more sophisticated problem-solving.

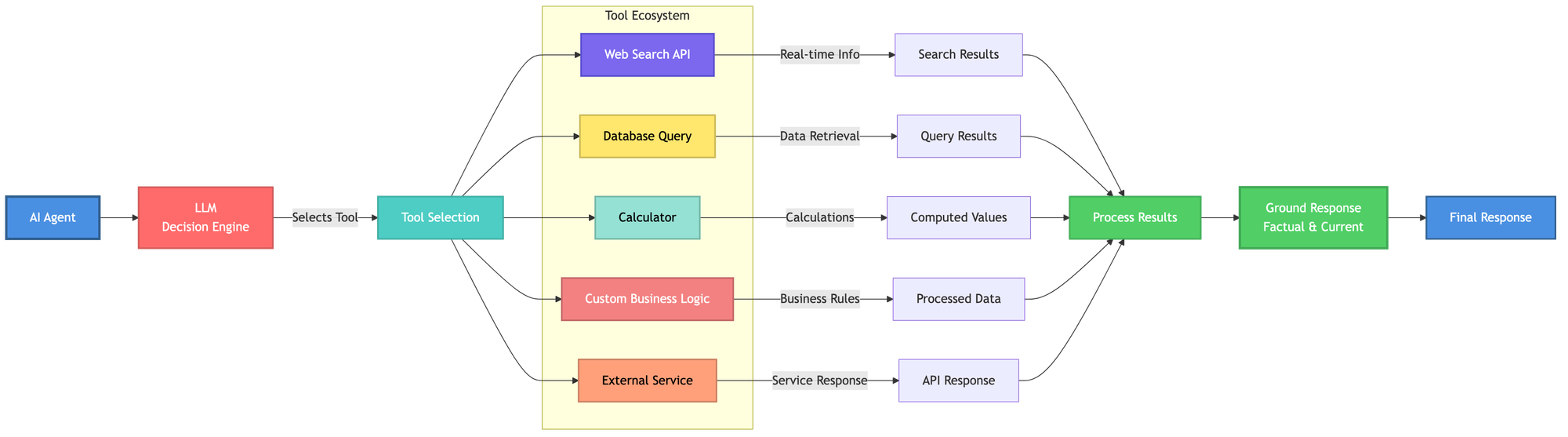

5. Tool Use (Tool Calling)

Tool use refers to an AI agent's ability to access and execute external functions, APIs, databases, or services. This capability extends an agent beyond its training data, allowing it to retrieve real-time information, perform calculations, or interact with external systems.

Tool calling enables agents to access everything from web search APIs to custom business logic, dramatically expanding their functional capabilities. This is essential for grounding agent responses in current, accurate information.
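As a rough sketch of the mechanics, the model emits a structured call (often JSON) and the runtime maps it to a real function. The registry layout, the `get_weather` tool, and `execute_tool_call` are hypothetical and do not follow any specific vendor's schema.

```python
import json

def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"

# Description the model would see, plus the callable the runtime executes.
TOOL_REGISTRY = {
    "get_weather": {
        "description": "Look up the current weather for a city.",
        "parameters": {"city": "string"},
        "fn": get_weather,
    }
}

def execute_tool_call(raw_call: str) -> str:
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(raw_call)
    tool = TOOL_REGISTRY.get(call["name"])
    if tool is None:
        # Return the error as text so the model can see it and recover.
        return f"Error: unknown tool {call['name']!r}"
    return tool["fn"](**call["arguments"])
```

For example, `execute_tool_call('{"name": "get_weather", "arguments": {"city": "Paris"}}')` returns `"Sunny in Paris"`.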

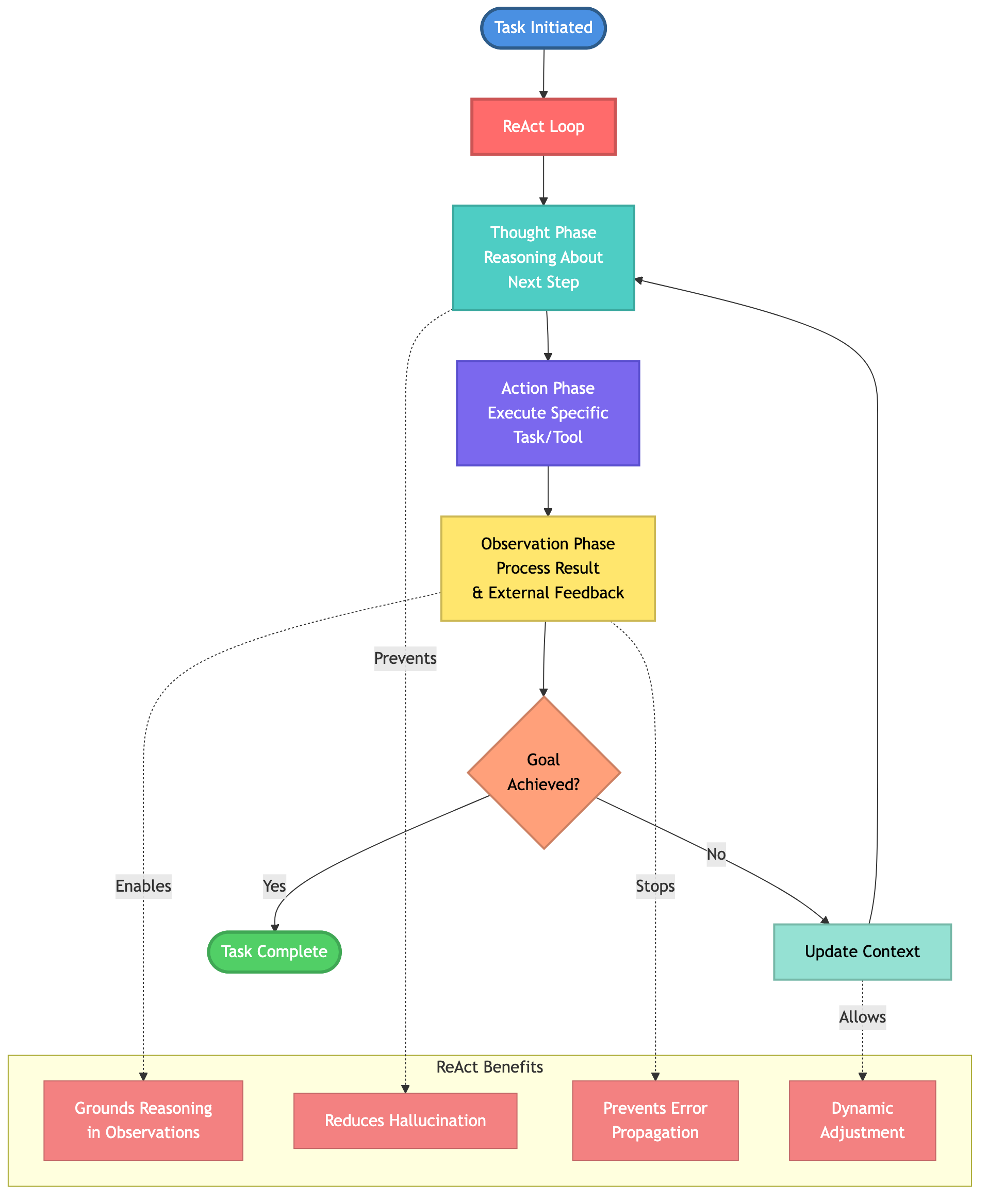

6. ReAct (Reasoning + Action)

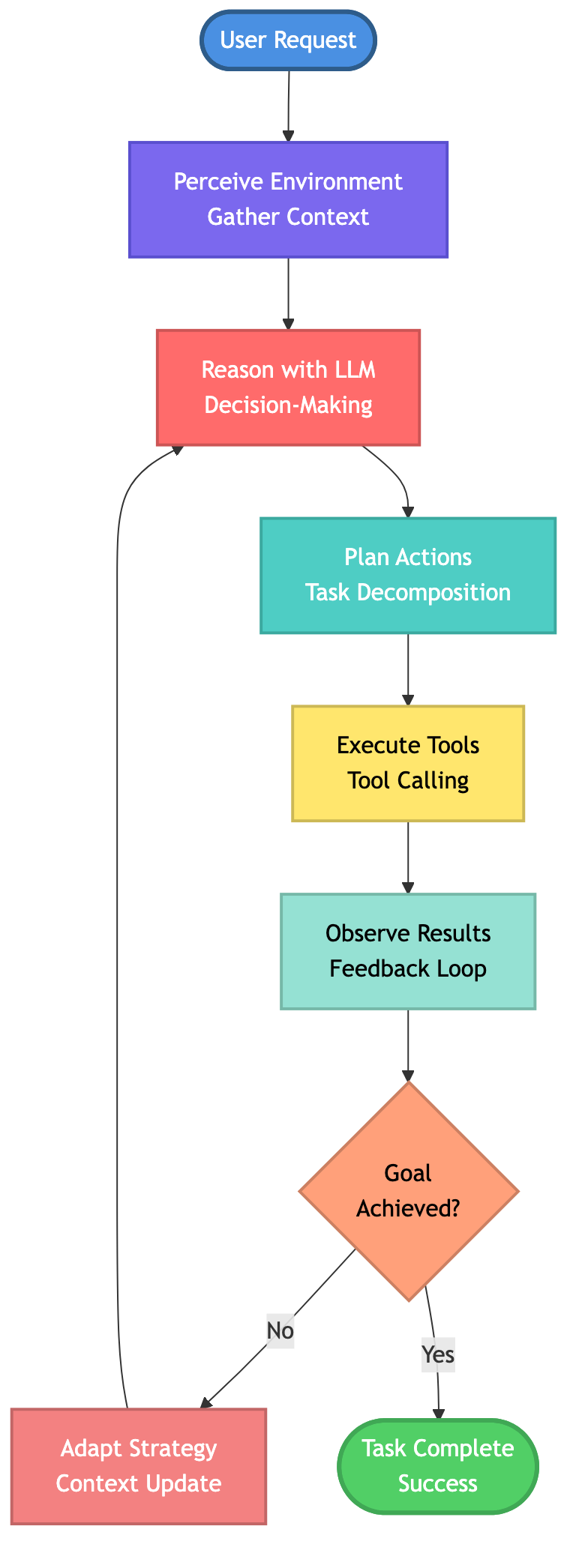

ReAct is a framework introduced by Yao et al. (posted to arXiv in 2022 and published at ICLR 2023) that synergizes reasoning and acting in language models. The approach enables AI agents to generate both verbal reasoning traces and task-specific actions in an interleaved manner.

According to IBM's definition, ReAct agents alternate between three phases: Thought (reasoning about the next step), Action (executing a specific task), and Observation (processing the result). This cycle continues until the goal is achieved, allowing agents to dynamically adjust their approach based on feedback.

ReAct addresses critical issues like hallucination and error propagation by grounding reasoning in external observations rather than relying solely on internal model representations.
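The Thought, Action, Observation cycle can be illustrated with a toy loop. This is a sketch under heavy assumptions: `SCRIPT` holds canned model outputs in place of a real LLM, and the `lookup[...]` / `finish[...]` action syntax and the `FACTS` table are made up for the example.

```python
import re

# Canned model outputs standing in for a real LLM (assumption for illustration).
SCRIPT = [
    "Thought: I need to find the capital of France.\nAction: lookup[France]",
    "Thought: The observation answers the question.\nAction: finish[Paris]",
]

# Toy knowledge source the agent can act on.
FACTS = {"France": "Paris is the capital of France"}

def lookup(term: str) -> str:
    return FACTS.get(term, "No result")

def react(max_steps: int = 5) -> tuple[str, list[str]]:
    """Alternate model output (Thought + Action) with tool results (Observation)."""
    trace: list[str] = []
    for step in range(min(max_steps, len(SCRIPT))):
        output = SCRIPT[step]  # a real agent would send the trace so far to the model
        trace.append(output)
        match = re.search(r"Action: (\w+)\[(.*)\]", output)
        if match is None:
            break
        action, arg = match.groups()
        if action == "finish":
            return arg, trace
        trace.append(f"Observation: {lookup(arg)}")  # ground the next thought in the result
    return "no answer", trace
```

Running `react()` returns the answer `"Paris"` together with a three-entry trace: the first thought and action, the observation, and the final thought and action.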

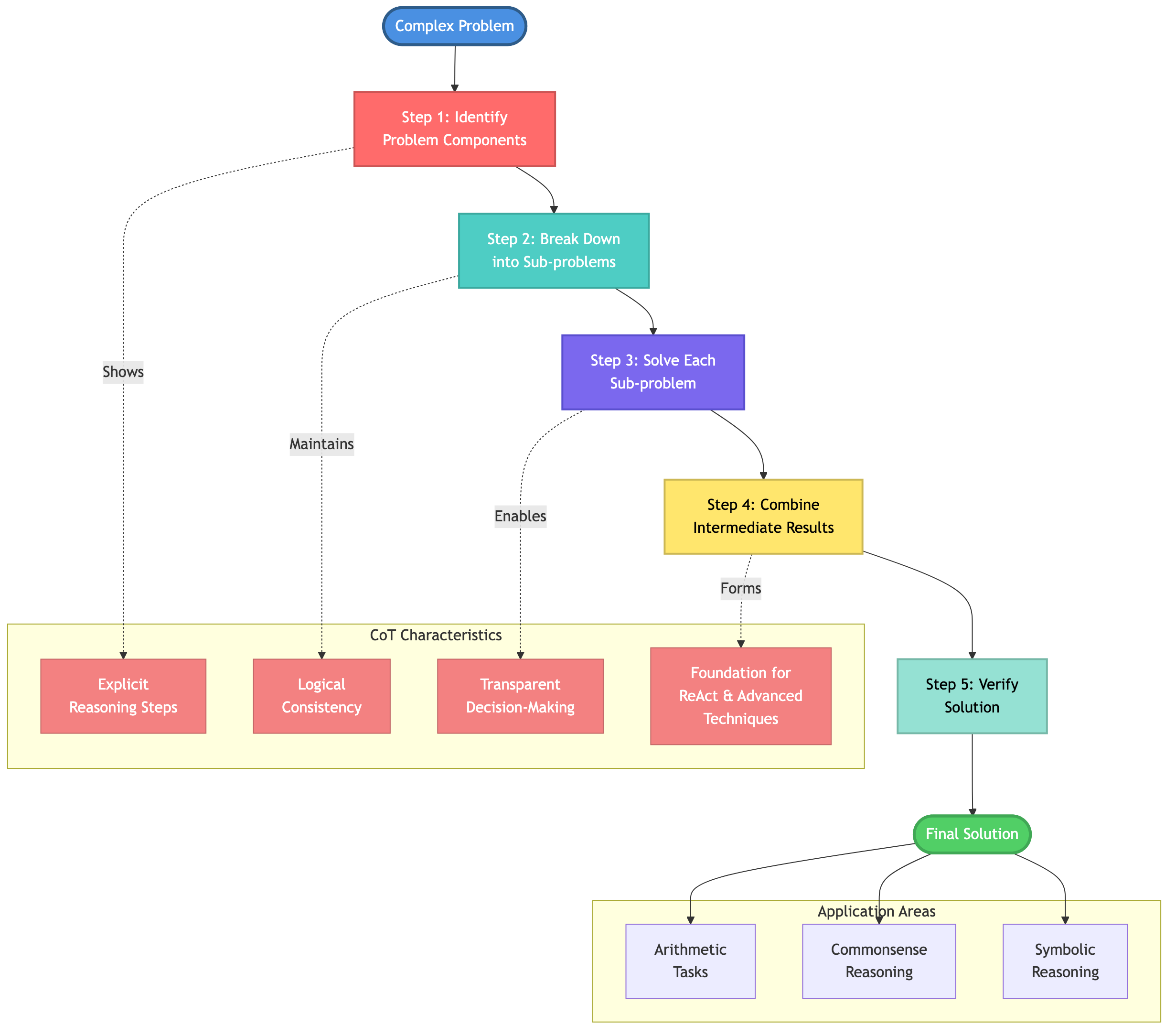

7. Chain of Thought (CoT)

Chain of Thought prompting is a technique that guides LLMs to break down complex problems into intermediate reasoning steps. This approach improves performance on arithmetic, commonsense, and symbolic reasoning tasks by making the model's thinking process explicit.

While CoT focuses purely on reasoning without external actions, it forms the foundation for more advanced techniques like ReAct. In agent systems, CoT helps maintain logical consistency and enables transparent decision-making processes.
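In practice, CoT is often just a matter of prompt construction. The sketch below combines one worked example with a "think step by step" cue; the `EXAMPLE` text and the `build_cot_prompt` helper are illustrative assumptions, not a prescribed format.

```python
# One worked example whose answer spells out its intermediate steps.
EXAMPLE = (
    "Q: A pack has 3 pens. How many pens are in 4 packs?\n"
    "A: Each pack has 3 pens. 4 packs have 4 * 3 = 12 pens. The answer is 12.\n"
)

def build_cot_prompt(question: str) -> str:
    """Prepend the worked example and cue the model to reason before answering."""
    return f"{EXAMPLE}\nQ: {question}\nA: Let's think step by step."
```

The resulting string would be sent to the model in place of the bare question.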

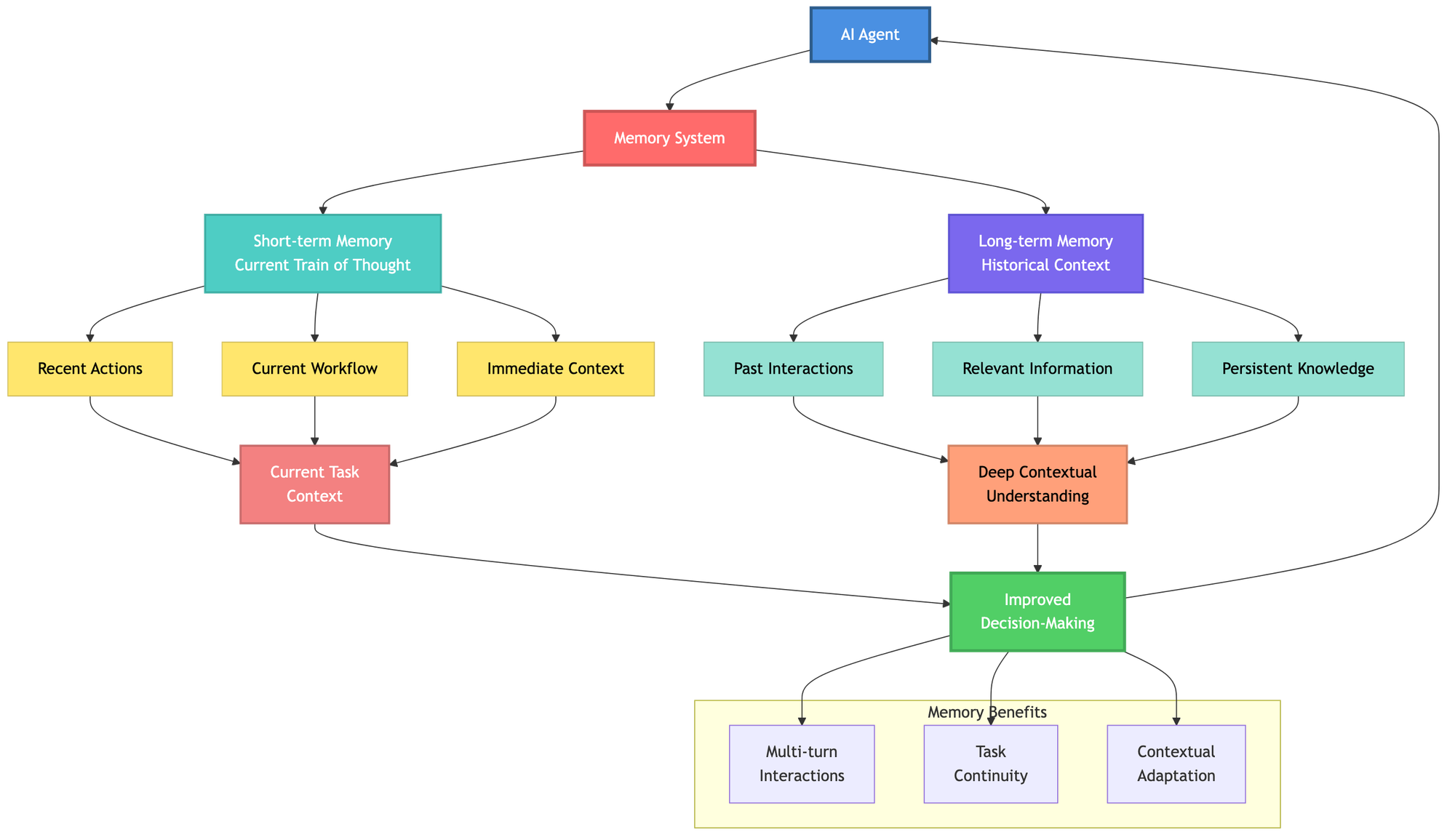

8. Memory (Short-term and Long-term)

AI agents rely on memory systems to maintain context and adapt to ongoing tasks. According to NVIDIA, agent memory operates at two levels:

Short-term memory tracks the agent's current "train of thought" and recent actions, ensuring context preservation throughout the current workflow.

Long-term memory retains historical interactions and relevant information, enabling deeper contextual understanding and improved decision-making over extended periods.

Effective memory management is crucial for agents handling complex, multi-turn interactions or returning to previously started tasks.
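One simple way to picture the two levels is a bounded buffer for short-term memory and an append-only store for long-term memory. The `AgentMemory` class is a hypothetical sketch; production systems usually back long-term memory with a database or vector store rather than a keyword scan.

```python
from collections import deque

class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent events, like a sliding context window.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: everything seen so far, searchable later.
        self.long_term: list[str] = []

    def remember(self, event: str) -> None:
        self.short_term.append(event)  # oldest entry is dropped once the buffer is full
        self.long_term.append(event)

    def recall(self, keyword: str) -> list[str]:
        """Naive long-term lookup by case-insensitive keyword match."""
        return [e for e in self.long_term if keyword.lower() in e.lower()]
```

With `short_term_size=2`, the third remembered event pushes the first out of short-term memory, while `recall` can still find it in long-term memory.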

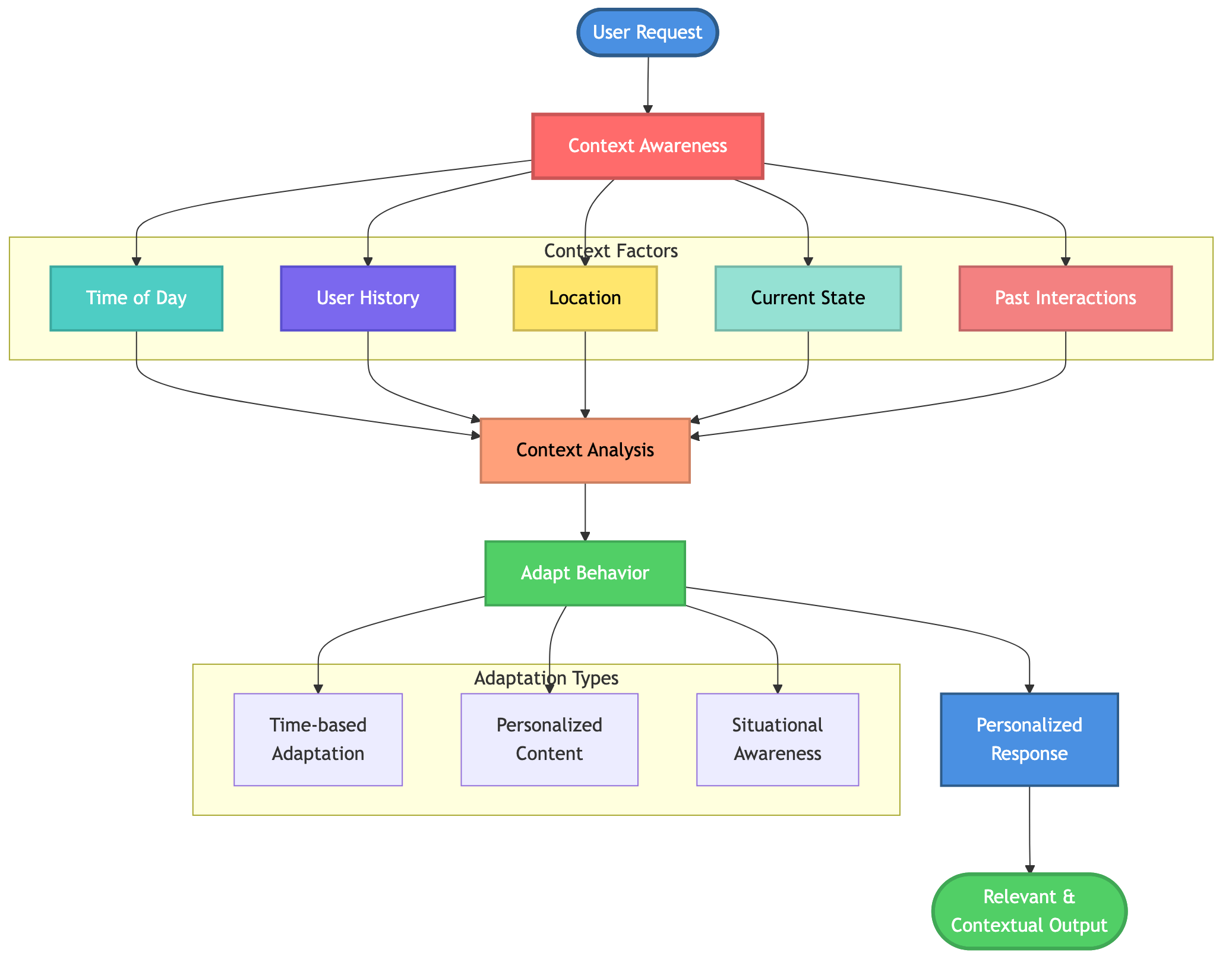

9. Context Awareness

Context awareness enables AI agents to use past interactions and real-time data to understand and respond to a user's unique environment and situation. Context-aware agents can consider factors like time of day, user history, location, and current state to deliver more relevant and personalized responses.

This capability differentiates intelligent agents from simple rule-based systems, allowing them to adapt their behavior based on situational factors rather than following rigid scripts.

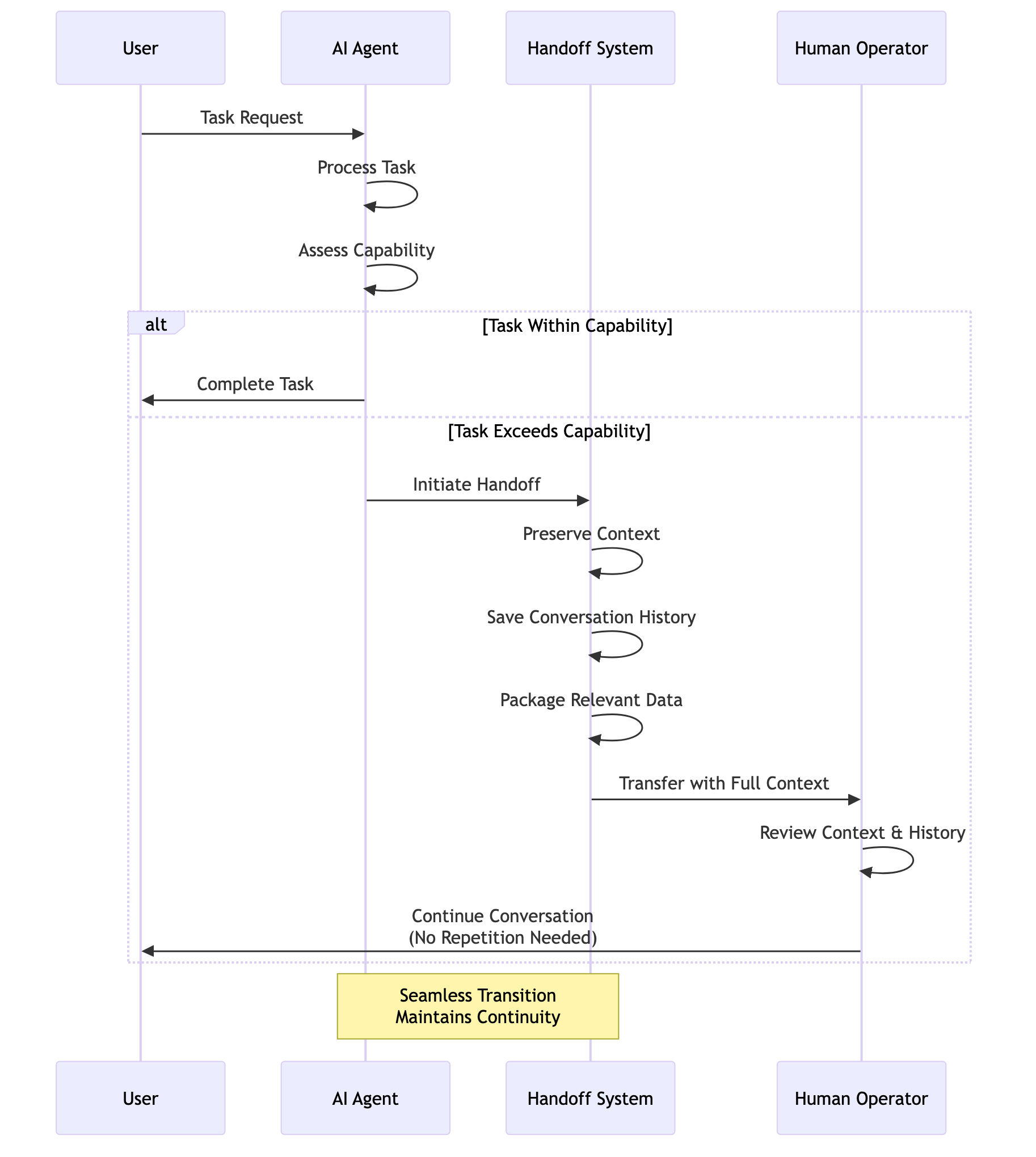

10. Agent-to-Human Handoff

Agent-to-human handoff describes the process of transferring control from an AI agent to a human operator when a task exceeds the agent's capabilities. Successful handoffs preserve context, conversation history, and all relevant data so customers don't need to repeat themselves.

This concept recognizes that even advanced agents have limitations and establishes protocols for seamless transitions when human judgment or intervention becomes necessary.

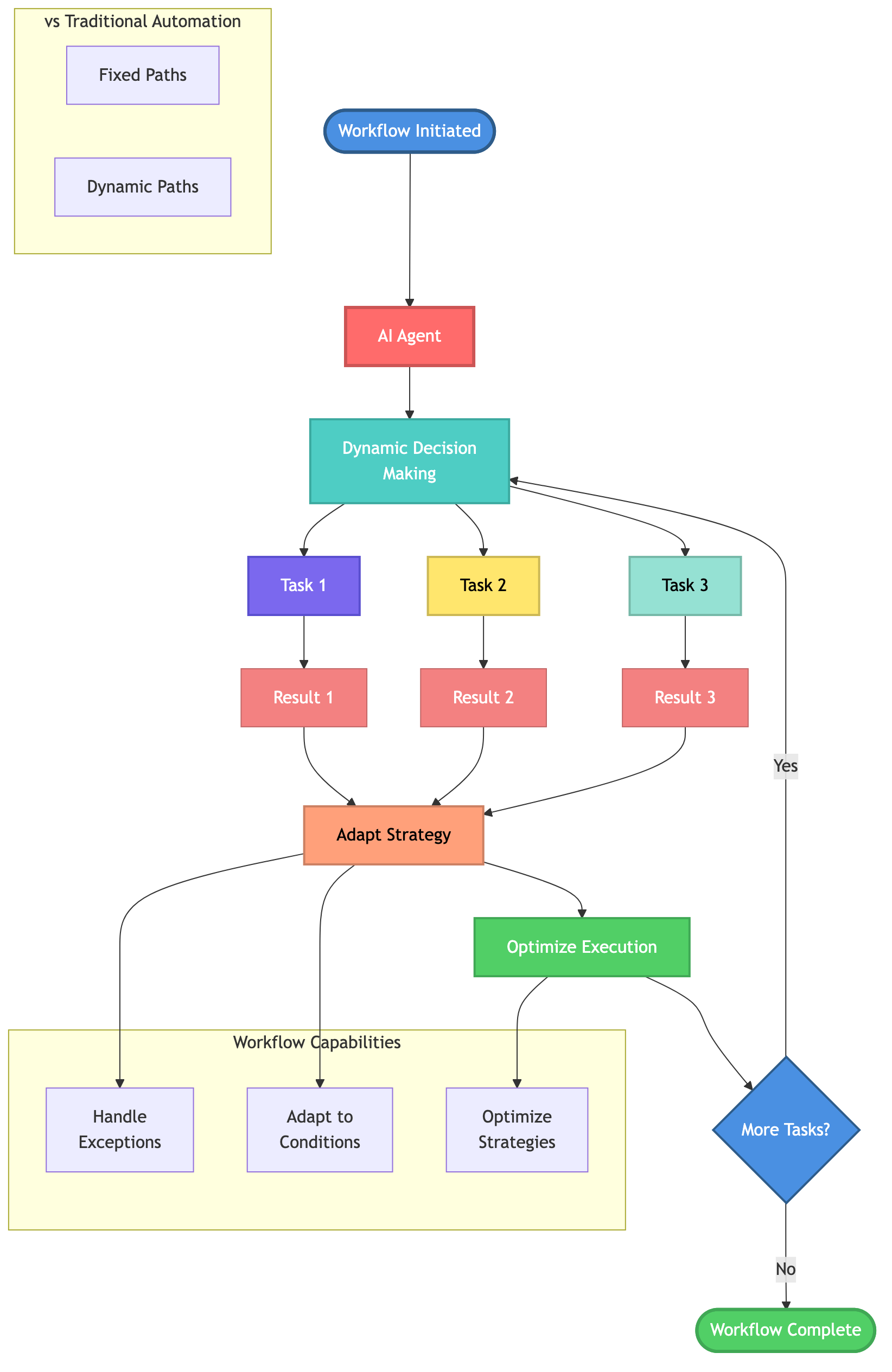

11. Agentic Workflow

An agentic workflow is an AI-driven process that uses one or more agents to accomplish work autonomously. Unlike traditional automation that follows fixed paths, agentic workflows allow agents to make dynamic decisions about task sequencing, resource allocation, and error handling.

These workflows can adapt to changing conditions, handle exceptions, and optimize execution strategies based on intermediate results. For teams building AI applications, Maxim's Agent Simulation Evaluation enables testing agentic workflows across hundreds of scenarios before deployment.

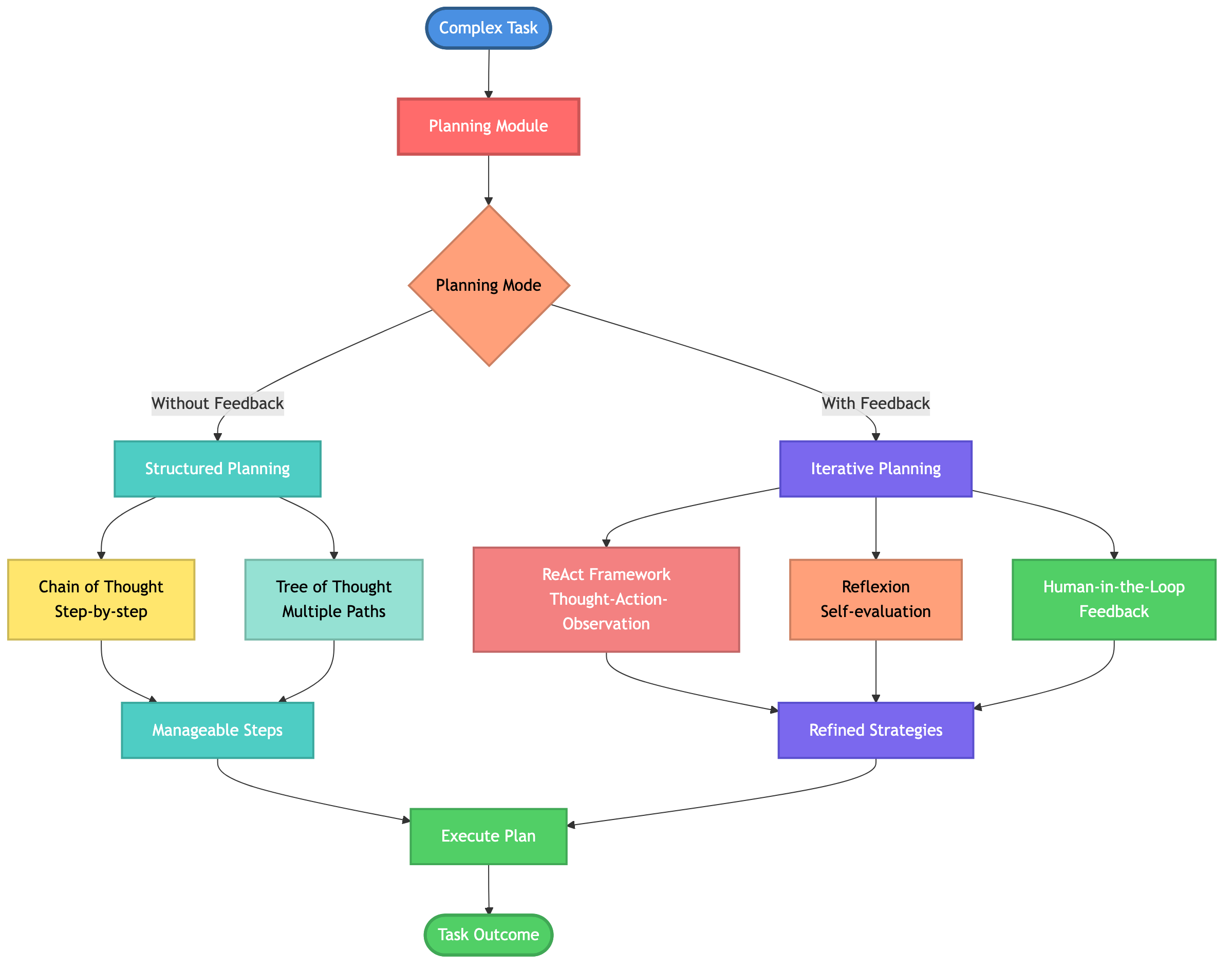

12. Planning Modules

Planning modules enable AI agents to decompose complex tasks into actionable steps. According to NVIDIA's research, these modules operate in two modes:

Without Feedback: Using structured techniques like Chain of Thought or Tree of Thought to break down tasks into manageable steps.

With Feedback: Incorporating iterative improvement methods like ReAct, Reflexion, or human-in-the-loop feedback for refined strategies and outcomes.

Planning is essential for agents tackling multi-step problems where the solution path isn't immediately obvious.

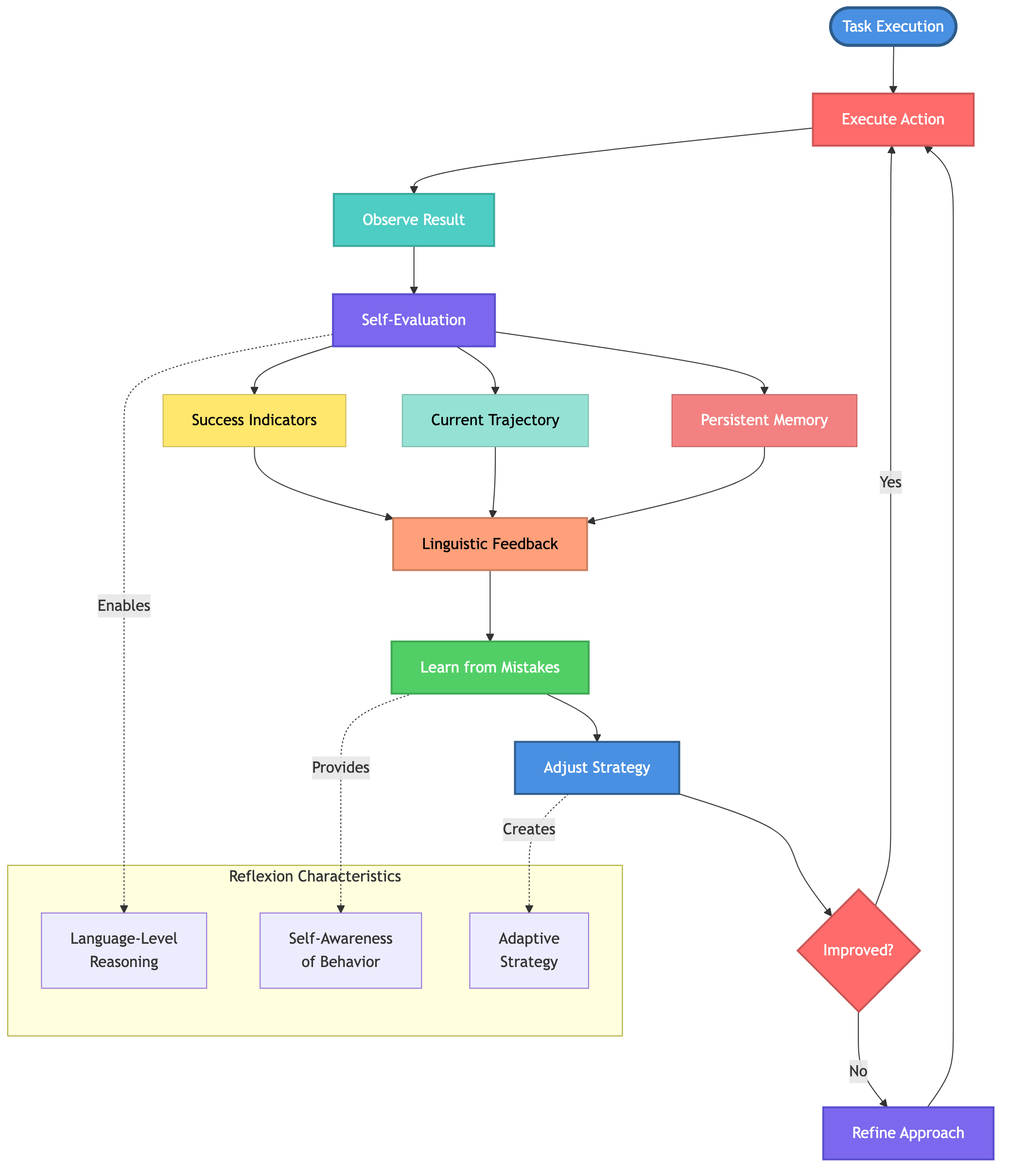

13. Reflexion

Reflexion is a technique that enables AI agents to learn from their mistakes through self-evaluation and linguistic feedback. After each attempt, the agent evaluates the outcome using signals such as success indicators and the trajectory so far, writes a verbal reflection on what went wrong, and stores it in persistent memory to guide later attempts.

Unlike traditional reinforcement learning, Reflexion operates at the language level, allowing agents to reason about their own behavior and adjust strategies accordingly.
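The attempt, evaluate, reflect, retry cycle might look like the following toy loop. All three components (`attempt`, `evaluate`, `reflect`) are stand-ins invented for this sketch; in a real system each would typically be an LLM call or a task-specific check.

```python
def attempt(task: str, reflections: list[str]) -> str:
    """Stand-in actor: a real agent would prompt an LLM with the task plus past reflections."""
    if any("uppercase" in r for r in reflections):
        return task.upper()
    return task

def evaluate(output: str) -> bool:
    """Stand-in evaluator: success here simply means the output is all uppercase."""
    return output.isupper()

def reflect(output: str) -> str:
    """Stand-in self-reflection: verbal feedback derived from the failed attempt."""
    return f"Attempt {output!r} failed; the answer must be uppercase."

def reflexion_loop(task: str, max_trials: int = 3) -> tuple[str, list[str]]:
    reflections: list[str] = []  # persistent memory carried across trials
    output = ""
    for _ in range(max_trials):
        output = attempt(task, reflections)
        if evaluate(output):
            break
        reflections.append(reflect(output))  # feedback is stored as language, not weight updates
    return output, reflections
```

For the task `"hello"`, the first trial fails, one reflection is stored, and the second trial returns `"HELLO"`.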

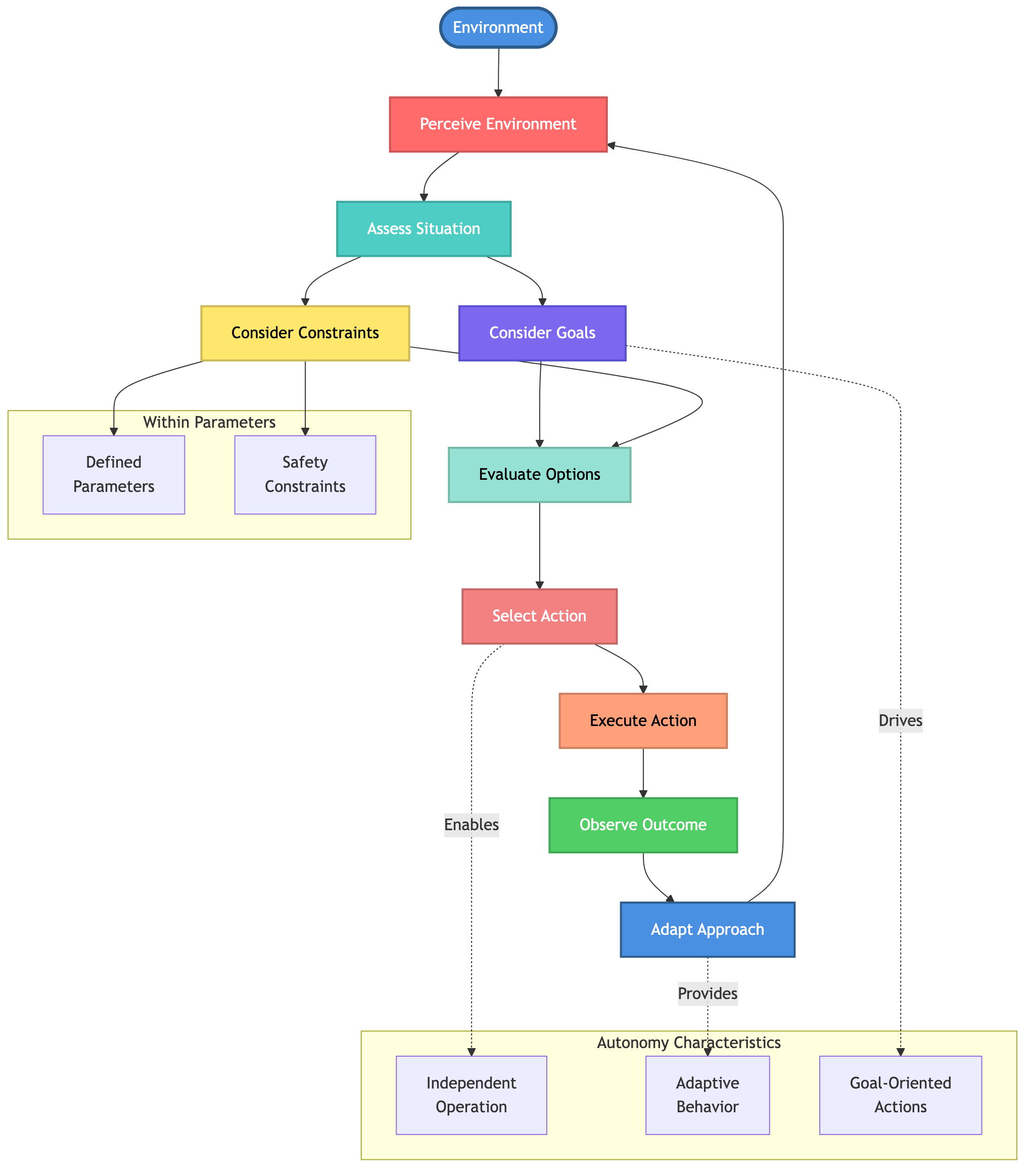

14. Autonomous Decision-Making

Autonomous decision-making refers to an AI agent's ability to independently evaluate options and select actions without continuous human supervision. This capability is central to what makes a system "agentic" rather than simply automated.

Autonomous agents can perceive their environment, assess situations based on goals and constraints, choose appropriate actions, and adapt their approach based on outcomes, all while operating independently within defined parameters.

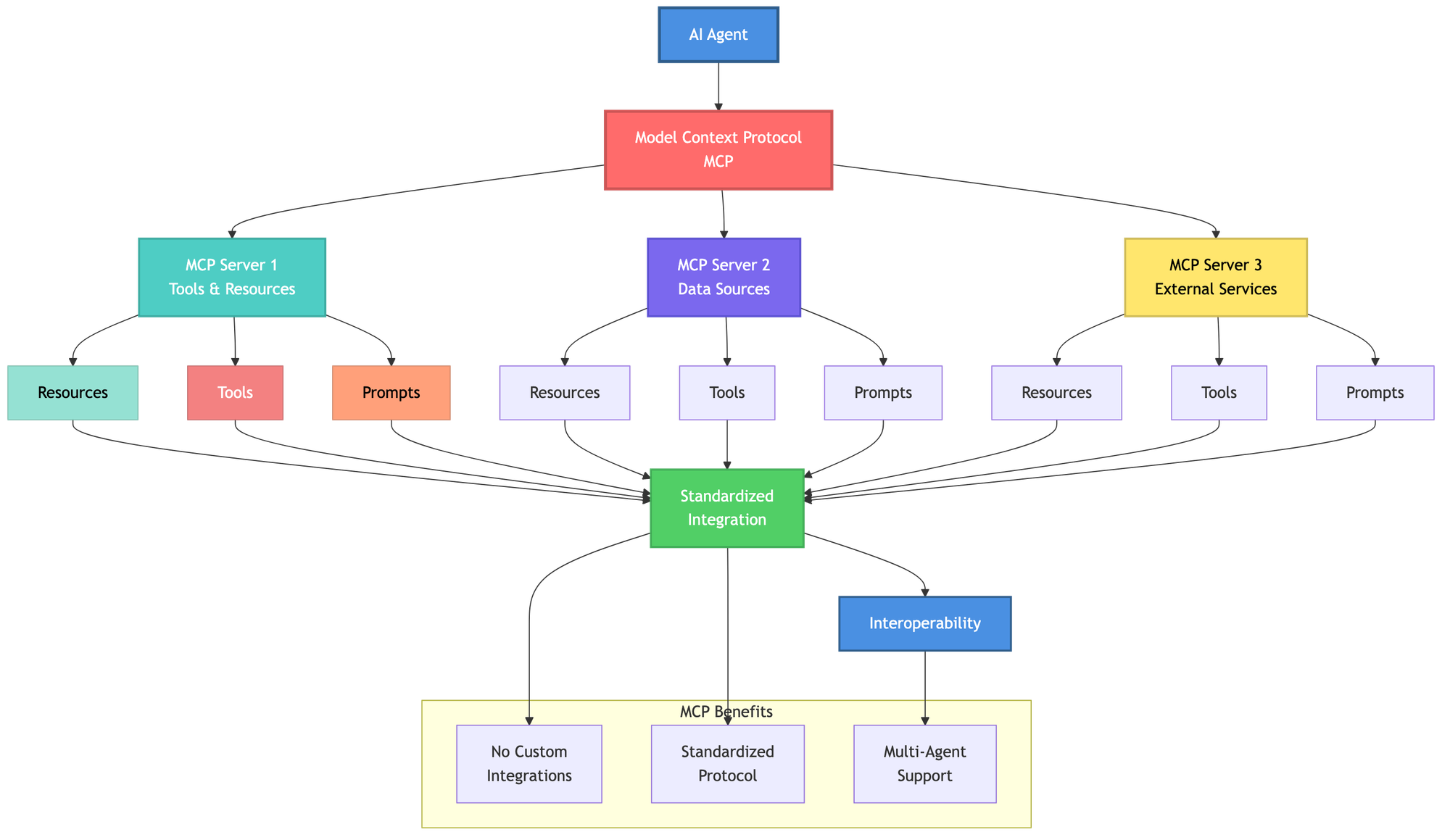

15. Model Context Protocol (MCP)

Model Context Protocol (MCP) is an open protocol that enables AI agents and language models to connect with external tools, data sources, and services in a standardized way. By exposing resources, prompts, and tools through MCP servers, agents can expand their capabilities without custom integrations.

MCP is increasingly used in multi-agent systems and development environments to simplify interoperability. Bifrost, Maxim's AI gateway, provides native support for MCP, enabling seamless tool integration across multiple providers.
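MCP messages are JSON-RPC 2.0, so a client's requests to discover and invoke tools can be sketched as plain JSON payloads. The helper names below are hypothetical, as is the `search_docs` tool. The transport and the initialization handshake are omitted, so this shows only the message shape, not a working client.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched to them

def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

def list_tools_request() -> str:
    """Ask an MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")

def call_tool_request(name: str, arguments: dict) -> str:
    """Invoke one of the server's tools by name with JSON arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})
```

Because every server speaks the same message format, an agent can discover and call tools on a new server without a bespoke integration.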

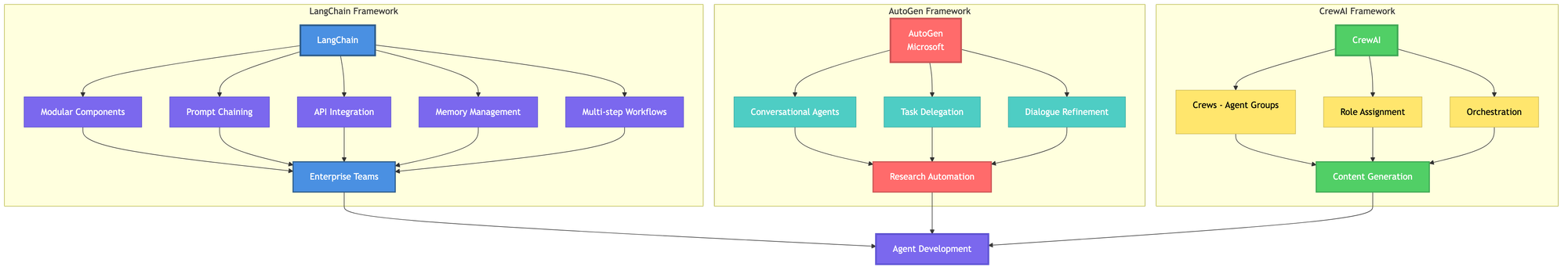

16. Agent Frameworks

Agent frameworks provide the building blocks for developing, deploying, and managing AI agents. Several frameworks have become widely used:

LangChain is a widely adopted framework providing modular components for chaining prompts, integrating APIs, managing memory, and designing multi-step workflows. Its ecosystem of community integrations makes it suitable for enterprise teams.

AutoGen, Microsoft's open-source framework, enables LLM-based agents to collaborate conversationally. Agents delegate tasks and refine results through dialogue, making it effective for research automation and collaborative coding.

CrewAI fosters multi-agent collaboration through "crews": groups of specialized agents coordinated toward shared goals. The framework emphasizes role assignment and orchestration, which makes it well suited to content generation and multi-step data analysis.

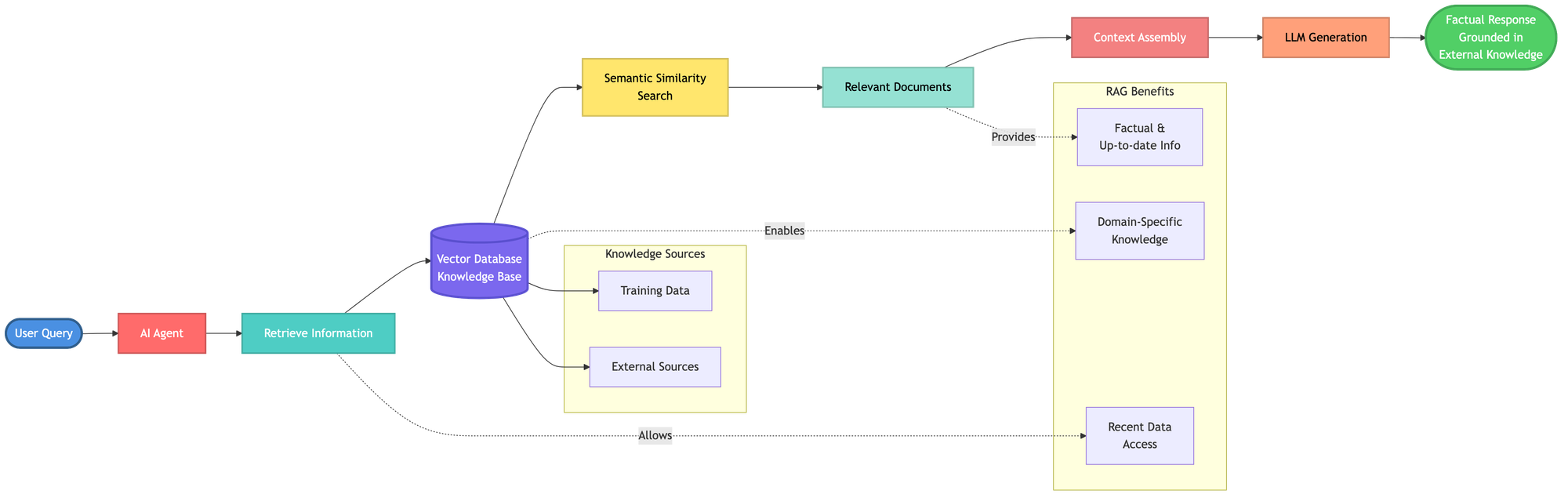

17. Retrieval Augmented Generation (RAG)

RAG is a technique that enhances AI agent responses by retrieving relevant information from external knowledge bases before generating answers. This approach grounds agent outputs in factual, up-to-date information rather than relying solely on training data.

RAG systems typically use vector databases to store and retrieve information based on semantic similarity, allowing agents to access domain-specific knowledge or recent data that wasn't part of their original training.
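The retrieve-then-generate flow can be shown with a toy retriever. This sketch assumes word-count vectors in place of learned embeddings and an in-memory list in place of a vector database; `DOCS`, `embed`, `retrieve`, and `build_prompt` are illustrative names.

```python
import math
from collections import Counter

# Tiny stand-in knowledge base.
DOCS = [
    "Refunds are processed within five business days.",
    "Shipping is free for orders over fifty dollars.",
    "Support is available by chat on weekdays.",
]

def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use learned dense vectors."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str) -> str:
    """Place the retrieved text in the prompt so the model answers from it."""
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

For the query "How long do refunds take?", the refund policy sentence ranks first because it is the only document that shares a word with the query.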

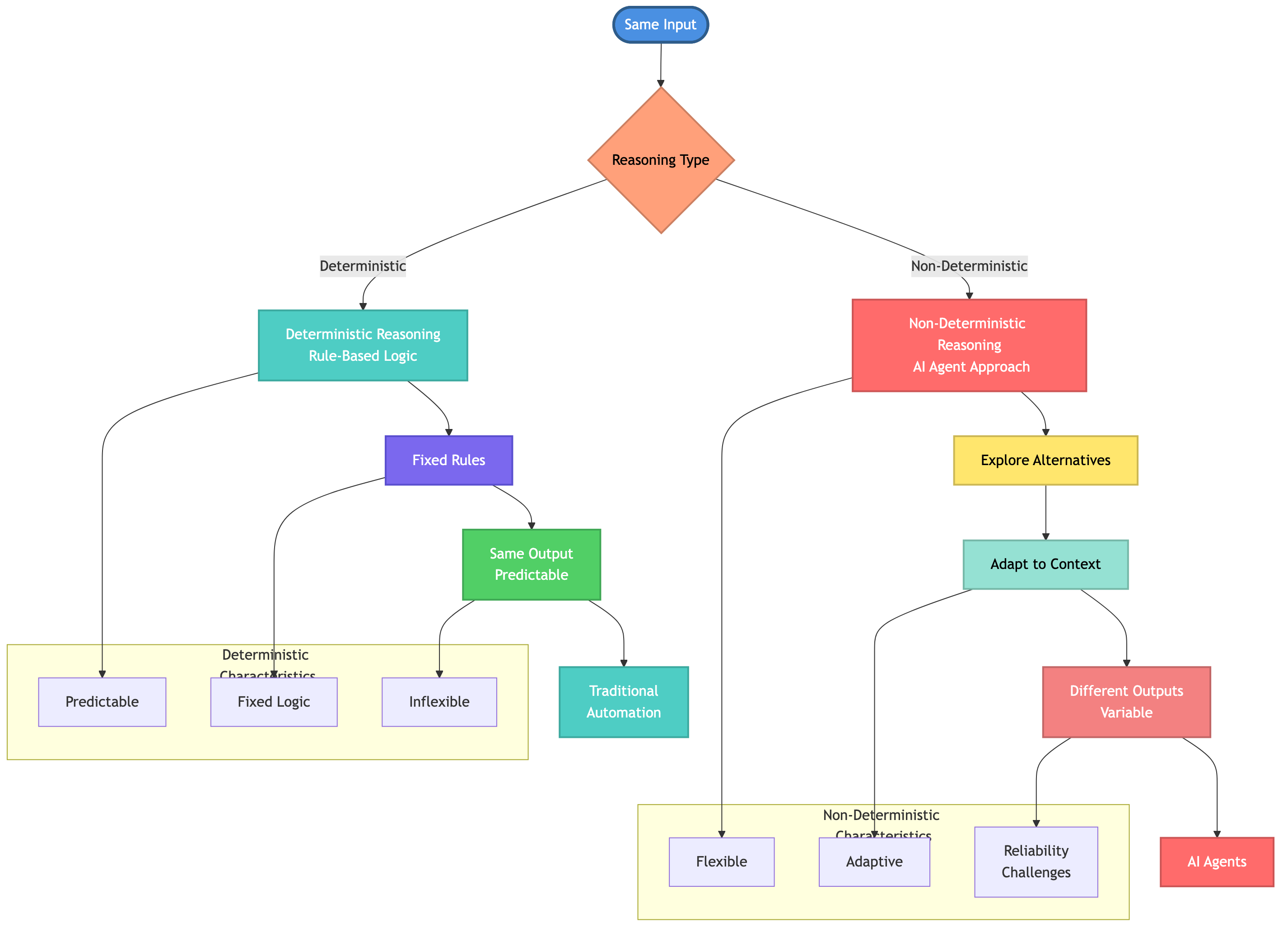

18. Deterministic vs Non-Deterministic Reasoning

According to Salesforce's research, deterministic reasoning uses rule-based logic to guarantee the same output for identical inputs. This approach is predictable but inflexible.

In contrast, AI agents employ non-deterministic reasoning, meaning they can produce different outputs or take different actions even when given the same prompts. This variability allows agents to explore alternative solutions and adapt to context, but it also introduces challenges for reliability and testing.

Understanding this distinction is crucial for AI engineers who need to balance agent flexibility with application requirements for consistency.
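One common source of this variability is sampling. In the sketch below, a temperature of 0 picks the highest-scoring action every time, while a positive temperature samples from a softmax over the scores. The action names, the scores, and `pick_action` are hypothetical.

```python
import math
import random

def pick_action(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Choose an action greedily (temperature 0) or by temperature-scaled sampling."""
    if temperature == 0:
        return max(scores, key=scores.get)  # deterministic: same input, same output
    weights = [math.exp(s / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]  # stochastic choice

SCORES = {"search": 2.0, "ask_user": 1.5, "answer": 1.0}
```

Passing a seeded `random.Random` makes a sampled run reproducible, which is one way to get stable tests without giving up sampling in production.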

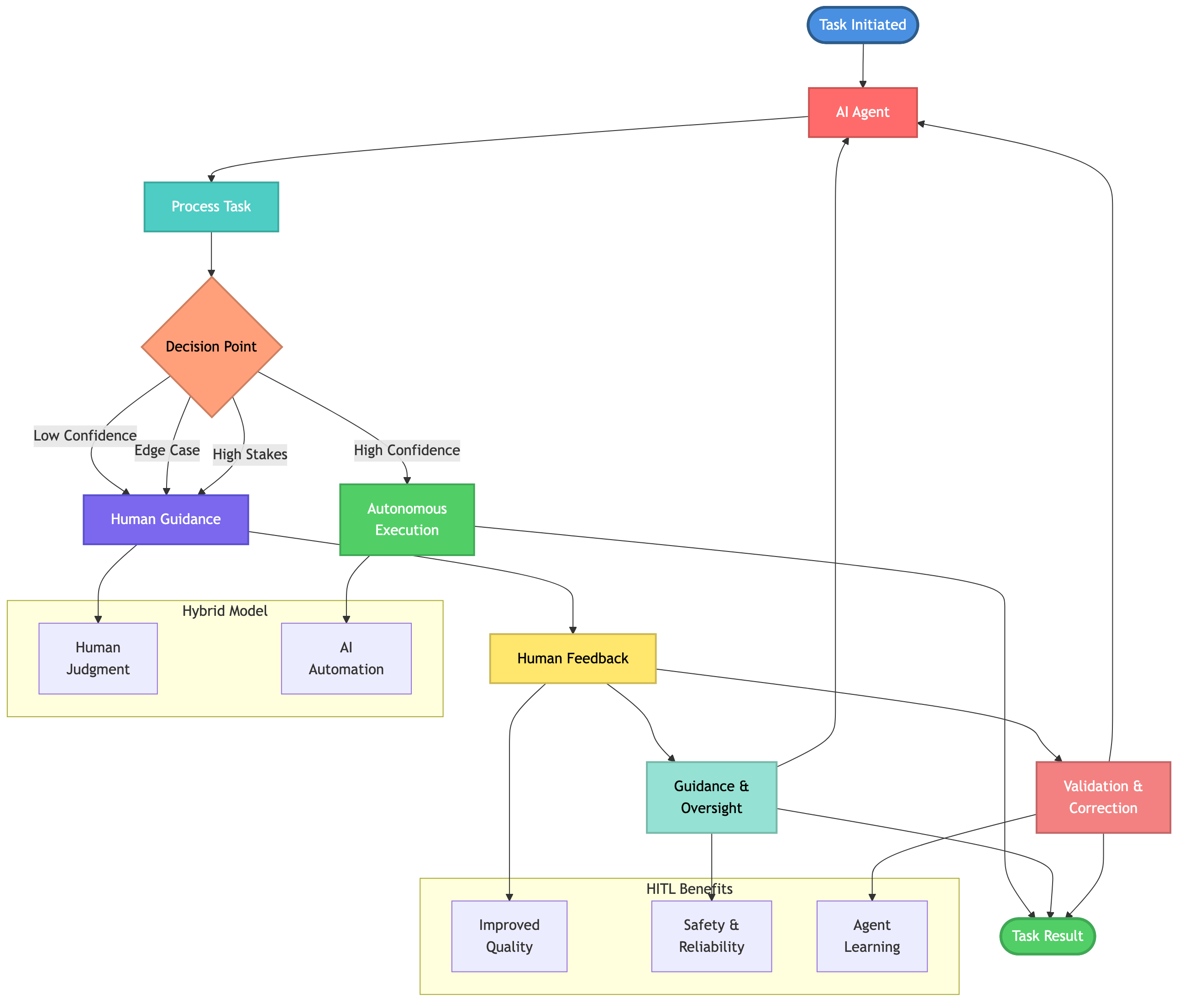

19. Human-in-the-Loop (HITL)

Human-in-the-loop refers to systems where humans provide guidance, oversight, or validation at critical decision points. Incorporating human feedback during task execution can boost agent effectiveness and real-world applicability.

HITL approaches are particularly valuable for high-stakes decisions, edge cases, or situations where agent confidence is low. This hybrid model leverages the strengths of both human judgment and AI automation.
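A simple approval gate captures the idea: routine, high-confidence actions run automatically, while high-stakes or low-confidence ones wait for a human decision. The action names, the 0.8 threshold, and the `approve` callback are assumptions made for this sketch.

```python
from typing import Callable

# Hypothetical set of actions that always require human sign-off.
HIGH_STAKES = {"issue_refund", "delete_account"}

def execute_with_oversight(
    action: str,
    confidence: float,
    approve: Callable[[str], bool],
    threshold: float = 0.8,
) -> str:
    """Run the action directly, or ask a human first when review is warranted."""
    needs_review = action in HIGH_STAKES or confidence < threshold
    if needs_review and not approve(action):
        return f"blocked: {action}"
    return f"executed: {action}"
```

Here `approve` could be anything from a console prompt to a ticket in a review queue; the gate only cares about the yes/no result.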

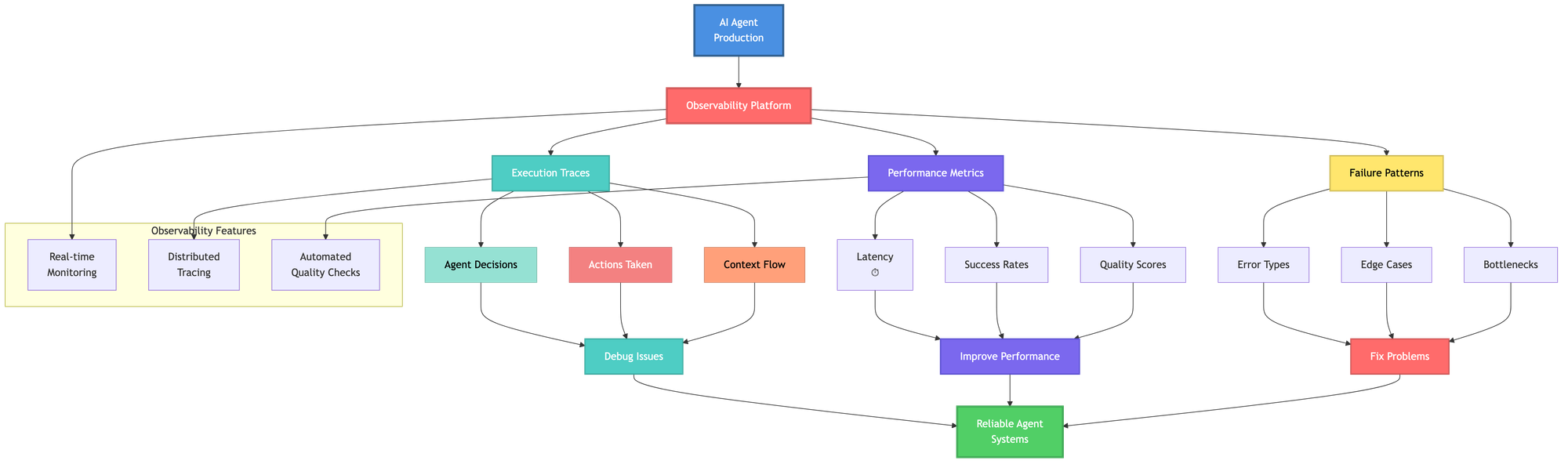

20. Agent Observability

Agent observability encompasses the tools and practices for monitoring, debugging, and understanding AI agent behavior in production environments. This includes tracking execution traces, measuring performance metrics, and identifying failure patterns.

Effective observability is essential for maintaining reliable agent systems at scale. Maxim's Agent Observability platform provides real-time monitoring, distributed tracing, and automated quality checks to ensure agents perform reliably in production.

With comprehensive observability, teams can track agent decisions, debug issues quickly, and continuously improve performance based on production data.
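At its simplest, an execution trace is a record of each step's name, outcome, and duration. The decorator below is a generic sketch of that idea and is not the API of Maxim or any other observability platform; `TRACE`, `traced`, and the two example tools are hypothetical.

```python
import functools
import time

TRACE: list[dict] = []  # in-memory trace; a real system would export spans to a backend

def traced(fn):
    """Record the name, status, and duration of every call to the wrapped step."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "ok"
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            status = f"error: {exc}"
            raise  # keep the failure visible to the caller
        finally:
            TRACE.append({
                "step": fn.__name__,
                "status": status,
                "duration_s": time.perf_counter() - start,
            })
    return wrapper

@traced
def search_tool(query: str) -> str:
    return f"results for {query}"

@traced
def failing_tool() -> str:
    raise ValueError("upstream timeout")
```

After one successful call and one failure, `TRACE` holds an entry for each, which is the raw material for debugging and for spotting recurring failure patterns.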

Building Reliable AI Agents

Understanding these 20 terms provides the foundation for effective communication about AI agents. However, building production-ready agentic systems requires more than terminology; it demands rigorous testing, evaluation, and monitoring throughout the development lifecycle.

Maxim AI offers an end-to-end platform for AI agents, covering simulation and evaluation before deployment and comprehensive observability in production. Teams use Maxim to test agents across hundreds of scenarios, measure quality quantitatively, and maintain reliability at scale.

Whether you're building customer service agents, autonomous research assistants, or complex multi-agent systems, Maxim can help you simulate, evaluate, and observe your AI applications more effectively.

Schedule a demo to see how Maxim can help you ship reliable AI agents more than 5x faster, or sign up to start building with confidence today.