Top 3 Observability Platforms for AI Agents in 2026

TL;DR

This guide compares Maxim AI, Arize AI, and LangSmith for AI agent observability in 2026, focusing on features and best-fit scenarios. Maxim AI offers end-to-end simulation, evals, and production-grade observability with multimodal support and cross-functional UX. Arize excels at model observability and ML monitoring for traditional MLOps. LangSmith is strong for LLM app tracing and developer-centric workflows.

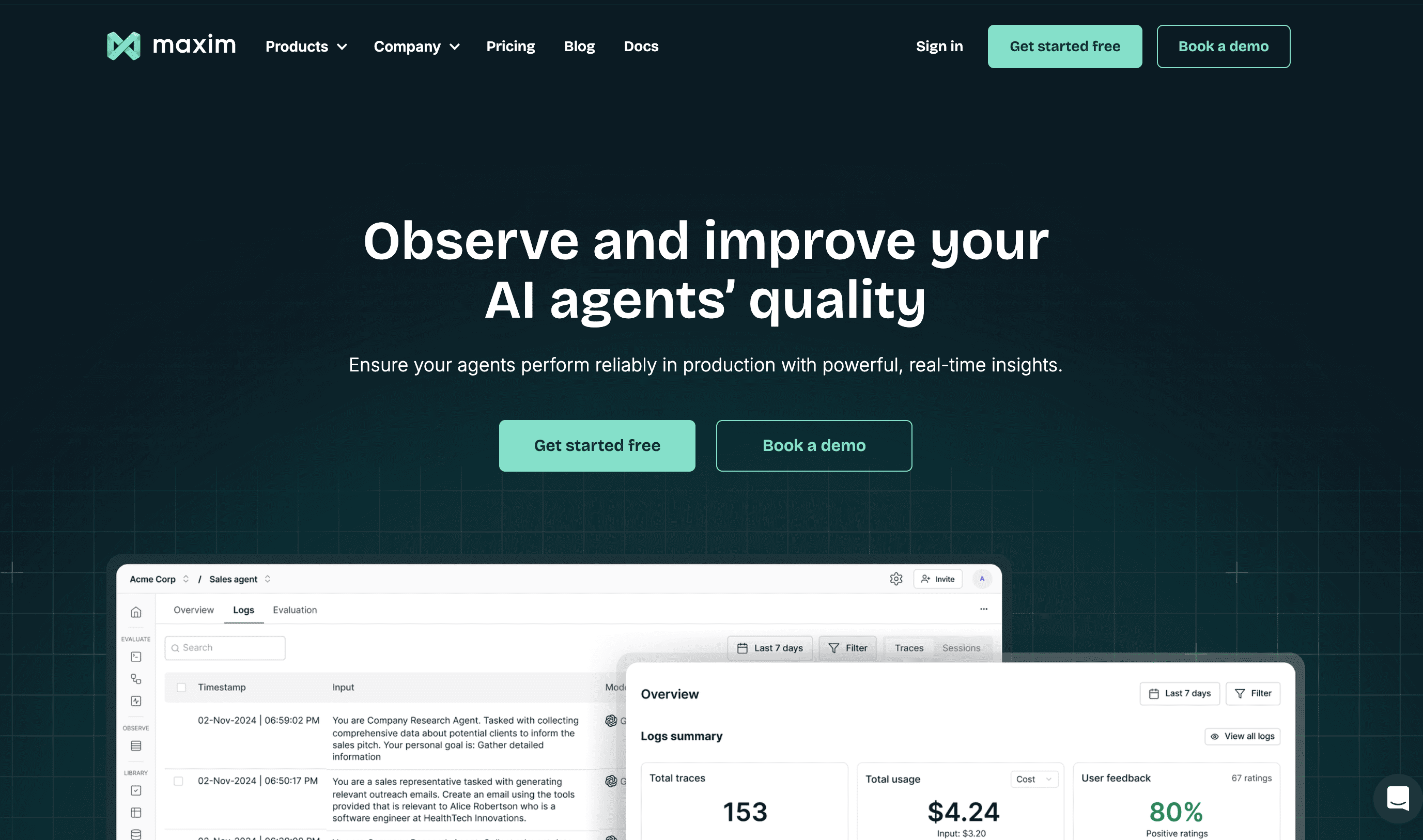

Maxim AI: Full-Stack Observability for Agentic Applications

Maxim AI is an end-to-end platform for simulation, evaluation, and observability, purpose-built for multimodal agents and cross-functional collaboration. It combines distributed tracing, AI evals, human-in-the-loop review, and custom dashboards to measure and improve agent quality across pre-release and production.
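As a rough picture of what combining tracing with evals can look like, the sketch below scores individual spans and then rolls the scores up to the whole trace. It is not Maxim's SDK: the `Span` and `Trace` shapes, the `non_empty` evaluator, and the `evaluate` helper are all hypothetical names chosen for illustration, and the roll-up by mean is just one possible aggregation.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class Span:
    """One step inside an agent run (hypothetical shape)."""
    name: str
    output: str
    scores: Dict[str, float] = field(default_factory=dict)


@dataclass
class Trace:
    """An ordered list of spans for one request (hypothetical shape)."""
    spans: List[Span]
    scores: Dict[str, float] = field(default_factory=dict)


def non_empty(span: Span) -> float:
    """Deterministic check: 1.0 if the span produced any text, else 0.0."""
    return 1.0 if span.output.strip() else 0.0


def evaluate(trace: Trace, span_evaluators: Dict[str, Callable[[Span], float]]) -> Trace:
    """Score every span, then roll each score up to the trace as a mean."""
    for span in trace.spans:
        for name, fn in span_evaluators.items():
            span.scores[name] = fn(span)
    for name in span_evaluators:
        trace.scores[name] = mean(s.scores[name] for s in trace.spans)
    return trace


trace = evaluate(
    Trace(spans=[Span("retrieve", "doc-17"), Span("answer", "")]),
    {"non_empty": non_empty},
)
print(trace.scores)
```

An LLM-as-a-judge or human review score could be plugged in as another callable with the same signature; the point is only that span-level and trace-level scores live side by side.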

Features

- Agent Observability: Real-time production logging, distributed tracing, automated quality checks, and alerts for live issues across applications. See the observability suite and capabilities on the Maxim product page: Agent Observability.

- Simulation & Evaluation: AI-powered simulations across scenarios and personas, trajectory-level analysis, and re-runs from any step to reproduce issues. Explore the product: Agent Simulation & Evaluation.

- Unified Evaluations: Off-the-shelf and custom evaluators (deterministic, statistical, LLM-as-a-judge), configurable at session, trace, or span level, with both human and LLM-in-the-loop review. Details on evaluation workflows: Agent Simulation & Evaluation.

- Experimentation & Prompt Management: Advanced prompt engineering with versioning, deployment variables, and side-by-side quality, cost, and latency comparisons. See Experimentation (Playground++).

- Data Engine: Curate and enrich multimodal datasets, build splits for evals and experiments, and evolve data from production logs.

- LLM Gateway (Bifrost): High-performance AI gateway supporting 12+ providers through a unified OpenAI-compatible API, with automatic failover, load balancing, semantic caching, observability, and governance. Learn more: Bifrost Features, Fallbacks & Load Balancing, Semantic Caching, Observability.
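To make the idea of automatic failover in the gateway bullet more concrete, here is a minimal sketch of an ordered fallback chain. It does not use or describe Bifrost's actual API: `complete_with_failover`, `AllProvidersFailed`, and the two stand-in provider functions are hypothetical, and a real gateway would add retries, timeouts, and health tracking.

```python
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has errored."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each provider in order; return (provider_name, completion)."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real gateway would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def flaky_primary(prompt: str) -> str:
    """Stand-in for a provider that is currently unavailable."""
    raise TimeoutError("upstream timed out")


def healthy_backup(prompt: str) -> str:
    """Stand-in for a provider that responds normally."""
    return f"echo: {prompt}"


used, text = complete_with_failover(
    "hello", [("primary", flaky_primary), ("backup", healthy_backup)]
)
print(used, text)
```

Returning the provider name alongside the completion is a deliberate choice here: it lets the caller log which provider actually served the request, which is the kind of signal an observability layer would want.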

Best for

- Agent teams needing LLM observability, agent tracing, and agent evaluation across pre-release and production.

- Product and engineering teams collaborating through custom dashboards, flexible evals, and human review at scale.

- Multimodal agents with complex workflows, where AI monitoring, model tracing, prompt versioning, and AI evals must work together.

- Enterprises requiring governance, SSO, Vault, budget controls, and high SLA support via Bifrost. See gateway governance: Governance & Access Control.

Arize AI: Model Observability for ML Performance Monitoring

Arize AI is widely recognized for model observability and ML performance monitoring, and is particularly suited to teams focused on traditional MLOps and model quality in production. When your priority is training-related model monitoring and drift detection rather than agent simulation or conversational tracing, Arize is a strong fit.

Features

- Model Monitoring: Production-grade model observability, drift detection, performance metrics, and dataset analysis to maintain ML model reliability over time.

- Evaluation Signals: Tools to analyze and segment performance by features, cohorts, or slices to diagnose regressions and data quality issues.

- Operational Insights: Dashboards for model evaluation, alerts for anomalies, and workflows tailored to data science and ML engineering teams.
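Drift detection, mentioned in the feature list above, usually comes down to comparing a live feature distribution against a baseline. The sketch below uses the Population Stability Index (PSI), one common drift statistic, purely as an illustration. It is not a description of how Arize computes drift; the `psi` function, its equal-width binning, and the `1e-6` floor for empty bins are assumptions made for this example.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # roughly uniform on [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]   # squeezed into [0.5, 1)

print(psi(baseline, baseline))
print(psi(baseline, shifted))
```

An identical sample yields a PSI of zero, while the shifted sample yields a clearly positive value; a monitoring system would typically alert when such a statistic crosses a threshold chosen by the team.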

Best for

- Teams whose primary need is training-related model monitoring and production model observability.

- ML engineers and data scientists who want robust monitoring for predictive models rather than conversational agents.

- Organizations with established MLOps stacks focusing on model evals, model monitoring, and AI reliability in production pipelines.

LangSmith: Tracing and Debugging for LLM Applications

LangSmith focuses on LLM app tracing, prompt workflows, and developer-centric debugging. It offers powerful tools for capturing spans, inputs/outputs, and evaluator results for LLM tracing and agent debugging, which makes it well suited to teams that build with LLM frameworks and need granular visibility into chain and tool execution.

Features

- LLM Tracing: Detailed capture of spans, prompts, tool calls, and outputs to debug complex LLM workflows and chains.

- Eval Integration: Support for evaluator runs and quality checks within development loops for LLM evaluation and chatbot evals.

- Developer Experience: Tight integration with popular LLM SDKs, strong prompt engineering workflows, and instrumentation for debugging LLM applications.
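For readers unfamiliar with span capture, the following is a minimal in-memory sketch of nested spans that record inputs, outputs, parent links, and duration. It deliberately avoids the LangSmith SDK: `Tracer`, `SpanRecord`, and the span names are hypothetical, and a real tracing client would export records to a backend rather than keep them in a list.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class SpanRecord:
    name: str
    parent: Optional[str]
    inputs: Dict[str, Any]
    output: Any = None
    duration_s: float = 0.0


class Tracer:
    """Collects spans in memory; a real SDK would export them to a backend."""

    def __init__(self) -> None:
        self.records: List[SpanRecord] = []
        self._stack: List[SpanRecord] = []

    @contextmanager
    def span(self, name: str, **inputs: Any) -> Iterator[SpanRecord]:
        # The innermost open span, if any, becomes this span's parent.
        parent = self._stack[-1].name if self._stack else None
        record = SpanRecord(name=name, parent=parent, inputs=inputs)
        self._stack.append(record)
        start = time.perf_counter()
        try:
            yield record
        finally:
            record.duration_s = time.perf_counter() - start
            self._stack.pop()
            self.records.append(record)


tracer = Tracer()
with tracer.span("chain", question="2+2?") as chain:
    with tracer.span("tool:calculator", expression="2+2") as tool:
        tool.output = 4
    chain.output = f"The answer is {tool.output}"

for r in tracer.records:
    print(r.name, r.parent, r.inputs, r.output)
```

Spans are appended when they close, so the inner tool call is recorded before the enclosing chain; the `parent` field is what lets a trace viewer rebuild the tree.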

Best for

- Developers building LLM apps needing deep trace-level visibility, prompt management, and agent debugging.

- Use cases centered on copilot evals, RAG evaluation, and AI debugging during build-run cycles rather than enterprise-scale agent simulation.

Comparison Summary

- Choose Maxim AI for a full-stack approach that unifies simulation, evals, observability, datasets, and the Bifrost gateway, optimized for cross-functional teams and agent observability at scale. Explore: Experimentation, Simulation & Evaluation, Observability, and Bifrost docs: Unified Interface, Fallbacks, Observability.

- Choose Arize AI if your primary need is model observability and training-related monitoring, with strong capabilities for drift detection and model performance analysis across datasets and cohorts.

- Choose LangSmith if you want LLM tracing, prompt versioning, and developer-oriented agent debugging for LLM applications and tool/chain orchestration.

Conclusion

In 2026, observability for AI agents requires coverage across pre-release experimentation, simulation, evals, and production monitoring. Maxim AI stands out for comprehensive agent observability with multimodal support, human and LLM-in-the-loop evaluation, and enterprise-grade gateway features via Bifrost. Arize AI remains strong for model monitoring and training-focused MLOps, while LangSmith provides deep LLM tracing and developer workflows. Teams scaling agentic systems and seeking reliable AI quality improvements across the lifecycle will benefit most from Maxim’s integrated stack and cross-functional UX. See product pages: Experimentation, Agent Simulation & Evaluation, Agent Observability, and Bifrost docs: Semantic Caching, Governance.

Ready to evaluate your agent stack with enterprise-grade observability? Book a demo: Maxim AI Demo or start now: Sign up.