Top 5 Braintrust Alternatives in 2025

TLDR

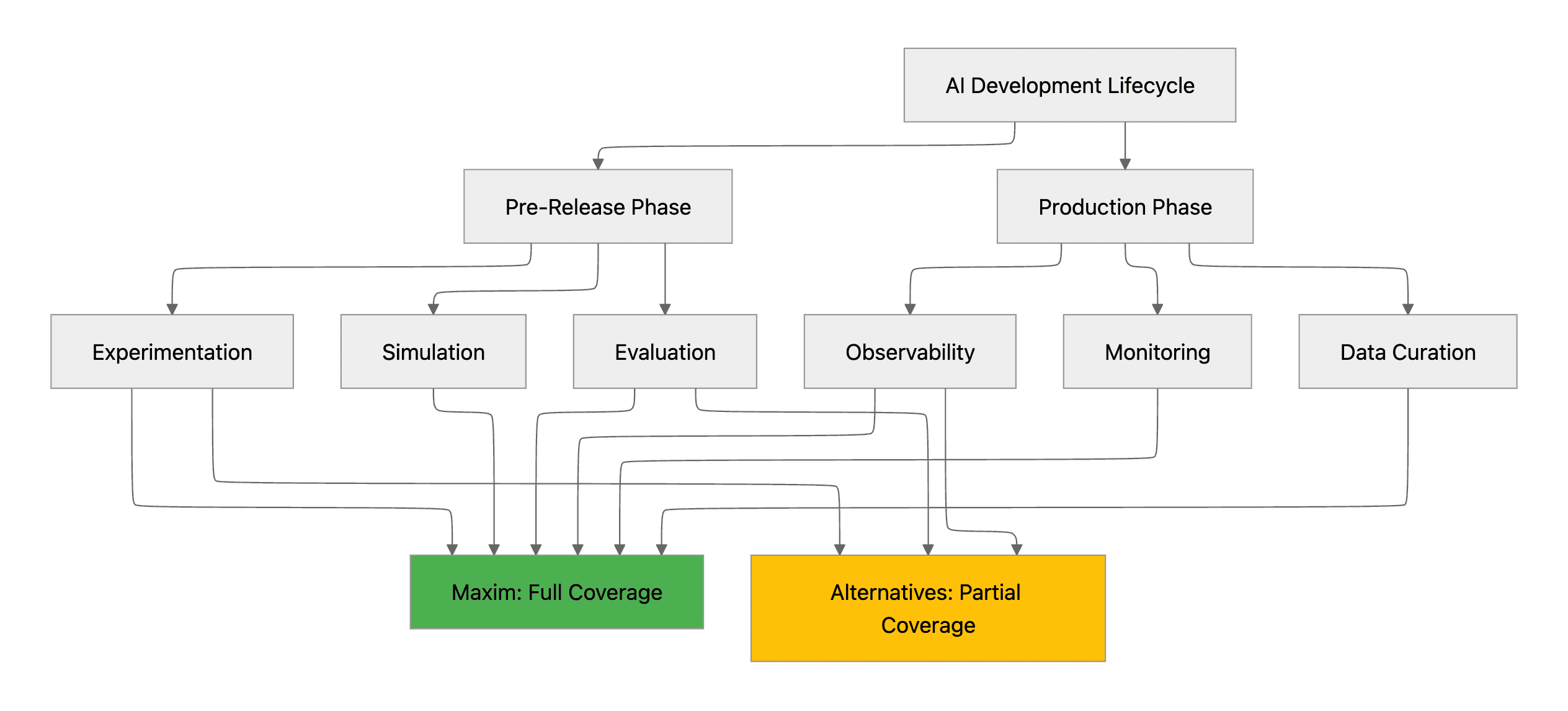

Braintrust focuses on evaluation infrastructure for AI applications, but teams building production multi-agent systems increasingly need platforms that cover the full development lifecycle. Maxim AI provides end-to-end tooling spanning experimentation, agent simulation, evaluation, and production observability, with cross-functional workflows that let engineering and product teams work independently. This guide examines five Braintrust alternatives (Maxim AI, Langfuse, Arize Phoenix, Helicone, and LangSmith) and compares them on lifecycle coverage, agent simulation support, evaluation flexibility, and integration ecosystems, so teams can select the platform that matches their technical requirements and organizational workflows.

Table of Contents

- Introduction

- Why Teams Evaluate Braintrust Alternatives

- Top 5 Braintrust Alternatives

- Platform Comparison

- Selection Framework

Introduction

Braintrust is an AI evaluation platform providing experiment tracking, evaluation infrastructure, and GitHub Actions integration. The platform focuses on evaluation workflows with features including prompt testing, dataset versioning, and automated evaluation scoring.

Teams building production AI applications often need platforms that support the complete development lifecycle, from pre-release experimentation and simulation through production observability and cross-functional collaboration. Because Braintrust centers on evaluation infrastructure, these teams frequently look for tooling with broader coverage.

This guide examines five alternatives to Braintrust in 2025, analyzing their capabilities across experimentation, simulation, evaluation, and observability. The analysis focuses on platforms designed for cross-functional AI teams building multi-agent systems and complex LLM applications.

Why Teams Evaluate Braintrust Alternatives

Organizations building production AI applications evaluate alternatives to Braintrust based on several factors:

- Cross-Functional Workflow Requirements: Braintrust's design centers on engineering workflows. Teams where product managers, QA engineers, and domain experts need independent access to evaluation and experimentation workflows require platforms that support no-code workflows alongside SDK-based integration.

- Lifecycle Coverage: Braintrust focuses on evaluation infrastructure. Teams building complex multi-agent systems require platforms covering the full development lifecycle: pre-release simulation and experimentation, comprehensive evaluation frameworks, production observability, and data management capabilities.

- Integration Ecosystem: Organizations using diverse orchestration frameworks (LangChain, LangGraph, OpenAI Agents, Crew AI) and multiple LLM providers require platforms with broad integration support. Teams building framework-agnostic applications need evaluation infrastructure that works across their entire technology stack.

- Data Management: Production AI systems require continuous dataset evolution based on real-world usage. Teams need platforms that support synthetic data generation, dataset curation from production logs, and human-in-the-loop feedback collection for iterative improvement.

Top 5 Braintrust Alternatives

1. Maxim AI

Maxim AI is an end-to-end AI evaluation and observability platform designed for cross-functional teams building production-grade AI agents. The platform provides comprehensive tooling across the entire AI development lifecycle, from pre-release simulation and experimentation through production monitoring and data management.

Experimentation

Maxim's Experimentation platform serves as an advanced prompt engineering environment enabling rapid iteration without code changes:

- Prompt Management: Organize and version prompts directly from the UI for iterative improvement

- Deployment Flexibility: Deploy prompts with different variables and experimentation strategies without modifying application code

- Seamless Integration: Connect with databases, RAG pipelines, and prompt tools

- Comparison Framework: Compare output quality, cost, and latency across different combinations of prompts, models, and parameters

The experimentation workflow accelerates development velocity by enabling product teams to iterate on prompts independently while engineers focus on core application logic.

Agent Simulation and Evaluation

Maxim's simulation capabilities enable teams to test AI agents across diverse scenarios and user personas before production deployment:

- Multi-Turn Simulation: Simulate customer interactions across real-world scenarios and monitor agent responses at every step

- Conversational-Level Evaluation: Analyze agent trajectories, assess task completion, and identify failure points

- Step-by-Step Debugging: Re-run simulations from any step to reproduce issues and identify root causes

- Parallel Testing: Evaluate across thousands of scenarios, personas, and prompt variations simultaneously

The simulation infrastructure helps teams identify edge cases and failure modes in a controlled environment, before they surface as production incidents.
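The multi-turn loop and the "re-run from any step" idea described above can be sketched in a few lines. This is a minimal illustration, not Maxim's SDK: `simulate`, `SimulationResult`, `scripted_user`, and `toy_agent` are hypothetical names, and the agent is a stub standing in for a real LLM-backed agent.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Turn = Tuple[str, str]                       # (role, message)
Agent = Callable[[List[Turn]], str]          # history -> agent reply
UserSimulator = Callable[[List[Turn]], str]  # history -> next user message


@dataclass
class SimulationResult:
    scenario: str
    history: List[Turn] = field(default_factory=list)
    completed: bool = False


def simulate(scenario: str, agent: Agent, user: UserSimulator,
             is_done: Callable[[str], bool], max_turns: int = 5,
             start_history: Optional[List[Turn]] = None) -> SimulationResult:
    """Run one conversation, recording every step so it can be inspected later."""
    result = SimulationResult(scenario, list(start_history or []))
    for _ in range(max_turns):
        result.history.append(("user", user(result.history)))
        reply = agent(result.history)
        result.history.append(("agent", reply))
        if is_done(reply):
            result.completed = True
            break
    return result


# Hypothetical stand-ins: a scripted persona and a rule-based "agent".
def scripted_user(history: List[Turn]) -> str:
    script = ["I want a refund.", "Order 123."]
    turns_taken = sum(1 for role, _ in history if role == "user")
    return script[min(turns_taken, len(script) - 1)]


def toy_agent(history: List[Turn]) -> str:
    last_message = history[-1][1]
    return "Refund issued." if "Order" in last_message else "What is your order number?"


def refund_issued(reply: str) -> bool:
    return "Refund issued" in reply


first_run = simulate("refund request", toy_agent, scripted_user, refund_issued)
# Replay from just after the first exchange to reproduce a later step in isolation.
replay = simulate("refund request", toy_agent, scripted_user, refund_issued,
                  start_history=first_run.history[:2])
```

Because the full history is kept as plain data, replaying from step `k` only requires slicing `history[:k]` and passing it back in; running many scenarios in parallel is then a matter of mapping `simulate` over a list of scenario and persona pairs.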

Unified Evaluation Framework

Maxim provides flexible evaluation capabilities supporting multiple methodologies:

- Pre-Built Evaluators: Access evaluators through the evaluator store for common use cases

- Custom Evaluators: Create custom evaluators using AI, programmatic, or statistical approaches

- Human Evaluation Workflows: Set up human evaluations for subject matter expert review

- Multi-Level Configuration: Configure evaluations at session, trace, or span level for granular quality measurement

The evaluation framework enables teams to quantify improvements or regressions with confidence before deploying changes to production.
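To make the distinction between programmatic and statistical evaluation concrete, here is a small sketch. It assumes an evaluator is simply a function from output text to a score in [0, 1]; the names `is_valid_json`, `mentions_all`, and `summarize` are illustrative and do not come from any platform's API.

```python
import json
from statistics import mean
from typing import Callable, Dict, List

Evaluator = Callable[[str], float]  # output text -> score in [0, 1]


def is_valid_json(output: str) -> float:
    """Programmatic check: does the output parse as JSON?"""
    try:
        json.loads(output)
        return 1.0
    except json.JSONDecodeError:
        return 0.0


def mentions_all(keywords: List[str]) -> Evaluator:
    """Programmatic check: fraction of required keywords present in the output."""
    def evaluator(output: str) -> float:
        text = output.lower()
        hits = sum(1 for keyword in keywords if keyword.lower() in text)
        return hits / len(keywords) if keywords else 1.0
    return evaluator


def summarize(outputs: List[str], evaluators: Dict[str, Evaluator],
              threshold: float = 0.5) -> Dict[str, Dict[str, float]]:
    """Statistical view: mean score and pass rate per evaluator across a dataset."""
    report = {}
    for name, evaluate in evaluators.items():
        scores = [evaluate(output) for output in outputs]
        report[name] = {
            "mean": mean(scores),
            "pass_rate": sum(score >= threshold for score in scores) / len(scores),
        }
    return report


report = summarize(
    ['{"status": "refund approved"}', "refund pending"],
    {"valid_json": is_valid_json, "keywords": mentions_all(["refund", "approved"])},
)
```

Comparing two such reports, one from the current version and one from a candidate change, is one simple way to quantify an improvement or regression before deployment.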

Production Observability

Maxim's observability suite provides comprehensive monitoring for production AI applications:

- Real-Time Tracing: Monitor multi-step agent workflows using distributed tracing

- Custom Dashboards: Build dashboards that break down agent behavior across custom dimensions

- Automated Quality Checks: Run evaluations on production traffic using rule-based approaches

- Live Issue Tracking: Get real-time alerts and track issues for immediate response

The observability suite enables teams to identify and resolve production issues quickly while continuously learning from real-world usage patterns.
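For readers unfamiliar with tracing, the sketch below shows the core idea behind tracing a multi-step agent workflow: each step is recorded as a span with a parent and a duration. It is a toy, in-memory illustration with hypothetical names (`Tracer`, `Span`); production systems typically export spans to a backend rather than keeping them in a list.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass
class Span:
    name: str
    parent: Optional[str]     # name of the enclosing span, None for the root
    duration_s: float = 0.0


class Tracer:
    def __init__(self) -> None:
        self.spans: List[Span] = []
        self._stack: List[str] = []   # names of currently open spans

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        record = Span(name, self._stack[-1] if self._stack else None)
        self.spans.append(record)
        self._stack.append(name)
        start = time.perf_counter()
        try:
            yield record
        finally:
            # Record the duration even if the traced step raised.
            record.duration_s = time.perf_counter() - start
            self._stack.pop()


tracer = Tracer()
with tracer.span("agent_run"):
    with tracer.span("retrieve_documents"):
        pass  # a retrieval call would go here
    with tracer.span("generate_answer"):
        pass  # an LLM call would go here
```

With parent links in place, an evaluation can be attached at whichever level makes sense: a single span (one retrieval), a trace (one agent run), or a session (several runs for the same user).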

Data Engine

Maxim's data management capabilities support the complete data lifecycle:

- Multi-Modal Import: Import datasets, including images, with simple workflows

- Synthetic Data Generation: Generate synthetic datasets for testing and evaluation

- Production Data Curation: Continuously curate and evolve datasets from production logs

- Data Enrichment: Access in-house or Maxim-managed data labeling and feedback

- Targeted Evaluations: Create data splits for specific evaluation scenarios and experiments

These capabilities ensure teams can continuously evolve their datasets based on real-world learnings and maintain high-quality evaluation benchmarks.
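Curating a dataset from production logs usually comes down to filtering and deduplicating. The sketch below assumes each log record is a dict with `input`, `output`, and optional `score` and `feedback` fields; that record shape and the `curate` function are assumptions made for illustration, not a documented export format.

```python
from typing import Dict, Iterable, List


def curate(logs: Iterable[Dict], max_score: float = 0.5) -> List[Dict]:
    """Keep low-scoring or negatively rated interactions, one per distinct input."""
    seen_inputs = set()
    dataset = []
    for record in logs:
        key = record["input"].strip().lower()
        flagged = (
            record.get("score", 1.0) <= max_score
            or record.get("feedback") == "negative"
        )
        if flagged and key not in seen_inputs:
            seen_inputs.add(key)
            dataset.append({
                "input": record["input"],
                "observed_output": record["output"],
            })
    return dataset


production_logs = [
    {"input": "Cancel my order", "output": "Done.", "score": 0.9},
    {"input": "Where is my refund?", "output": "I don't know.", "score": 0.2},
    {"input": "where is my refund? ", "output": "Unsure.", "score": 0.3},
    {"input": "Change address", "output": "Sure.", "feedback": "negative"},
]
regression_set = curate(production_logs)
```

The resulting entries can then be reviewed by a human, given expected outputs, and added to an evaluation dataset so that the same failures are tested on every future change.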

Bifrost LLM Gateway

Bifrost is Maxim's high-performance AI gateway providing unified access to 12+ providers through a single OpenAI-compatible API:

- Unified Interface: Access OpenAI, Anthropic, AWS Bedrock, Google Vertex, Azure, Cohere, Mistral, Ollama, and Groq through one API

- Automatic Failover: Seamless failover between providers and models with zero downtime

- Semantic Caching: Intelligent response caching based on semantic similarity to reduce costs and latency

- Model Context Protocol: MCP support for external tool integration (filesystem, web search, databases)

- Enterprise Features: Budget management, SSO integration, and comprehensive observability

Bifrost provides enterprise-grade infrastructure for production AI applications with minimal configuration overhead.
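Two of the gateway behaviors listed above, automatic failover and semantic caching, are easy to illustrate in isolation. The following is a conceptual sketch only and says nothing about how Bifrost is implemented: `ToyGateway`, the bag-of-words `embed` function, and the stub providers are all hypothetical, and a real gateway would use a learned embedding model and a vector index.

```python
import math
import re
from typing import Callable, List, Sequence, Tuple

Provider = Callable[[str], str]  # prompt -> completion; raises on failure
VOCAB = ["capital", "france", "weather"]  # toy vocabulary for the toy embedder


def embed(text: str) -> List[int]:
    """Toy embedding: word counts over a fixed vocabulary."""
    words = re.findall(r"[a-z]+", text.lower())
    return [words.count(term) for term in VOCAB]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyGateway:
    def __init__(self, providers: List[Tuple[str, Provider]], threshold: float = 0.9):
        self.providers = providers      # tried in order
        self.threshold = threshold      # minimum similarity for a cache hit
        self.cache: List[Tuple[List[int], str]] = []

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (source, completion); source is 'cache' or a provider name."""
        vector = embed(prompt)
        for cached_vector, cached_reply in self.cache:
            if cosine(vector, cached_vector) >= self.threshold:
                return "cache", cached_reply
        errors = []
        for name, call in self.providers:
            try:
                reply = call(prompt)
            except Exception as exc:    # fail over to the next provider
                errors.append(f"{name}: {exc}")
                continue
            self.cache.append((vector, reply))
            return name, reply
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def unavailable(prompt: str) -> str:
    raise TimeoutError("primary provider timed out")


gateway = ToyGateway([("primary", unavailable), ("backup", lambda prompt: "Paris")])
first = gateway.complete("What is the capital of France?")
second = gateway.complete("France capital?")
```

The similarity threshold is the key design choice in a semantic cache: set it too low and unrelated prompts receive stale answers, set it too high and the cache rarely hits.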

Technical Integration

Maxim provides production-grade SDKs in Python, TypeScript, Java, and Go, supporting diverse technology stacks. The platform integrates natively with popular orchestration frameworks including LangChain, LangGraph, OpenAI Agents, Crew AI, and LiteLLM, enabling teams to maintain existing development workflows while adding comprehensive evaluation capabilities.

The platform supports both cloud deployment and self-hosted options for organizations with strict data residency requirements, with SOC 2 Type 2 compliance and role-based access controls for enterprise security.

Cross-Functional Collaboration

A distinctive characteristic of Maxim is its design for cross-functional collaboration. While powerful SDKs enable engineering teams to integrate evaluation deeply into their workflows, the entire platform is accessible through a no-code UI. Product managers can independently define, run, and analyze evaluations without engineering dependencies, accelerating iteration cycles and enabling data-driven product decisions.

This design philosophy addresses a common pain point where evaluation insights remain siloed within engineering teams, preventing product and business stakeholders from directly accessing the information needed for strategic decisions.

Ideal Use Cases

Maxim excels for:

- Organizations building complex multi-agent systems requiring comprehensive simulation and evaluation

- Cross-functional teams where product managers need independent access to evaluation workflows

- Companies requiring end-to-end lifecycle management from experimentation through production monitoring

- Teams deploying multiple AI applications requiring centralized observability and data management

- Enterprises with strict compliance requirements needing self-hosted deployment options

2. Langfuse

Langfuse is an open-source LLM engineering platform providing observability, prompt management, and evaluation capabilities. The platform builds on OpenTelemetry standards and supports native integrations with LangChain, LlamaIndex, and OpenAI SDK.

Core Capabilities:

- Comprehensive tracing for LLM and non-LLM operations including retrieval, embeddings, and API calls

- Centralized prompt management with version control and deployment without code changes

- LLM-as-a-judge evaluation with execution tracing for debugging

- Dataset management for systematic testing

- Manual annotation and human feedback collection

Technical Characteristics: Langfuse uses a centralized PostgreSQL database architecture and provides SDK-based integration requiring instrumentation of application code. The platform is fully open-source under MIT license with self-hosting support via Docker and Kubernetes.

Limitations: Limited agent simulation capabilities compared to specialized platforms. SDK-based integration requires more setup than proxy-based alternatives. Evaluation features are less comprehensive than platforms purpose-built for evaluation.

Best For: Teams heavily invested in open-source tooling who prioritize observability and prompt management alongside basic evaluation capabilities.

3. Arize Phoenix

Arize Phoenix is an open-source AI observability platform backed by Arize AI, built on OpenTelemetry and OpenInference standards. The platform focuses on model performance monitoring with LLM support.

Core Capabilities:

- OpenTelemetry-based instrumentation for standardized tracing

- Embeddings visualization and analysis for understanding model behavior

- Model performance monitoring tracking accuracy, drift, and data quality

- Framework support for LlamaIndex, LangChain, and DSPy

Technical Characteristics: Phoenix can be deployed locally with a single Docker command and integrates with CI/CD through custom Python scripts. The platform provides both observability and evaluation features, though with primary emphasis on monitoring.

Limitations: No native CI/CD action, requiring custom pipeline orchestration. More observability-focused than evaluation-specific. Limited agent simulation capabilities.
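When a platform has no native CI/CD action, the custom script usually reduces to a quality gate: read evaluation results, compare them to a threshold, and return a non-zero exit code on failure. The sketch below assumes an earlier pipeline step has written scores to a JSON file shaped like `[{"score": 0.9}, ...]`; that file format and the function names are assumptions, and producing the file with Phoenix or any other tool is out of scope here.

```python
import json
from statistics import mean
from typing import List


def gate(scores: List[float], min_mean: float = 0.8) -> int:
    """Return a process exit code: 0 if the mean score clears the bar, else 1."""
    if not scores:
        return 1  # treat "no results" as a failure rather than a silent pass
    return 0 if mean(scores) >= min_mean else 1


def main(results_path: str, min_mean: float = 0.8) -> int:
    with open(results_path) as handle:
        results = json.load(handle)  # assumed shape: [{"score": 0.9}, ...]
    return gate([row["score"] for row in results], min_mean)


# In a pipeline step, the script entry point would be something like:
#   import sys
#   sys.exit(main("eval_results.json"))
```

Most CI systems mark a step as failed when the process exits with a non-zero code, so this is enough to block a merge on an evaluation regression.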

Best For: Teams prioritizing open standards and embeddings analysis, or organizations already using Arize's broader ML observability platform.

4. Helicone

Helicone is an open-source LLM observability platform emphasizing ease of integration and comprehensive analytics. The platform uses a proxy-based architecture for one-line integration.

Core Capabilities:

- One-line proxy integration requiring only baseURL change

- Response caching for cost and latency reduction

- Cost tracking and analytics across users and features

- Session tracing for multi-step workflows

- Automatic failover between LLM providers

Technical Characteristics: Helicone uses a distributed architecture with Cloudflare Workers, ClickHouse, and Kafka, adding approximately 50-80ms latency. The platform supports both cloud usage and self-hosting via Docker or Kubernetes.
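The appeal of proxy-based integration is that the request itself does not change; only the host it is sent to does. The sketch below shows this with the standard library and an OpenAI-style chat completions path. `PROXY_BASE_URL` is a placeholder, not a real Helicone endpoint, and `example-model` is a placeholder model name; a real proxy will generally also require its own authentication header, so consult the vendor documentation for the actual values.

```python
import json
import urllib.request

DIRECT_BASE_URL = "https://api.openai.com/v1"
PROXY_BASE_URL = "https://llm-proxy.example.com/v1"  # placeholder, not a real endpoint


def build_chat_request(base_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


payload = {
    "model": "example-model",  # placeholder model name
    "messages": [{"role": "user", "content": "Hello"}],
}
direct = build_chat_request(DIRECT_BASE_URL, "sk-placeholder", payload)
proxied = build_chat_request(PROXY_BASE_URL, "sk-placeholder", payload)
# Same body and same method; only the URL differs.
```

Because the proxy sits in the request path, it can log, cache, and route traffic without SDK instrumentation, at the cost of the extra network hop reflected in the latency figure above.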

Limitations: Primarily observability-focused with limited evaluation features. No agent simulation capabilities. Evaluation features require integration with external tools.

Best For: Teams seeking quick integration for production observability and cost optimization without requiring comprehensive evaluation capabilities.

5. LangSmith

LangSmith is the LangChain-native development platform providing testing, monitoring, and evaluation for LangChain applications.

Core Capabilities:

- Native LangChain integration with minimal configuration

- Trace visualization for debugging LangChain workflows

- Dataset management and testing capabilities

- Evaluation framework for quality measurement

- Playground for prompt iteration

Technical Characteristics: LangSmith provides seamless integration for LangChain applications but offers limited benefits for teams using other frameworks. The platform includes both cloud and self-hosted deployment options.

Limitations: Limited value for non-LangChain applications. Less comprehensive evaluation capabilities than specialized platforms. No agent simulation features.

Best For: Development teams building primarily with LangChain who want seamless integration without framework-agnostic requirements.

Platform Comparison

| Feature | Maxim AI | Langfuse | Arize Phoenix | Helicone | LangSmith |
|---|---|---|---|---|---|
| Lifecycle Coverage | Full (Simulation, Evaluation, Experimentation, Observability) | Observability, Prompt Management, Basic Evaluation | Observability, Basic Evaluation | Observability Only | Observability, Basic Evaluation |
| Agent Simulation | ✅ Advanced | ❌ No | ❌ No | ❌ No | ❌ No |
| Cross-Functional UI | ✅ No-code for all features | ⚠️ Limited | ⚠️ Limited | ⚠️ Limited | ⚠️ Limited |
| Integration Type | SDK + No-code | SDK-based | SDK-based | Proxy-based | Framework-specific |
| Evaluation Methods | AI, Programmatic, Statistical, Human | LLM-as-judge, Human, Custom | Custom, OpenTelemetry-based | Limited | Basic |
| Multi-Modal Support | ✅ Text, Images, Audio | ⚠️ Limited | ⚠️ Limited | ⚠️ Limited | ⚠️ Limited |
| Data Management | ✅ Advanced (Synthetic gen, Curation) | ⚠️ Basic | ⚠️ Basic | ❌ No | ⚠️ Basic |
| Deployment Options | Cloud, Self-hosted, In-VPC | Cloud, Self-hosted | Self-hosted | Cloud, Self-hosted | Cloud, Self-hosted |
| Enterprise Features | SOC 2, SSO, RBAC, Custom rate limits | Self-hosted options | Open-source | SOC 2, GDPR | Enterprise plans |
| LLM Gateway | ✅ Bifrost (12+ providers) | ❌ No | ❌ No | ⚠️ Basic | ❌ No |

Selection Framework

Selection criteria depend on organizational requirements and technical context:

Choose Maxim AI if:

- Building complex multi-agent systems requiring comprehensive simulation and evaluation

- Cross-functional teams need independent access to evaluation workflows

- Requirements span the full lifecycle from experimentation through production monitoring

- Multi-modal support is critical for your applications

- Need enterprise-grade deployment options with compliance certifications

Consider Langfuse if:

- Open-source tooling is a hard requirement

- Primary need is observability with basic evaluation

- LangChain integration is important but not exclusive

Consider Arize Phoenix if:

- OpenTelemetry standardization is a priority

- Already using Arize's ML monitoring platform

- Embeddings analysis is a core requirement

Consider Helicone if:

- Need quick integration with minimal configuration

- Primary focus is cost optimization and observability

- Evaluation requirements are minimal

Consider LangSmith if:

- Building exclusively with LangChain

- Framework-specific integration provides sufficient value

- Evaluation needs are basic
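The criteria above can be applied mechanically as a first-pass shortlist. The sketch below encodes each list as a set of tags and ranks platforms by how many of a team's needs they match. The tag names are shorthand invented here for the bullets in this guide, and the ranking is a rough starting point for discussion, not a substitute for a hands-on trial.

```python
from typing import Dict, List, Set, Tuple

# Tags paraphrase the bullets in this selection framework.
CRITERIA: Dict[str, Set[str]] = {
    "Maxim AI": {"agent_simulation", "cross_functional_access", "full_lifecycle",
                 "multi_modal", "compliance"},
    "Langfuse": {"open_source", "observability", "basic_evaluation"},
    "Arize Phoenix": {"opentelemetry", "uses_arize", "embeddings_analysis"},
    "Helicone": {"quick_integration", "cost_optimization", "observability"},
    "LangSmith": {"langchain_only", "basic_evaluation"},
}


def shortlist(needs: Set[str]) -> List[Tuple[str, List[str]]]:
    """Rank platforms by number of matched needs; ties are broken alphabetically."""
    matches = []
    for platform, tags in CRITERIA.items():
        overlap = sorted(needs & tags)
        if overlap:
            matches.append((platform, overlap))
    return sorted(matches, key=lambda item: (-len(item[1]), item[0]))


candidates = shortlist({"open_source", "observability"})
```

A team would typically extend the tags with its own hard requirements, such as deployment model or budget, before relying on the ranking.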

Get Started with Maxim AI

Maxim AI provides comprehensive tooling for teams building production-grade AI agents. The platform supports the entire development lifecycle with specialized capabilities for cross-functional collaboration, enabling product and engineering teams to ship reliable AI applications faster.

Sign up for free to start building with Maxim, or schedule a demo to explore how Maxim can accelerate your AI development workflow.

For technical documentation and integration guides, visit the Maxim documentation.