Top 5 LLM Gateways for 2026: A Comprehensive Comparison

Table of Contents

- TL;DR

- Quick Comparison Table

- Overview > What is an LLM Gateway

- Detailed Feature Matrix

- Gateway Profiles

- Selection Guide > How to Choose The Right Gateway?

- Conclusion

TL;DR

The LLM gateway landscape has matured significantly. The top gateways combine reliability, observability, governance, and cost optimization (routing traffic to cheaper models) across multi-provider deployments. Bifrost excels in enterprise-grade governance, observability, and performance, Cloudflare AI Gateway for unified management, LiteLLM in developer-friendly open-source compatibility, Vercel AI Gateway in developer experience and framework integration, and Kong AI Gateway in API management integration. Teams should evaluate gateways on failover capabilities, latency, cost efficiency, observability, policy controls, and integration depth with evaluation frameworks and agent debugging tools.

Quick Comparison Table

| Gateway | Latency Overhead | Open Source | Enterprise Ready | Best For |

|---|---|---|---|---|

| Bifrost | ~11µs | ✓ | ✓ | Governance & Performance |

| Cloudflare | Medium | ✗ | ✓ | Unified Management |

| LiteLLM | Medium | ✓ | Partial | Portability & Flexibility |

| Vercel | <20ms | ✗ | ✓ | Frontend Teams & Next.js |

| Kong | Medium | ✗ | ✓ | API Management Integration |

Overview > What is an LLM Gateway

An LLM gateway unifies multiple model providers behind a single API, enabling policy enforcement, automatic failover, load balancing, semantic caching, usage governance, and centralized observability. With 2025 ending and enterprise AI adoption accelerating into 2026, LLM gateways have evolved from nice-to-have tools to mission-critical infrastructure. Mature teams rely on gateways to maintain AI reliability, reduce latency and cost, and standardize prompt management, LLM routing, and agent tracing across environments.

Overview > What is an LLM Gateway > Key Capabilities

- Reliability and failover: Routes traffic across providers and models to avoid downtime

- Cost and latency optimization: Uses caching, routing heuristics, and budgets to control spend and latency

- Security and governance: Centralizes credentials, enforces rate limits, and sets per-team access controls

- Observability and tracing: Captures spans, sessions, and LLM tracing for agent monitoring

- Evals integration: Connects logs to test suites, enabling LLM evaluation, agent evaluation, and hallucination detection pre- and post-release

- Guardrails and safety: Centralized safety layer for policy enforcement, content moderation, and output validation with auto-fallbacks and tracing

KEY INSIGHT: Modern LLM gateways are no longer just proxies, they're reliability platforms that handle the operational complexity of multi-model AI systems.

Detailed Feature Matrix

| Feature Category | Bifrost | Cloudflare | LiteLLM | Vercel | Kong |

|---|---|---|---|---|---|

| Performance & Latency | |||||

| Latency Overhead | ~11µs | Medium | Medium | <20ms | Medium |

| High RPS Support | 5K+ RPS | High | Medium | High | High |

| Semantic Caching | ✓ | ✓ | ✓ | ✓ | ✓ |

| Multi-Provider Support | |||||

| Number of Providers | 11,000+ models | 350+ models | 100+ providers | Hundreds | Multiple |

| Automatic Failover | ✓ | ✓ | ✓ | ✓ | ✓ |

| Load Balancing | Adaptive | ✓ | Router-based | ✓ | ✓ |

| Observability & Monitoring | |||||

| Built-in Dashboard | ✓ | ✓ | Limited | ✓ | ✓ |

| Real-time Logs | ✓ | Within 15s | ✓ | ✓ | ✓ |

| Prometheus Metrics | ✓ | ✗ | Via callbacks | Limited | ✓ |

| OpenTelemetry | ✓ | ✗ | Via integrations | Limited | ✓ |

| Request/Response Inspection | ✓ | ✓ | ✓ | ✓ | ✓ |

| Governance & Security | |||||

| Virtual Keys | ✓ | ✗ | ✓ | Limited | ✓ |

| SSO Support | ✓ (Google, GitHub) | ✓ | Limited | ✓ | ✓ |

| Vault Integration | ✓ | ✗ | Limited | Limited | ✓ |

| Granular Budgets | ✓ (per-team, customer, project) | Limited | ✓ | Limited | ✓ |

| Rate Limiting | Configurable | ✓ | ✓ | ✓ | Advanced |

| MCP Tool Filtering | ✓ | ✗ | ✗ | ✗ | ✓ |

| Deployment Options | |||||

| Self-Hosted | ✓ (NPX, Docker) | ✗ | ✓ | ✗ | ✓ |

| Cloud-Hosted | ✓ | ✓ | ✓ | ✓ | ✓ |

| P2P Clustering | ✓ | ✗ | ✗ | ✗ | ✓ |

| Developer Experience | |||||

| OpenAI API Compatible | ✓ | ✓ | ✓ | ✓ | ✓ |

| Visual Provider Setup | ✓ | ✓ | Limited | ✓ | Via UI |

| Plugin Architecture | ✓ | Limited | Limited | Limited | Extensive |

| Framework Integration | Multiple | Cloudflare Workers | Multiple | Next.js/React | Kong ecosystem |

| Pricing Model | |||||

| Open Source | ✓ | ✗ | ✓ | ✗ | ✗ |

| Free Tier | ✓ | ✓ | ✓ | ✓ | Limited |

| Enterprise | ✓ | ✓ | ✓ | ✓ | ✓ |

Gateway Profiles

Gateways > Bifrost by Maxim AI

Provider: Maxim AI

Repository: GitHub

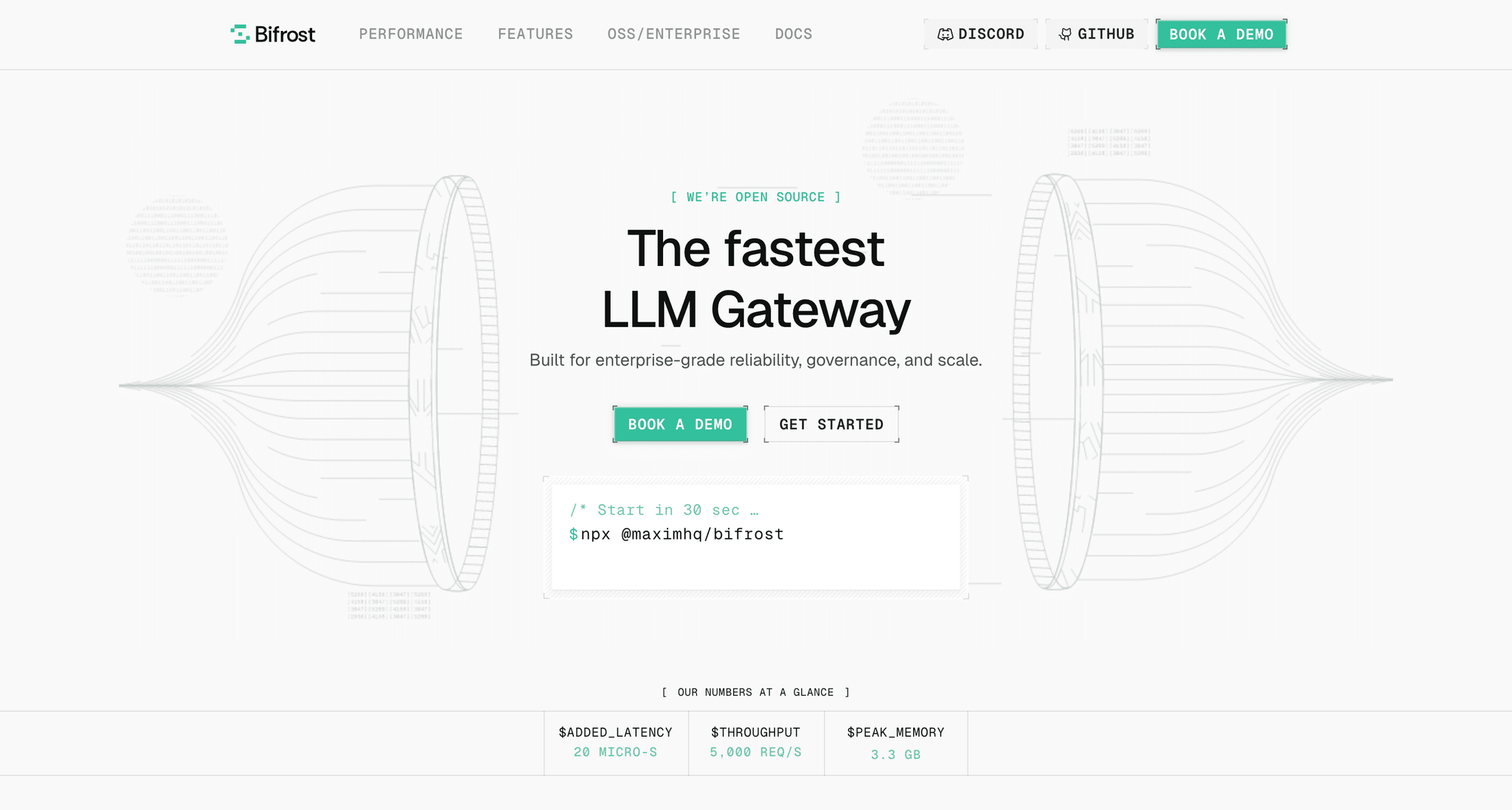

Documentation: docs.getbifrost.ai

Bifrost is a high-performance and the fastest open-source AI gateway written in Go, offering a unified interface for 1,000+ models including OpenAI, Anthropic, Mistral, Ollama, Bedrock, Groq, Perplexity, Gemini and more. It delivers automatic fallbacks, intelligent load balancing, semantic caching, guardrails for content filtering and security, and enterprise-grade governance, with native observability and integrations for Model Context Protocol (MCP). The gateway can be deployed via NPX for instant setup or through Docker for containerized environments, making it accessible for teams of all sizes.

Bifrost > At a Glance

┌─────────────────────────────────┐

│ BIFROST QUICK SPECS │

├─────────────────────────────────┤

│ Type: Open-source │

│ Language: Go │

│ Deployment: NPX, Docker │

│ Latency: ~11µs overhead │

│ Models: 11,000+ │

│ RPS Tested: 5K+ │

│ License: Open-source │

└─────────────────────────────────┘

Bifrost > Core Capabilities

- Ultra-low latency: Adds just ~11µs overhead at 5K RPS under sustained load

- Multi-provider support: Automatic failover with zero downtime across major providers

- Plugin-first architecture: Clean, extensible middleware for custom logic

- Drop-in replacement: Change only the base URL in existing OpenAI SDK connections

- Visual provider setup: Add API keys through UI without code changes

- Smart key distribution: Intelligent load balancing with model-aware key filtering and weighted distribution

- Centralized observability: Built-in dashboard with real-time log monitoring UI, advanced filtering, request/response inspection, token/cost analytics, and out-of-the-box Prometheus metrics and OpenTelemetry traces

- Governance and budget: Virtual keys for secure access control, intelligent routing policies, granular budgets (per-team, per-customer, per-project), configurable rate limits, and MCP tool filtering to control agent tool access

Bifrost > Enterprise Features

- Alerts: Real-time notifications for budget limits, failures, and performance issues on Email, Slack, PagerDuty, Teams, Webhook and more.

- Log Exports: Export and analyze request logs, traces, and telemetry data from Bifrost with enterprise-grade data export capabilities for compliance, monitoring, and analytics.

- Audit Logs: Comprehensive logging and audit trails for compliance and debugging.

- Guardrails: Automatically detect and block unsafe model outputs with real-time policy enforcement and content moderation across all agents.

- Cross-node synchronization: Temporarily removes poorly performing keys from rotation

- Governance: SAML support for SSO and Role-based access control and policy enforcement for team collaboration.

- Vault Support: Secure API key management with HashiCorp Vault, AWS Secrets Manager, Google Secret Manager, and Azure Key Vault integration. Store and retrieve sensitive credentials using enterprise-grade secret management.

- Adaptive load balancing: Automatically optimizes traffic distribution across provider keys and models based on real-time performance metrics.

- Cluster Mode: High availability deployment with automatic failover and load balancing. Peer-to-peer clustering where every instance is equal.

Bifrost > Best For

Teams requiring enterprise-grade governance, strong observability, ultra-low latency, and deep integration with Maxim’s evaluation and simulation platform.

Gateways > Cloudflare AI Gateway

Cloudflare AI Gateway provides a unified interface to connect with major AI providers including Anthropic, Google, Groq, OpenAI, and xAI, offering access to over 350 models across 6 different providers.

Cloudflare AI Gateway > Features

- Multi-provider support: Works with Workers AI, OpenAI, Azure OpenAI, HuggingFace, Replicate, Anthropic, and more

- Performance optimization: Advanced caching mechanisms to reduce redundant model calls and lower operational costs

- Rate limiting and controls: Manage application scaling by limiting the number of requests

- Request retries and model fallback: Automatic failover to maintain reliability

- Real-time analytics: View metrics including number of requests, tokens, and costs with insights on requests and errors

- Comprehensive logging: Stores up to 100 million logs in total (10 million logs per gateway, across 10 gateways) with logs available within 15 seconds

- Dynamic routing: Intelligent routing between different models and providers

Cloudflare AI Gateway > Best For

Organizations already using Cloudflare services who want unified management of traditional and AI traffic.

Gateways > LiteLLM

LiteLLM is an open-source gateway that translates requests to the OpenAI API format for 100+ providers including Bedrock, Huggingface, VertexAI, Azure, Groq, and more. It standardizes outputs across providers, handles retry/fallback logic through its Router, and provides budget and rate limiting controls via its Proxy Server.

LiteLLM > Features

- OpenAI API compatibility: Minimal refactoring required for provider/model swaps

- Simple routing primitives: Retries and basic caching patterns

- Authentication and key management: Budget limits and rate controls

- Observability integrations: Pre-defined callbacks for Lunary, MLflow, Langfuse, Helicone, and other platforms

LiteLLM > Best For

Teams prioritizing portability, early-stage experimentation, and open-source flexibility who can manage operational complexity.

Gateways > Vercel AI Gateway

Vercel AI Gateway, now generally available, provides a single endpoint to access hundreds of AI models across providers with production-grade reliability. The platform emphasizes developer experience, with deep integration into Vercel's hosting ecosystem and framework support.

Vercel AI Gateway > Features

- Multi-provider support: Access to hundreds of models from OpenAI, xAI, Anthropic, Google, and more through a unified API

- Low-latency routing: Consistent request routing with latency under 20 milliseconds designed to keep inference times stable regardless of provider

- Automatic failover: If a model provider experiences downtime, the gateway automatically redirects requests to an available alternative

- OpenAI API compatibility: Compatible with OpenAI API format, allowing easy migration of existing applications

- Observability: Per-model usage, latency, and error metrics with detailed analytics

Vercel AI Gateway > Best For

Teams already using Vercel for hosting who want seamless integration with Next.js, React, and modern frameworks, or developers prioritizing rapid experimentation with multiple models and minimal infrastructure management.

Gateways > Kong AI Gateway

Kong AI Gateway extends Kong's proven API gateway platform to support LLM routing, providing features including observability, semantic security and caching, and routing.

Kong AI Gateway > Features

- Multi-provider routing: Support for OpenAI, Anthropic, Cohere, Azure OpenAI, and custom endpoints through Kong's plugin architecture

- Request/response transformation: Normalize formats across different providers

- Rate limiting and quota management: Token analytics and cost tracking

- Enterprise security: Authentication, authorization, mTLS, API key rotation

- MCP support: Centralized MCP server management

- Extensive plugin marketplace: Rich ecosystem of extensions and integrations

Kong AI Gateway > Best For

Organizations already using Kong for API management who want to consolidate traditional API and AI gateway management.

Selection Guide > How to Choose The Right Gateway?

Selecting a gateway depends on reliability needs, governance requirements, integration depth, and team workflows.

- Reliability and failover:

- Evaluate automatic fallbacks, circuit breaking, and multi-region redundancy. For mission-critical apps, prioritize proven failover like Bifrost’s fallbacks.

- Observability and tracing:

- Ensure distributed tracing, span-level visibility, and metrics export. Bifrost’s observability integrates Prometheus and structured logs.

- Cost and latency:

- Seek semantic caching to cut costs and tail latency; ensure budgets and rate limits per team/customer.

- Security and governance:

- Confirm SSO, Vault support, scoped keys, and RBAC.

- Developer experience:

- Prefer OpenAI-compatible drop-in APIs and flexible configuration. •

- Lifecycle integration:

- Align with prompt versioning, evals, agent simulation, and production ai monitoring. Maxim’s full-stack approach supports pre-release to post-release needs.

Conclusion

Enterprises need LLM gateways that deliver reliability, observability, governance, and excellent developer experience. Each gateway serves distinct needs:

- Bifrost: Choose for ultra-low latency (11µs overhead), enterprise-grade governance, strong observability, and deep integration with Maxim's evaluation and simulation stack

- Cloudfare: for unified management

- LiteLLM: Ideal for open-source flexibility, portability, and teams comfortable managing infrastructure

- Vercel: for frontend-focused teams, and Next.js/React integration with AI SDK

- Kong AI Gateway: Perfect for teams with existing Kong infrastructure wanting unified API and AI management

The LLM gateway you choose should align with your infrastructure, team expertise, and reliability requirements. As AI systems become more complex with multi-agent workflows and increased production usage, investing in robust gateway infrastructure becomes essential for maintaining reliability, controlling costs, and ensuring governance.

Ready to implement enterprise-grade guardrails for your AI applications? Start with Bifrost to get comprehensive protection with minimal implementation overhead, or schedule a demo to learn how Maxim AI's platform can help you build, evaluate, and monitor AI systems with confidence.