Top 5 Prompt Versioning Tools for Reliable AI Workflows

As AI applications transition from experimental prototypes to production systems, the gap between success and failure often hinges on prompt management. Organizations deploying large language models (LLMs) face a critical challenge: how do you systematically track, test, and deploy prompt changes without introducing regressions that impact thousands of users? Without proper versioning infrastructure, teams struggle with unpredictable outputs, difficult rollbacks, and deployment failures. These problems feed into a stark industry statistic: research shows that 95% of AI pilot programs fail to deliver measurable impact.

Prompt versioning has evolved from basic change tracking into a comprehensive development infrastructure that spans experimentation, evaluation, deployment, and production monitoring. The non-deterministic nature of LLMs means that even minor prompt modifications can cause significant output degradation, making systematic version control essential for maintaining reliability at scale.

This guide examines the five leading prompt versioning platforms in 2025, analyzing their capabilities across version management, integration with evaluation, deployment workflows, and production observability. Whether you're managing a handful of prompts or orchestrating complex multi-agent systems, understanding these tools helps you build the foundation for systematic prompt development and continuous quality improvement.

Table of Contents

- What is Prompt Versioning?

- Why Prompt Versioning is Critical for AI Workflows

- Top 5 Prompt Versioning Tools
  - Maxim AI - End-to-End Platform for AI Lifecycle Management
  - PromptLayer - Registry-Based Prompt Management
  - Helicone - LLM Observability with Version Control
  - LangSmith - LangChain-Native Versioning
  - Braintrust - GitHub-Integrated Development Platform

- Feature Comparison

- Workflow Integration: From Development to Production

- Best Practices for Prompt Versioning

- Conclusion

What is Prompt Versioning?

Prompt versioning is the systematic practice of tracking, managing, and documenting changes to prompts used in AI applications. Similar to how software engineers use Git for code version control, prompt versioning enables AI teams to maintain reproducibility, facilitate collaboration, and ensure production reliability.

According to research on AI engineering best practices, organizations that implement systematic prompt management reduce deployment failures by identifying regressions before they reach production. Every prompt modification receives a unique version identifier, complete with metadata including author, timestamp, and change descriptions.
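As a rough illustration of what a version record can look like, here is a minimal sketch in Python. The `PromptVersion` and `PromptHistory` names, the field layout, and the sequential integer identifiers are assumptions made for this example; they do not reflect any particular tool's schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    """One immutable entry in a prompt's history (hypothetical schema)."""
    version: int
    template: str
    author: str
    created_at: datetime
    change_description: str


class PromptHistory:
    """Append-only version history for a single named prompt."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._versions: list[PromptVersion] = []

    def commit(self, template: str, author: str, change_description: str) -> PromptVersion:
        # Identifiers are assigned sequentially, starting at 1.
        entry = PromptVersion(
            version=len(self._versions) + 1,
            template=template,
            author=author,
            created_at=datetime.now(timezone.utc),
            change_description=change_description,
        )
        self._versions.append(entry)
        return entry

    def get(self, version: int) -> PromptVersion:
        if not 1 <= version <= len(self._versions):
            raise KeyError(f"{self.name} has no version {version}")
        return self._versions[version - 1]

    def latest(self) -> PromptVersion:
        if not self._versions:
            raise LookupError(f"{self.name} has no versions yet")
        return self._versions[-1]
```

Because each record is frozen and the history is append-only, an older version can always be retrieved exactly as it was committed, which is the property that makes outputs reproducible.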

Why Prompt Versioning is Critical for AI Workflows

The non-deterministic nature of large language models (LLMs) makes prompt versioning essential for production AI systems. A 2024 analysis of AI pilot programs revealed that 95% fail to deliver measurable impact, with unmanaged prompt changes contributing significantly to this failure rate.

Key challenges without systematic versioning:

- Quality regressions: Minor prompt modifications can cause unpredictable output degradation across thousands of user interactions

- Audit trail gaps: Inability to trace which prompt version produced specific outputs complicates debugging and compliance

- Deployment friction: Coupling prompts with application code forces full redeployments for simple prompt iterations

- Collaboration bottlenecks: Engineering teams become gatekeepers for prompt modifications, slowing iteration cycles

- Rollback complexity: Without version history, reverting problematic changes becomes manual and error-prone

Research from LaunchDarkly on prompt management emphasizes that proper versioning addresses these issues by providing transparency, enabling controlled rollbacks, and maintaining compliance audit trails.

Top 5 Prompt Versioning Tools

1. Maxim AI - End-to-End Platform for AI Lifecycle Management

Maxim AI delivers the most comprehensive solution for prompt versioning, integrating experimentation, evaluation, simulation, and observability into a unified platform. Designed for cross-functional collaboration between AI engineers and product teams, Maxim enables teams to iterate more than 5x faster while maintaining production quality.

Comprehensive Versioning and Organization

Maxim's Prompt IDE provides a multimodal playground supporting closed-source, open-source, and custom models with advanced versioning capabilities:

- Automated version tracking: Every prompt change receives automatic versioning with complete metadata including author, timestamp, and optional change descriptions

- Visual diff comparison: Side-by-side comparison of prompt versions highlights changes and performance impacts

- Folder and tag organization: Hierarchical organization maps prompts to projects, teams, or products for efficient retrieval

- Session management: Save and recall entire conversation histories for multi-turn testing and iterative development

- Immutable version history: Published versions remain unchanged, ensuring reproducibility across deployments

The platform maintains comprehensive audit trails for every modification, supporting both compliance requirements and root-cause analysis during debugging.

Integrated Evaluation Framework

Maxim's evaluation suite sets it apart with the deepest integration between versioning and quality assessment:

Prebuilt evaluators:

- Bias and toxicity detection

- Faithfulness and coherence metrics

- RAG-specific evaluation (retrieval precision, recall, relevance)

- Context relevance for retrieval-augmented generation

Custom evaluation support:

- Deterministic rule-based evaluators

- Statistical analysis frameworks

- LLM-as-a-judge implementations

- Human annotation workflows with labeling interfaces

According to the Maxim documentation, teams can configure evaluations at session, trace, or span level, providing granular quality control across multi-agent systems.

CI/CD Native Deployment

Maxim decouples prompt management from application code, enabling rapid iteration without redeployment risks:

- QueryBuilder rules: Deploy prompts based on environment, tags, or folder matching

- Feature flags and A/B testing: Test prompt variants against control groups with statistical confidence

- Gradual rollouts: Deploy changes to user segments progressively, monitoring quality metrics before full deployment

- Automatic rollback: Revert to stable versions when quality degradation is detected

The platform's SDK integrations for Python, TypeScript, Java, and Go enable seamless integration with existing development workflows.
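To make "statistical confidence" in an A/B test concrete, the sketch below compares the success rates of two prompt variants with a standard two-proportion z-test. It is a generic statistical illustration, not Maxim's implementation; the function name and the idea of counting a per-request "success" (for example, a passed evaluation) are assumptions.

```python
import math


def two_proportion_z_test(
    success_a: int, total_a: int, success_b: int, total_b: int
) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # All successes or all failures in both arms: no detectable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A team might promote variant B only when the p-value falls below a threshold chosen in advance (0.05 is a common convention) and the difference is in the desired direction.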

Production Observability

Maxim's observability suite provides real-time monitoring and debugging capabilities:

- Distributed tracing: Track prompt execution across complex agent workflows with OpenTelemetry-compatible tracing

- Performance dashboards: Monitor latency, token usage, and cost metrics per prompt version

- Quality alerts: Automated notifications when evaluation metrics fall below thresholds

- Custom dashboards: Create targeted insights across agent behavior dimensions without code

Research shows that comprehensive observability reduces mean time to resolution for production issues, because teams can quickly identify which prompt version is causing a problem.

AI Agent Simulation

The simulation capabilities enable systematic testing before production deployment:

- Test AI agents across hundreds of scenarios and user personas

- Evaluate conversational trajectories and task completion rates

- Re-run simulations from any step to reproduce issues and validate fixes

- Measure quality improvements between prompt versions at scale

Enterprise Security and Governance

Maxim provides production-grade security features essential for regulated industries:

- SOC 2 Type 2 compliance: Certified security controls and audit processes

- In-VPC deployment: Private cloud options maintaining data sovereignty

- Role-based access control (RBAC): Granular permissions controlling who can modify or deploy prompts

- SSO integration: Enterprise authentication with custom identity providers

- Vault support: Secure API key management through HashiCorp Vault

According to Maxim's feature documentation, these capabilities support auditability requirements while enabling cross-functional collaboration.

Cross-Functional Collaboration

Maxim's UX enables non-technical team members to contribute to prompt engineering:

- Visual prompt editor: Product managers and content teams can iterate on prompts without coding

- Comment and annotation: Inline feedback on prompt versions facilitates team coordination

- Approval workflows: Structured review processes ensure quality gates before deployment

- Change notifications: Automated alerts keep stakeholders informed of prompt modifications

This collaboration model reduces the burden on engineering teams while accelerating iteration cycles.

Integration with Bifrost Gateway

Maxim's Bifrost gateway complements prompt versioning with multi-provider routing:

- Automatic failover: Seamless failover between providers and models ensures zero downtime

- Load balancing: Intelligent request distribution across API keys and providers

- Semantic caching: Reduce costs and latency through intelligent response caching

- Governance features: Usage tracking, rate limiting, and fine-grained access control

The unified platform approach ensures prompt versions remain consistent across different LLM providers.

2. PromptLayer - Registry-Based Prompt Management

PromptLayer specializes in visual prompt management with a registry-based approach. The platform enables teams to edit, A/B test, and deploy prompts through a dashboard without code changes.

Key capabilities:

- Visual prompt editor for non-technical stakeholders

- Release labels for production, staging, and development environments

- Evaluation pipelines with backtesting against historical data

- Usage monitoring and latency tracking

According to industry analysis, PromptLayer suits teams seeking streamlined collaboration, though its evaluation capabilities are less comprehensive than full-stack platforms.
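The release-label idea can be sketched as a mutable pointer from an environment name to an immutable version. The code below is a minimal in-memory illustration; `LabelRegistry` and its methods are hypothetical names for this example and are not PromptLayer's API.

```python
class LabelRegistry:
    """Maps release labels (e.g. "production") to immutable prompt versions."""

    def __init__(self) -> None:
        self._versions: dict[int, str] = {}
        self._labels: dict[str, list[int]] = {}

    def publish(self, template: str) -> int:
        # Published versions are never modified, only added.
        version = len(self._versions) + 1
        self._versions[version] = template
        return version

    def promote(self, label: str, version: int) -> None:
        if version not in self._versions:
            raise KeyError(f"unknown version {version}")
        # Keep the promotion history so the label can be rolled back later.
        self._labels.setdefault(label, []).append(version)

    def rollback(self, label: str) -> int:
        history = self._labels.get(label, [])
        if len(history) < 2:
            raise RuntimeError(f"no earlier version to roll back to for {label!r}")
        history.pop()
        return history[-1]

    def resolve(self, label: str) -> str:
        # The application asks for a label, never for a hard-coded version.
        return self._versions[self._labels[label][-1]]
```

In this design the application code only ever calls `resolve("production")`, so promoting or rolling back a prompt changes behavior without a code deployment.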

3. Helicone - LLM Observability with Version Control

Helicone focuses on observability-first prompt management, automatically recording changes for A/B testing and performance comparison. The platform offers a generous free tier, which makes it accessible for early-stage projects.

Core features:

- Automatic version recording for every prompt modification

- Dataset tracking for evaluation consistency

- Rollback support for quick recovery from problematic changes

- Multimodal support for text and image models

Research on prompt engineering tools notes that Helicone's parameter tuning capabilities are less extensive than dedicated experimentation platforms.

4. LangSmith - LangChain-Native Versioning

LangSmith provides prompt versioning tightly integrated with the LangChain ecosystem, using commit-based versioning familiar to software engineers.

Notable features:

- Commit hash-based version identification

- LangChain Hub as a centralized prompt repository

- Tags for release management

- Execution traces for debugging LangChain workflows

According to comparative analysis, LangSmith excels within LangChain environments but requires additional tooling for comprehensive evaluation workflows.

5. Braintrust - GitHub-Integrated Development Platform

Braintrust uniquely connects versioning, evaluation, and deployment with GitHub Actions integration. The platform enables CI/CD workflows where evaluations run automatically on every commit.

Distinctive capabilities:

- Content-addressable IDs ensuring reproducibility

- GitHub Actions for automated evaluation on pull requests

- Threshold-based merge blocking preventing quality regressions

- Comprehensive diff tracking between versions

According to Braintrust's documentation, this approach provides strong regression detection for development-focused teams.
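The general idea behind content-addressable identifiers can be shown with a short hashing sketch. This is not Braintrust's actual scheme; the choice of SHA-256, the 12-character truncation, and the fields included in the hash are all assumptions for illustration.

```python
import hashlib
import json


def content_id(template: str, model: str, params: dict) -> str:
    """Derive a stable identifier from everything that affects the output."""
    # sort_keys and fixed separators give a canonical serialization, so the
    # same content always hashes to the same ID regardless of dict ordering.
    canonical = json.dumps(
        {"template": template, "model": model, "params": params},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
```

Since the identifier is derived from the content itself, two environments that report the same ID are running the same prompt configuration, and any edit, however small, produces a different ID.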

Feature Comparison

| Feature | Maxim AI | PromptLayer | Helicone | LangSmith | Braintrust |
|---|---|---|---|---|---|
| Version Control | Automated with metadata | Release labels | Automatic recording | Commit-based | Content-addressable IDs |
| Evaluation Integration | Comprehensive (bias, toxicity, RAG metrics) | Evaluation pipelines | Basic A/B testing | LangChain-focused | GitHub-integrated evals |
| Deployment | SDK + QueryBuilder rules | Visual deployment | Basic rollback | LangChain Hub | CI/CD native |
| Observability | Full distributed tracing | Usage monitoring | Observability-first | Execution traces | Performance tracking |
| Collaboration | Cross-functional UI | Visual editor | Basic dashboard | Developer-focused | GitHub workflows |
| Enterprise Security | SOC 2, in-VPC, RBAC | SOC 2 compliant | Free tier generous | Enterprise available | Self-hosted option |
| Multi-Agent Support | Native simulation | Limited | Limited | Via LangChain | Evaluation focus |
| Custom Evaluators | Flexible (deterministic, statistical, LLM) | Synthetic evals | Basic metrics | Custom chains | Custom scorers |

Best Practices for Prompt Versioning

Based on research by AI engineering teams, implement these practices for reliable workflows:

1. Adopt Semantic Versioning

Structure version identifiers to communicate change significance:

- MAJOR.MINOR.PATCH (e.g., v2.1.3)

- Major: Breaking changes requiring downstream updates

- Minor: New features maintaining backward compatibility

- Patch: Bug fixes and minor adjustments

This convention, detailed in semantic versioning guidelines, helps teams understand change impact at a glance.
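The MAJOR.MINOR.PATCH rules above can be expressed as a small helper. This is a minimal sketch; the `bump` function and the `v` prefix handling are assumptions for this example, and deciding which kind of change a given prompt edit represents is left to the team.

```python
def bump(version: str, change: str) -> str:
    """Return the next version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = (int(part) for part in version.removeprefix("v").split("."))
    if change == "major":
        # Breaking change: reset the lower components.
        return f"v{major + 1}.0.0"
    if change == "minor":
        return f"v{major}.{minor + 1}.0"
    if change == "patch":
        return f"v{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")
```

For example, `bump("v2.1.3", "patch")` returns `"v2.1.4"`, while `bump("v2.1.3", "major")` returns `"v3.0.0"`.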

2. Document Comprehensive Metadata

Each version should include:

- Change description: What changed and why

- Performance benchmarks: Evaluation scores, latency, cost metrics

- Known limitations: Edge cases or failure modes

- Deployment history: Where and when deployed, user segments affected

Maxim's experimentation platform provides structured interfaces for capturing this documentation alongside prompt content.

3. Implement Regression Testing

Run new versions against established test suites before deployment:

- Automated evaluation: Execute hundreds of test cases measuring quality systematically

- Baseline comparison: Compare new version performance against established benchmarks

- Edge case validation: Ensure improvements don't introduce failures in known scenarios

According to testing best practices, automated evaluation enables rapid validation while maintaining quality standards.
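A regression gate of this kind can be sketched in a few lines. The example below assumes a simple exact-match scorer and a fixed tolerance; real suites typically use richer evaluators, and `run_prompt` stands in for whatever function calls the model with the candidate prompt.

```python
from statistics import mean
from typing import Callable


def exact_match(expected: str, actual: str) -> float:
    """Score 1.0 when the answers match, ignoring case and outer whitespace."""
    return 1.0 if expected.strip().lower() == actual.strip().lower() else 0.0


def evaluate(run_prompt: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Average score of a prompt version over (input, expected output) pairs."""
    return mean(exact_match(expected, run_prompt(inp)) for inp, expected in cases)


def passes_gate(candidate_score: float, baseline_score: float, tolerance: float = 0.02) -> bool:
    """Allow deployment only if the candidate is within tolerance of the baseline."""
    return candidate_score >= baseline_score - tolerance
```

The tolerance absorbs run-to-run noise from non-deterministic outputs; setting it to zero would block any candidate that scores even slightly below the baseline.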

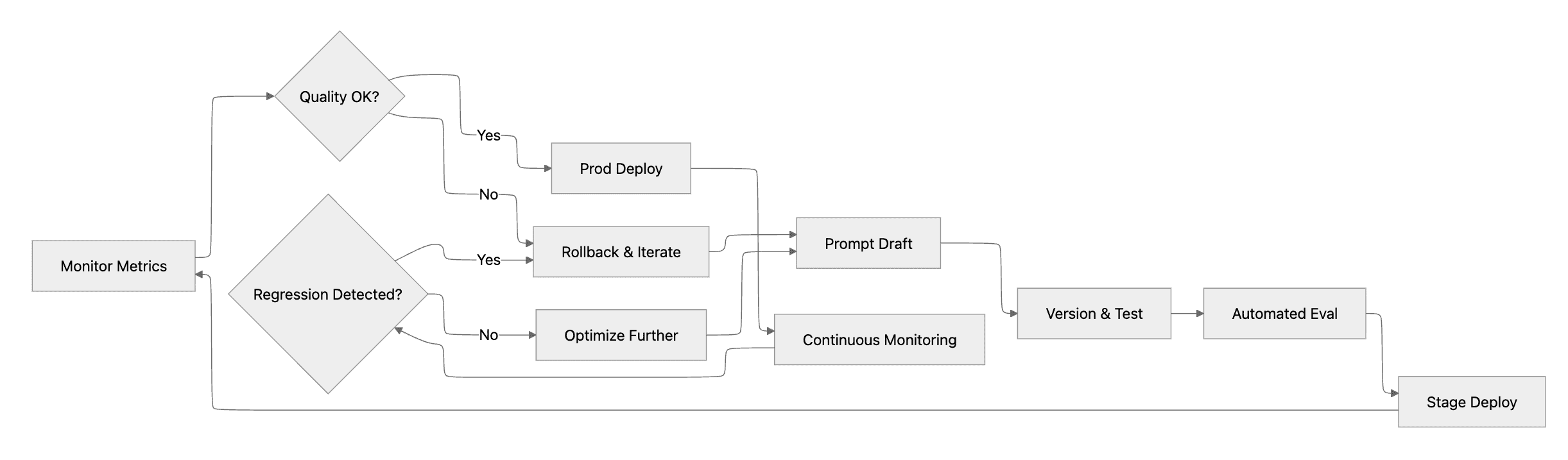

4. Use Staged Deployment Strategies

Deploy changes through controlled processes that minimize risk:

- Environment separation: Test in staging environments mirroring production configurations

- Gradual rollouts: Deploy to small user segments initially, monitoring quality before expansion

- Feature flags: Decouple prompt deployment from code releases to enable rapid iteration
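One common way to implement a gradual rollout is deterministic hash bucketing, sketched below. The function names, the salt value, and the 100-bucket granularity are assumptions for this example rather than any platform's behavior.

```python
import hashlib


def bucket(user_id: str, salt: str) -> int:
    """Map a user to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def select_version(
    user_id: str,
    stable: str,
    candidate: str,
    rollout_percent: int,
    salt: str = "prompt-rollout",
) -> str:
    """Serve the candidate version to roughly rollout_percent of users."""
    # The same user always lands in the same bucket, so raising the
    # percentage only adds users to the candidate group and never removes them.
    return candidate if bucket(user_id, salt) < rollout_percent else stable
```

Setting `rollout_percent` to 0 acts as an immediate rollback, and changing the salt reshuffles users into new buckets for the next experiment.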

5. Monitor Production Performance

Establish comprehensive monitoring for deployed prompts:

- Quality metrics: Track user satisfaction, completion rates, error frequencies

- Cost tracking: Monitor token usage and API costs per prompt version

- Latency analysis: Identify performance degradation before user impact

- Automated alerts: Configure notifications for unexpected metric changes

Research on observability shows that proactive monitoring enables teams to detect and resolve issues before significant user impact.
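Per-version monitoring can be sketched as an aggregation over call logs followed by threshold checks. The `CallRecord` fields and the default thresholds below are illustrative assumptions; in practice these values would come from a tracing or logging backend and from the team's own service targets.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    prompt_version: str
    latency_ms: float
    total_tokens: int
    error: bool


def summarize(records: list[CallRecord]) -> dict[str, dict[str, float]]:
    """Aggregate latency, token usage, and error rate per prompt version."""
    grouped: dict[str, list[CallRecord]] = defaultdict(list)
    for record in records:
        grouped[record.prompt_version].append(record)
    summary = {}
    for version, rows in grouped.items():
        n = len(rows)
        summary[version] = {
            "calls": n,
            "avg_latency_ms": sum(r.latency_ms for r in rows) / n,
            "avg_tokens": sum(r.total_tokens for r in rows) / n,
            "error_rate": sum(r.error for r in rows) / n,
        }
    return summary


def check_alerts(
    summary: dict[str, dict[str, float]],
    max_error_rate: float = 0.05,
    max_avg_latency_ms: float = 2000.0,
) -> list[str]:
    """Return one message per version and metric that exceeds its threshold."""
    messages = []
    for version, stats in sorted(summary.items()):
        if stats["error_rate"] > max_error_rate:
            messages.append(f"{version}: error rate {stats['error_rate']:.1%} above threshold")
        if stats["avg_latency_ms"] > max_avg_latency_ms:
            messages.append(f"{version}: average latency {stats['avg_latency_ms']:.0f} ms above threshold")
    return messages
```

Grouping by prompt version is the key design choice: it lets a regression be attributed to a specific version instead of appearing as a general drift in aggregate metrics.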

Taken together, these practices show how versioning, evaluation, and monitoring work together to maintain reliability throughout the AI lifecycle.

Conclusion

Prompt versioning has evolved from basic change tracking to comprehensive development infrastructure supporting the entire AI lifecycle. Organizations building production AI applications require systematic approaches to prompt management that enable rapid iteration while maintaining quality standards and production reliability.

Among the tools examined, Maxim AI provides the most complete solution for teams seeking end-to-end lifecycle management. The platform uniquely integrates prompt versioning with comprehensive evaluation frameworks, production observability, and AI agent simulation. These capabilities are essential for scaling AI applications reliably. With enterprise-grade security, cross-functional collaboration features, and CI/CD-native deployment, Maxim enables AI teams to iterate faster while maintaining the quality gates necessary for production systems.

For teams already invested in specific ecosystems, PromptLayer offers streamlined registry management, Helicone provides observability-first approaches, LangSmith integrates tightly with LangChain workflows, and Braintrust delivers GitHub-native development experiences. However, as research on AI engineering practices demonstrates, comprehensive platform approaches that unify versioning, evaluation, and monitoring deliver the greatest value for production AI teams.

Ready to implement systematic prompt versioning for your AI workflows? Book a demo to see how Maxim accelerates prompt engineering while maintaining production quality, or sign up now to start versioning prompts systematically and shipping higher-quality AI applications today.