Top 5 Prompt Versioning Tools in 2025: Essential Infrastructure for Production AI Systems

Table of Contents

- TL;DR

- Understanding Prompt Versioning

- Why Prompt Versioning Matters

- Key Capabilities in Prompt Versioning Platforms

- Top 5 Prompt Versioning Tools

- Comparative Analysis

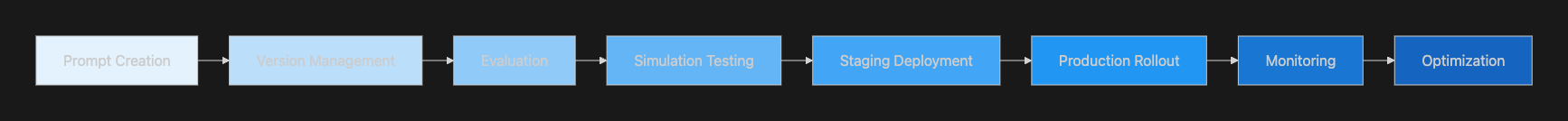

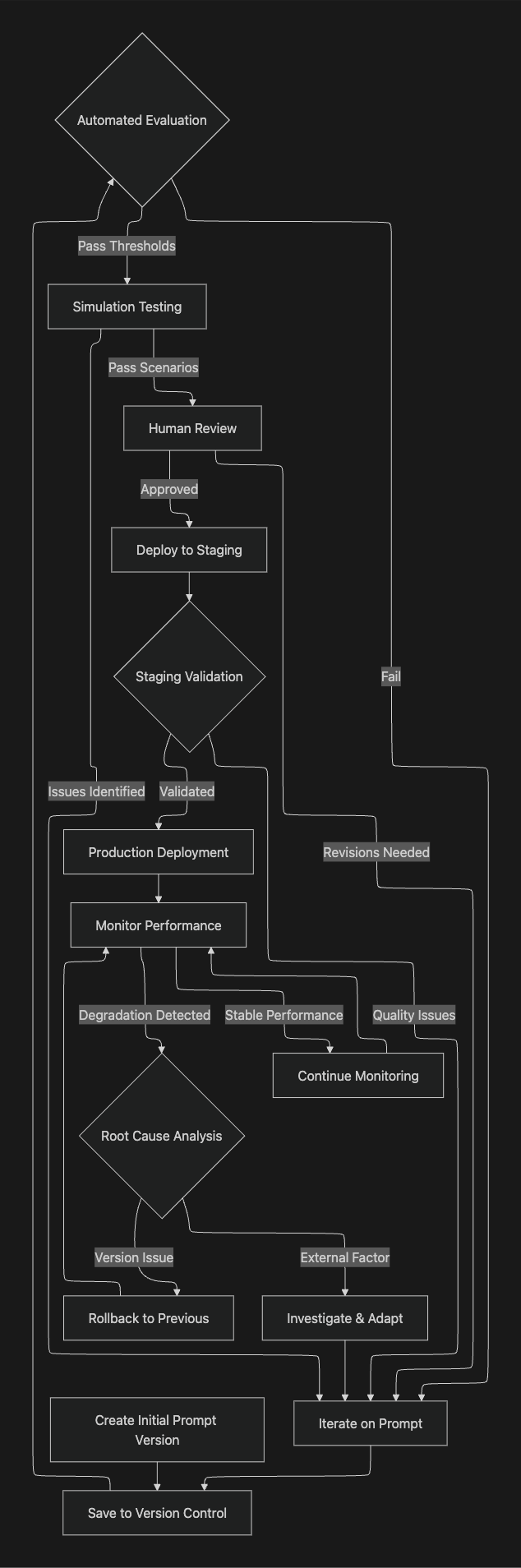

- Version Control Workflow

- Implementation Best Practices

- Conclusion

TL;DR

Prompt versioning has become critical infrastructure for teams building production AI applications. This guide analyzes five leading prompt versioning platforms for 2025:

- Maxim AI: End-to-end platform combining versioning, evaluation, simulation, and observability with cross-functional collaboration

- Langfuse: Open-source prompt CMS with flexible versioning and self-hosting capabilities

- PromptLayer: Git-like version control with visual prompt registry and automated evaluation triggers

- Braintrust: Content-addressable versioning with environment-based deployment and systematic evaluation

- Humanloop: Product-focused prompt management with visual editing and rapid iteration workflows

Organizations should evaluate platforms based on lifecycle coverage, deployment models, collaboration requirements, and integration with existing AI development infrastructure.

Understanding Prompt Versioning

Prompt versioning is the systematic practice of tracking, managing, and deploying different iterations of the instructions provided to large language models. Unlike traditional code versioning, prompt versioning must address challenges specific to non-deterministic AI systems, where identical inputs can produce varying outputs.

The practice encompasses several critical functions:

- Change Tracking: Recording modifications to prompt content, structure, parameters, and metadata

- Performance Attribution: Linking prompt versions to specific output quality metrics and user feedback

- Reproducibility: Ensuring teams can recreate exact prompt configurations from any point in development history

- Rollback Capabilities: Reverting to previous versions when new iterations introduce regressions

- Collaboration Infrastructure: Enabling multiple team members to iterate on prompts without conflicts
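
As a rough illustration of change tracking, reproducibility, and rollback, here is a minimal in-memory sketch. The `PromptRegistry` and `PromptVersion` names, their fields, and the example prompt are all hypothetical and do not correspond to any platform discussed in this guide.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    """One immutable snapshot of a prompt and its settings."""
    number: int
    template: str
    params: dict
    author: str
    note: str
    created_at: datetime


class PromptRegistry:
    """Append-only history per prompt name, plus a pointer to the live version."""

    def __init__(self):
        self._history = {}  # name -> list of PromptVersion
        self._live = {}     # name -> version number currently served

    def commit(self, name, template, params, author, note=""):
        versions = self._history.setdefault(name, [])
        version = PromptVersion(
            number=len(versions) + 1,
            template=template,
            params=dict(params),  # copy so later caller mutations do not leak in
            author=author,
            note=note,
            created_at=datetime.now(timezone.utc),
        )
        versions.append(version)
        self._live[name] = version.number
        return version

    def get(self, name, number=None):
        """Return a specific version, or the live one when number is omitted."""
        number = self._live[name] if number is None else number
        return self._history[name][number - 1]

    def rollback(self, name, number):
        """Point the live pointer at an earlier version; history is untouched."""
        if not 1 <= number <= len(self._history[name]):
            raise ValueError(f"unknown version {number} for {name!r}")
        self._live[name] = number
        return self.get(name)


registry = PromptRegistry()
registry.commit("support-reply", "Answer politely: {question}", {"temperature": 0.2}, "ana")
registry.commit("support-reply", "Answer briefly: {question}", {"temperature": 0.7}, "ben")
registry.rollback("support-reply", 1)
print(registry.get("support-reply").template)
```

Because a rollback only moves the live pointer, version 2 stays in the history and can be re-promoted or inspected later.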

Why Prompt Versioning Matters

The transition from experimental AI prototypes to production systems has made prompt versioning essential for maintaining application reliability and quality.

Non-Deterministic Nature of LLMs

Large language models can produce different outputs for identical inputs because they generate text by sampling from a probability distribution over tokens. Results also vary with model settings such as temperature and with the system message. Without versioning, teams cannot reliably attribute quality changes to specific prompt modifications or isolate variables during optimization.
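
To make the effect of temperature concrete, the sketch below applies a temperature-scaled softmax to a toy set of logits. The logit values are invented for illustration; real models operate over vocabularies of many thousands of tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into sampling probabilities at a given temperature."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


toy_logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens

for temperature in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(toy_logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top token, while higher temperatures spread it out, which is why the same prompt version can behave differently when only the sampling settings change.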

Production Reliability Requirements

Organizations deploying customer-facing AI applications cannot tolerate unpredictable behavior resulting from untracked prompt changes. Versioning provides the audit trail and rollback capabilities necessary for maintaining stable production systems.

Cost Optimization Imperatives

Token consumption directly impacts operational expenses at scale. Systematic prompt optimization enables teams to identify more efficient prompt structures that maintain quality while reducing costs.

Regulatory Compliance

Regulated industries deploying AI systems face requirements for explainability, audit trails, and change documentation. Prompt versioning platforms provide the infrastructure necessary for compliance with governance frameworks.

Cross-Functional Collaboration

Modern AI development involves engineers, product managers, domain experts, and QA professionals. Versioning infrastructure enables these stakeholders to collaborate systematically without introducing conflicts or losing work.

Key Capabilities in Prompt Versioning Platforms

Organizations evaluating prompt versioning tools should assess capabilities across multiple dimensions:

Version Management Infrastructure

- Comprehensive change tracking with metadata and attribution

- Immutable version identifiers for reproducibility

- Branching and merging capabilities for parallel experimentation

- Semantic versioning support for major, minor, and patch updates
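
A semantic version bump for prompts might be sketched as follows. The mapping of change types to prompt edits is an assumed convention, not a standard: here "major" stands for a change to the output contract, "minor" for a new instruction or behavior, and "patch" for a wording fix.

```python
def bump_version(version: str, change: str) -> str:
    """Return the next major.minor.patch string for the given change type."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(bump_version("1.4.2", "minor"))
```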

Deployment and Environment Management

- Environment-based deployment (development, staging, production)

- Gradual rollout capabilities for A/B testing

- Automated deployment rules without code changes

- Rollback procedures for quality issues
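
One common way to implement a gradual rollout is to hash a stable user identifier into a bucket from 0 to 99 and serve the candidate version to buckets below a threshold. The sketch below assumes this approach; the function names, salt, and version labels are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "prompt-rollout") -> int:
    """Map a user to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(user_id: str, stable: str, candidate: str, candidate_percent: int) -> str:
    """Serve the candidate to roughly candidate_percent of users."""
    if rollout_bucket(user_id) < candidate_percent:
        return candidate
    return stable


print(choose_version("user-123", stable="v4", candidate="v5", candidate_percent=10))
```

Hashing rather than random assignment keeps each user on the same version across requests, and setting `candidate_percent` to 0 acts as an immediate rollback.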

Evaluation Integration

- Automated testing triggered on version changes

- Regression detection across prompt modifications

- Performance benchmarking against baseline versions

- Quality metrics tracking over time
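
Regression detection against a baseline can be as simple as comparing mean scores per metric with a tolerance. The following is a minimal sketch; the metric names, scores, and the 0.02 tolerance are illustrative assumptions.

```python
from statistics import mean


def find_regressions(baseline, candidate, tolerance=0.02):
    """Return metrics whose mean score dropped by more than the tolerance."""
    regressions = {}
    for metric, base_scores in baseline.items():
        drop = mean(base_scores) - mean(candidate[metric])
        if drop > tolerance:
            regressions[metric] = round(drop, 4)
    return regressions


baseline_scores = {"accuracy": [0.9, 0.8], "safety": [1.0, 1.0]}
candidate_scores = {"accuracy": [0.7, 0.8], "safety": [1.0, 1.0]}
print(find_regressions(baseline_scores, candidate_scores))
```

A non-empty result could be used to block promotion of the candidate version in a CI/CD step.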

Collaboration Features

- Visual editing interfaces for non-technical users

- Role-based access controls and approval workflows

- Change review and comment capabilities

- Team-wide visibility into version history

Integration and Observability

- CI/CD pipeline integration

- Production monitoring linked to deployed versions

- Real-time performance tracking

- Cost analysis by version
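
Cost analysis by version amounts to grouping logged calls by the prompt version that produced them. This sketch assumes each log record carries a `prompt_version` field and token counts; the record shape and the per-1,000-token prices are hypothetical.

```python
from collections import defaultdict


def cost_by_version(calls, price_per_1k_input, price_per_1k_output):
    """Sum the estimated spend for each prompt version in a list of call logs."""
    totals = defaultdict(float)
    for call in calls:
        cost = (
            call["input_tokens"] / 1000 * price_per_1k_input
            + call["output_tokens"] / 1000 * price_per_1k_output
        )
        totals[call["prompt_version"]] += cost
    return dict(totals)


logged_calls = [
    {"prompt_version": "v1", "input_tokens": 1000, "output_tokens": 500},
    {"prompt_version": "v1", "input_tokens": 2000, "output_tokens": 0},
    {"prompt_version": "v2", "input_tokens": 400, "output_tokens": 200},
]
# Prices are placeholders, not any provider's real rates.
print(cost_by_version(logged_calls, price_per_1k_input=0.5, price_per_1k_output=1.5))
```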

Top 5 Prompt Versioning Tools

1. Maxim AI

Platform Overview

Maxim AI provides an end-to-end platform for AI experimentation, simulation, evaluation, and observability that treats prompt versioning as a foundational component of the complete AI development lifecycle. The platform enables teams to ship AI agents reliably and more than 5x faster through unified workflows spanning experimentation through production monitoring.

Key Features

Advanced Versioning and Organization

Maxim's Experimentation platform provides enterprise-grade prompt management:

- UI-based prompt organization: Structure prompts using folders, tags, and custom metadata for logical grouping across projects and teams

- Comprehensive version tracking: Automatically version every prompt modification with detailed change logs and attribution

- Session management: Save, recall, and tag prompt sessions for iterative development without losing experimental context

- Deployment variables: Configure dynamic prompt parameters that change across environments without modifying core prompt content

- Multimodal support: Version prompts with text, images, audio, and structured outputs in unified workflows
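
The general idea behind deployment variables can be sketched with Python's standard `string.Template`. This is not Maxim's SDK or API; the prompt text, variable names, and environment values are invented for illustration.

```python
from string import Template

# The versioned prompt content stays fixed; only the variables differ per environment.
PROMPT_V3 = Template(
    "You are a support assistant for $product. "
    "If you cannot resolve the issue, direct the user to $escalation_contact."
)

DEPLOYMENT_VARIABLES = {
    "staging": {"product": "Acme (staging)", "escalation_contact": "qa@example.com"},
    "production": {"product": "Acme", "escalation_contact": "support@example.com"},
}


def render_prompt(environment: str) -> str:
    """Fill the template with the variables configured for one environment."""
    return PROMPT_V3.substitute(DEPLOYMENT_VARIABLES[environment])


print(render_prompt("production"))
```

`substitute` raises `KeyError` when a variable is missing, so an incomplete environment configuration fails loudly instead of shipping a prompt with a blank placeholder.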

Integrated Evaluation Framework

Evaluation capabilities distinguish Maxim's versioning approach:

- Automated evaluation triggers: Run comprehensive test suites automatically when prompts are modified

- Regression detection: Compare new versions against baseline performance to catch quality degradation before deployment

- Multi-level granularity: Configure evaluations at session, trace, or span level for fine-grained assessment

- Custom evaluator library: Create domain-specific evaluators using LLM-as-a-judge, statistical, or programmatic approaches

- Human-in-the-loop validation: Integrate expert reviews into version approval workflows

Deployment and Experimentation Infrastructure

Maxim enables systematic deployment without code changes:

- Deployment rules: Define conditions for automatic prompt version selection based on user attributes, feature flags, or A/B test assignments

- Environment management: Maintain separate prompt versions for development, staging, and production with controlled promotion workflows

- Side-by-side comparison: Evaluate output quality, cost, and latency across prompt-model-parameter combinations

- Context integration: Connect databases, RAG pipelines, and tool definitions directly within versioned prompt configurations
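
Rule-based version selection of the kind described above could look roughly like the following first-match evaluator. It is a generic sketch rather than Maxim's rule engine; the attribute names and version labels are hypothetical.

```python
# Each rule pairs required request attributes with the version to serve.
RULES = [
    ({"plan": "enterprise", "region": "eu"}, "v7"),
    ({"beta_tester": True}, "v8-candidate"),
]


def select_version(context, rules=RULES, default="v6"):
    """Return the version of the first rule whose conditions all match."""
    for conditions, version in rules:
        if all(context.get(key) == expected for key, expected in conditions.items()):
            return version
    return default


print(select_version({"plan": "enterprise", "region": "eu"}))
print(select_version({"plan": "free"}))
```

Keeping the rules as data rather than code is what allows version selection to change without redeploying the application.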

Production Observability

Observability features link prompt versions to production performance:

- Distributed tracing: Track which prompt versions generate specific outputs in production environments

- Automated quality monitoring: Run evaluations continuously on production logs to detect version-specific issues

- Performance analytics: Analyze latency, cost, and quality metrics by prompt version

- Alert configuration: Trigger notifications when specific versions exhibit degraded performance

Simulation Testing

Agent simulation capabilities enable comprehensive version testing:

- Scenario-based evaluation: Test prompt versions across hundreds of realistic scenarios before production deployment

- Conversational trajectory analysis: Assess how different versions perform in multi-turn interactions

- Root cause debugging: Re-run simulations from any step to understand version-specific behavior

- Scale testing: Validate prompt performance across diverse user personas and edge cases

Cross-Functional Collaboration

Maxim's design enables seamless collaboration between technical and non-technical stakeholders:

- No-code workflows: Product managers can create, version, and test prompts without engineering dependencies

- Shared workspaces: Engineering and product teams work in unified environments with consistent data access

- Powerful SDKs: Python, TypeScript, Java, and Go SDKs provide programmatic control for technical workflows

- Intuitive visualization: Clear dashboards enable non-technical stakeholders to understand version performance

Best For

Maxim AI is ideal for:

- Organizations requiring comprehensive lifecycle management spanning experimentation, evaluation, simulation, and production monitoring

- Teams building complex agentic workflows requiring systematic testing before deployment

- Cross-functional environments where product managers actively participate in prompt optimization

- Enterprises prioritizing quality assurance with evaluation-driven version selection

- Applications in regulated industries requiring complete audit trails and compliance documentation

- Teams seeking unified platforms to reduce integration complexity across fragmented tooling

2. Langfuse

Platform Overview

Langfuse provides an open-source prompt CMS for managing and versioning prompts with self-hosting support, emphasizing transparency and infrastructure control.

Key Features

- Prompt CMS: Manage and version prompts through a content management system without application redeployment

- Open-source flexibility: Self-host with complete deployment control and data sovereignty

- Non-technical user access: Enable product teams to work with prompts through visual interfaces

- Integration with tracing: Link prompt versions to execution traces for debugging

- Version comparison: Compare outputs across different prompt versions

Best For

- Teams with DevOps capabilities valuing open-source principles

- Organizations requiring complete data sovereignty for privacy-sensitive applications

- Development teams needing transparent, modifiable versioning infrastructure

3. PromptLayer

Platform Overview

PromptLayer focuses on prompt management and versioning through a visual prompt registry, providing Git-like version control with minimal integration friction.

Key Features

- Visual prompt registry: Edit and deploy prompt versions through a dashboard without coding

- Automatic capture: Every LLM call creates a version in the registry without manual tracking

- Evaluation triggers: Automatically run tests when prompts are updated

- Usage analytics: Monitor performance metrics and costs by prompt version

- Regression testing: Test new versions against historical data before deployment

Best For

- Teams wanting simple prompt versioning without extensive infrastructure overhead

- Small teams needing shared access with quick setup

- Organizations prioritizing lightweight integration for early-stage development

4. Braintrust

Platform Overview

Braintrust treats prompts as versioned artifacts with content-addressable IDs, integrating versioning with evaluation infrastructure and staged deployment workflows.

Key Features

- Content-addressable versioning: Unique version IDs derived from content ensure reproducibility

- Environment-based deployment: Associate specific versions with development, staging, and production environments

- Integrated evaluation: Connect versioning to comprehensive testing infrastructure

- Immutable versions: Loading version X always returns identical content regardless of future changes

- Prompt playground: Rapidly test versions with real-time feedback
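
Content-addressable versioning in general means deriving the identifier from the content itself. The sketch below hashes a canonical JSON form of the prompt; it illustrates the concept only and is not Braintrust's actual ID scheme. The function name and the 16-character truncation are assumptions.

```python
import hashlib
import json


def content_id(template: str, params: dict) -> str:
    """Derive a version ID from the prompt text and its parameters."""
    canonical = json.dumps(
        {"template": template, "params": params},
        sort_keys=True,            # key order must not affect the ID
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


first = content_id("Summarize: {text}", {"temperature": 0.2, "max_tokens": 256})
same = content_id("Summarize: {text}", {"max_tokens": 256, "temperature": 0.2})
changed = content_id("Summarize briefly: {text}", {"temperature": 0.2, "max_tokens": 256})
print(first == same, first == changed)
```

Identical content always maps to the same ID, and any edit yields a new one, which is what makes a loaded version reproducible.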

Best For

- Teams building production AI applications requiring systematic evaluation-driven version selection

- Organizations needing environment-based deployment with quality gates

- Development teams preventing regressions through comprehensive testing infrastructure

5. Humanloop

Platform Overview

Humanloop provides product-focused prompt management with emphasis on rapid iteration and visual editing for non-technical team members.

Key Features

- Visual prompt editor: Enable product teams to modify and version prompts without code changes

- Rapid iteration: Quick feedback loops for testing prompt modifications

- Environment support: Manage versions across different deployment stages

- Evaluation features: Basic testing capabilities for version comparison

- User-friendly interface: Accessible to non-technical stakeholders

Best For

- Product-led teams where non-technical members drive prompt optimization

- Organizations prioritizing rapid experimentation over comprehensive evaluation

- Teams needing accessible interfaces for cross-functional collaboration

Comparative Analysis

Feature Comparison Matrix

| Feature | Maxim AI | Langfuse | PromptLayer | Braintrust | Humanloop |
|---|---|---|---|---|---|
| End-to-End Platform | ✓ | ✗ | ✗ | ✗ | ✗ |
| Visual Version Management | ✓ | ✓ | ✓ | ✓ | ✓ |
| Automated Evaluation | ✓ | Limited | ✓ | ✓ | Limited |
| Agent Simulation | ✓ | ✗ | ✗ | ✗ | ✗ |
| Environment Deployment | ✓ | Limited | ✗ | ✓ | ✓ |
| Production Observability | ✓ | ✓ | ✓ | ✓ | Limited |
| Content-Addressable IDs | ✓ | ✗ | ✗ | ✓ | ✗ |
| Open Source | ✗ | ✓ | ✗ | ✗ | ✗ |
| Self-Hosting | ✓ | ✓ | ✗ | ✓ | ✗ |
| CI/CD Integration | ✓ | Limited | ✓ | ✓ | Limited |
| Human-in-the-Loop | ✓ | ✓ | Limited | Limited | ✓ |
| Multimodal Support | ✓ | ✓ | Limited | Limited | Limited |

Lifecycle Coverage Comparison

Platform Coverage:

- Maxim AI: Comprehensive coverage across all lifecycle stages with unified workflows

- Langfuse: Strong in version management and production monitoring; limited pre-deployment testing

- PromptLayer: Focused on version management and evaluation; basic deployment features

- Braintrust: Strong in evaluation and environment-based deployment; comprehensive testing infrastructure

- Humanloop: Emphasizes rapid iteration and visual editing; limited production monitoring

Deployment Model Comparison

| Platform | Cloud Hosted | Self-Hosted | In-VPC | Environment Management |
|---|---|---|---|---|
| Maxim AI | ✓ | ✓ | ✓ | Advanced |
| Langfuse | ✓ | ✓ | ✓ | Basic |
| PromptLayer | ✓ | ✗ | ✗ | Basic |
| Braintrust | ✓ | ✓ | ✗ | Advanced |
| Humanloop | ✓ | ✗ | ✗ | Basic |

Integration and Collaboration

| Platform | No-Code UI | SDK Support | Cross-Functional Workflows | Approval Workflows |
|---|---|---|---|---|
| Maxim AI | Advanced | Python, TS, Java, Go | Optimized | ✓ |
| Langfuse | Basic | Python, TS | Developer-centric | Limited |
| PromptLayer | Advanced | Python | Balanced | Limited |
| Braintrust | Advanced | Python, TS | Balanced | ✓ |
| Humanloop | Advanced | Python | Product-centric | ✓ |

Version Control Workflow

Effective prompt versioning requires systematic workflows connecting version management to evaluation, deployment, and monitoring. The following framework represents best practices for production-grade systems:

Workflow Stage Details

Version Creation and Management

- Document version objectives and expected improvements

- Apply semantic versioning (major.minor.patch) for change categorization

- Include metadata: author, timestamp, related issues, performance baselines

- Link versions to specific evaluation datasets and test scenarios

Automated Evaluation

- Run comprehensive test suites on new versions automatically

- Measure quality dimensions: accuracy, relevance, safety, consistency

- Compare against baseline versions to detect regressions

- Generate quantitative performance reports with statistical significance
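
One simple way to attach statistical significance to a version comparison is an exact two-sided sign test on paired scores, where each test case is scored under both versions. This is a sketch of one possible test, chosen here for its simplicity; the scores are invented.

```python
from math import comb


def sign_test_p_value(baseline, candidate):
    """Exact two-sided sign test on paired scores; ties are ignored."""
    wins = sum(c > b for b, c in zip(baseline, candidate))
    losses = sum(c < b for b, c in zip(baseline, candidate))
    n = wins + losses
    if n == 0:
        return 1.0  # every pair tied, so there is no evidence either way
    k = min(wins, losses)
    # Probability of a split at least this lopsided under a fair coin.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


baseline_scores = [0.5] * 10
candidate_scores = [0.6] * 9 + [0.4]  # candidate wins on 9 of 10 cases
print(sign_test_p_value(baseline_scores, candidate_scores))
```

The sign test only uses the direction of each difference, so it is conservative; teams with larger evaluation sets may prefer a test that also uses the magnitude of the differences.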

Simulation Testing

- Test versions in realistic conversational scenarios

- Evaluate multi-turn interaction quality and task completion rates

- Assess tool usage and decision-making patterns across scenarios

- Identify edge cases where versions exhibit unexpected behavior

Human Review and Approval

- Subject matter expert assessment of nuanced outputs

- Domain-specific validation against business requirements

- Stakeholder sign-off before production deployment

- Documentation of approval rationale and concerns

Staged Deployment

- Deploy to staging environment matching production configuration

- Validate performance with production-scale traffic patterns

- Run A/B tests comparing new version to current production baseline

- Monitor for unexpected issues before full rollout

Production Monitoring

- Track version-specific performance metrics continuously

- Link user feedback to deployed versions

- Alert on quality degradation or anomalous behavior

- Maintain rollback readiness for rapid remediation
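
Alerting on quality degradation can be sketched as a rolling average compared against a baseline score. The `VersionMonitor` class, window size, and threshold below are hypothetical choices for illustration.

```python
from collections import deque


class VersionMonitor:
    """Flag a deployed version when its recent average falls below baseline."""

    def __init__(self, baseline_score, window=50, max_drop=0.1):
        self.baseline_score = baseline_score
        self.max_drop = max_drop
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score):
        """Add one production score; return True when an alert should fire."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window before judging
        recent_average = sum(self.scores) / len(self.scores)
        return self.baseline_score - recent_average > self.max_drop


monitor = VersionMonitor(baseline_score=0.9, window=3, max_drop=0.1)
alerts = [monitor.record(score) for score in (0.9, 0.9, 0.9, 0.5)]
print(alerts)
```

An alert from a monitor like this is a natural trigger for reverting the live pointer to the previous version.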

Conclusion

Prompt versioning has evolved from an optional development practice into essential infrastructure for reliable AI applications. Organizations deploying production systems require systematic approaches to version management that ensure reproducibility, enable collaboration, and maintain quality standards.

Ready to implement production-grade prompt versioning for your AI applications? Explore Maxim AI to experience comprehensive versioning, evaluation, simulation, and observability capabilities designed for cross-functional teams building reliable AI systems. Our unified platform helps engineering and product teams collaborate seamlessly across the entire version lifecycle, reducing time-to-production while maintaining rigorous quality standards.

Schedule a demo to see how Maxim's end-to-end platform can transform your prompt versioning workflows and help your team ship AI agents more than 5x faster with confidence and reliability.