Top 5 RAG Observability Tools to Boost Your Agentic Workflow

TLDR

RAG observability demands visibility into retrievals, tool calls, LLM generations, and multi-turn sessions, backed by robust evaluation and monitoring. This guide explores five leading tools (Maxim AI, LangSmith, Arize, RAGAS, and Langfuse) and compares their capabilities in tracing, evaluation, and production monitoring. Maxim stands out as an end-to-end platform for agent observability, evaluation, and simulation, designed to help teams ship AI agents reliably. The right observability tool turns opaque AI systems into transparent, debuggable pipelines.

Table of Contents

- What is RAG Observability and Why It Matters

- Top 5 RAG Observability Tools

- Comparison Table

- How to Choose the Right RAG Observability Tool

- Further Reading

What is RAG Observability and Why It Matters

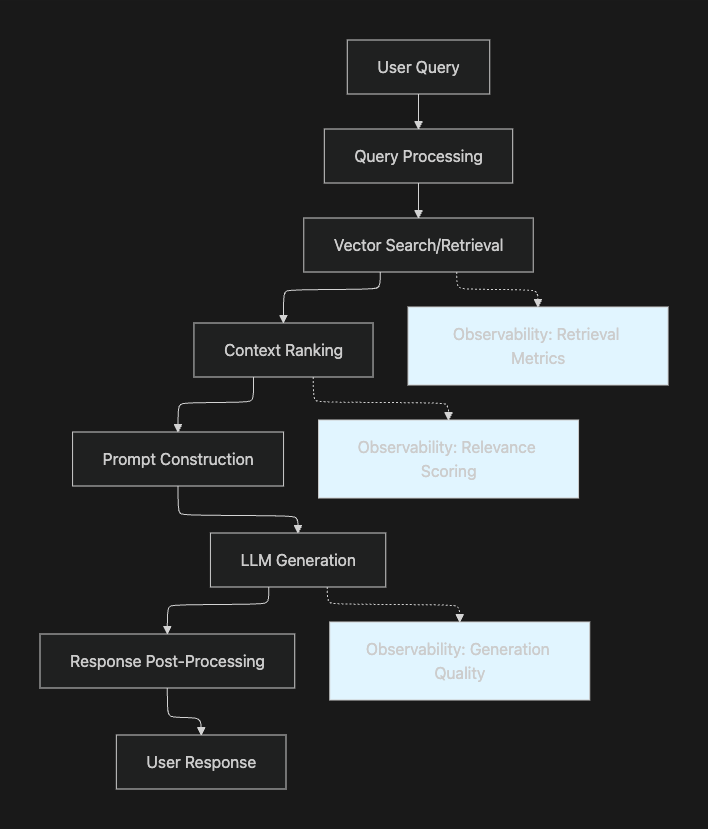

Retrieval-Augmented Generation (RAG) systems combine large language models with external knowledge retrieval, enabling AI applications to provide accurate, context-aware responses. However, RAG architectures span document retrieval, context ranking, prompt engineering, and generation, and each of these stages is a potential failure point that can degrade application quality.

RAG observability provides visibility into every component of your pipeline: retrieval quality, context relevance, LLM generations, tool executions, and multi-turn conversational flows. Without proper observability, debugging RAG applications becomes a guessing game, leaving teams unable to identify whether issues stem from poor retrieval, irrelevant context, or generation failures.
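To make this concrete, here is a minimal sketch of span-based tracing using only the Python standard library. The `Tracer` and `Span` classes are hypothetical and do not correspond to any vendor's SDK, and the retriever and LLM outputs are stubbed. Each pipeline step becomes a timed span linked to its parent, which is the basic structure observability platforms record and visualize.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Span:
    """One timed step in a RAG request (retrieval, reranking, generation, ...)."""
    name: str
    parent: Optional[str]
    start: float
    end: float = 0.0
    attributes: dict = field(default_factory=dict)

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


class Tracer:
    """Hypothetical in-memory tracer; real platforms export spans to a backend."""

    def __init__(self) -> None:
        self.spans: list[Span] = []   # finished spans, in the order they closed
        self._stack: list[str] = []   # names of currently open spans

    @contextmanager
    def span(self, name: str, **attributes) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(name=name, parent=parent, start=time.perf_counter(), attributes=attributes)
        self._stack.append(name)
        try:
            yield s
        finally:
            s.end = time.perf_counter()
            self._stack.pop()
            self.spans.append(s)


tracer = Tracer()
with tracer.span("rag_request", query="What is RAG?"):
    with tracer.span("retrieval", top_k=3) as r:
        r.attributes["doc_ids"] = ["doc-7", "doc-2", "doc-9"]  # stubbed retriever output
    with tracer.span("generation", model="stub-llm") as g:
        g.attributes["answer"] = "RAG grounds LLM answers in retrieved documents."

for s in tracer.spans:
    print(f"{s.name:<12} parent={s.parent} {s.duration_ms:.3f} ms")
```

Because child spans close before their parent, the root `rag_request` span is recorded last; a trace viewer would use the `parent` links to rebuild the tree.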

Key RAG Observability Challenges

RAG evaluation requires assessing both retrieval (context relevance, precision, recall) and generation (faithfulness, answer quality, hallucination detection). As the authors of the RAGAS paper note, evaluating RAG architectures is challenging because several dimensions must be considered: the retrieval system's ability to identify relevant context, the LLM's capacity to exploit those passages faithfully, and the overall generation quality.
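On the retrieval side, precision and recall are typically computed over the top-k retrieved chunks against a labelled set of relevant chunks. A minimal sketch, assuming hypothetical document IDs and a hand-labelled relevance set:

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved chunks that are relevant.

    Divides by the number of results actually returned if fewer than k exist.
    """
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    return hits / len(top_k)


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant chunks that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


retrieved = ["doc-7", "doc-2", "doc-9", "doc-4"]  # ranked retriever output
relevant = {"doc-2", "doc-4", "doc-5"}            # hypothetical ground-truth labels
print(precision_at_k(retrieved, relevant, k=3))   # 1 of the top 3 is relevant -> 0.333...
print(recall_at_k(retrieved, relevant, k=4))      # 2 of 3 relevant docs found -> 0.666...
```

These classic metrics need labelled relevance data; reference-free approaches such as RAGAS aim to reduce that dependency.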

Modern agentic workflows compound these challenges by introducing multi-step reasoning, tool usage, and dynamic decision-making. Observability that covers each of these steps lets teams iterate faster and deploy with confidence.

Top 5 RAG Observability Tools

1. Maxim AI

Platform Overview

Maxim is an end-to-end platform for agent observability, evaluation, and simulation, designed to help teams ship AI agents reliably. Unlike point solutions focused solely on logging or monitoring, Maxim provides a comprehensive AI quality platform that spans experimentation, simulation, evaluation, and production observability, all optimized for cross-functional collaboration between engineering and product teams.

Key Features

Comprehensive Tracing & Debugging

- Real-time distributed tracing across multi-agent systems with span-level granularity

- Automatic capture of retrieval context, tool calls, LLM generations, and multi-turn sessions

- Visual trace inspection with detailed latency breakdowns and cost tracking

- Root cause analysis capabilities to identify bottlenecks in complex agentic workflows
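Latency breakdowns and cost tracking come down to aggregating per-span measurements. The sketch below is a generic illustration, not Maxim's SDK: the span records and the per-1K-token prices are invented for the example, so substitute your provider's actual pricing.

```python
# Hypothetical per-1K-token prices as (input, output); NOT real provider pricing.
PRICES_PER_1K = {"model-a": (0.5, 1.5), "model-b": (0.1, 0.4)}

# Hypothetical span records, as a tracer might export them.
spans = [
    {"name": "retrieval", "latency_ms": 120.0},
    {"name": "rerank", "latency_ms": 45.0},
    {"name": "generation", "latency_ms": 900.0, "model": "model-a",
     "input_tokens": 1200, "output_tokens": 300},
    {"name": "summary", "latency_ms": 200.0, "model": "model-b",
     "input_tokens": 800, "output_tokens": 100},
]


def span_cost(span: dict) -> float:
    """Cost of one LLM span from its token counts; non-LLM spans cost 0."""
    if "model" not in span:
        return 0.0
    in_price, out_price = PRICES_PER_1K[span["model"]]
    return (span["input_tokens"] * in_price + span["output_tokens"] * out_price) / 1000


def breakdown(spans: list[dict]) -> dict[str, dict[str, float]]:
    """Per-step share of total latency plus cost (assumes unique span names)."""
    total_ms = sum(s["latency_ms"] for s in spans)
    return {
        s["name"]: {"latency_pct": 100 * s["latency_ms"] / total_ms, "cost": span_cost(s)}
        for s in spans
    }


report = breakdown(spans)
for name, stats in report.items():
    print(f"{name:<10} {stats['latency_pct']:5.1f}% of latency  cost={stats['cost']:.4f}")
print("total cost:", round(sum(v["cost"] for v in report.values()), 4))
```

In this made-up trace, generation dominates both latency and cost, which is the kind of bottleneck a visual trace view is meant to surface quickly.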

Advanced Evaluation Framework

- Unified evaluation suite supporting deterministic, statistical, and LLM-as-a-judge evaluators

- Pre-built evaluators for RAG-specific metrics: context relevance, faithfulness, answer quality, hallucination detection

- No-code custom evaluator creation through the UI

- Human-in-the-loop evaluation workflows for nuanced quality assessments

- Configurable evaluations at session, trace, or span level for multi-agent systems
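The idea of running evaluators at different granularities can be illustrated with a small, framework-neutral sketch. The evaluator functions, the session/trace/span dictionary layout, and the level names are all hypothetical; an LLM-as-a-judge evaluator would plug into the same interface by calling a model instead of applying a rule.

```python
import re
from typing import Callable

# An evaluator maps one record (a span, a trace, or a whole session) to a score in [0, 1].
Evaluator = Callable[[dict], float]


def cites_sources(record: dict) -> float:
    """Deterministic rule: does the output cite at least one [doc-N] marker?"""
    return 1.0 if re.search(r"\[doc-\d+\]", record.get("output", "")) else 0.0


def is_concise(record: dict, max_words: int = 50) -> float:
    """Deterministic rule: is the output at most max_words words long?"""
    return 1.0 if len(record.get("output", "").split()) <= max_words else 0.0


def records_at(session: dict, level: str) -> list[dict]:
    """Select what gets scored: the session, each trace (turn), or each span."""
    if level == "session":
        # Score the whole conversation as one record by joining the turn outputs.
        return [{"output": " ".join(t["output"] for t in session["traces"])}]
    if level == "trace":
        return session["traces"]
    if level == "span":
        return [span for t in session["traces"] for span in t["spans"]]
    raise ValueError(f"unknown level: {level}")


def evaluate(session: dict, level: str, evaluators: dict[str, Evaluator]) -> list[dict]:
    return [{name: fn(r) for name, fn in evaluators.items()} for r in records_at(session, level)]


session = {
    "traces": [  # one trace per conversational turn
        {"output": "RAG retrieves documents before generating [doc-2].",
         "spans": [{"name": "retrieval", "output": "doc-2 doc-7"},
                   {"name": "generation", "output": "RAG retrieves documents before generating [doc-2]."}]},
        {"output": "Yes, it can reduce hallucinations.",
         "spans": [{"name": "generation", "output": "Yes, it can reduce hallucinations."}]},
    ]
}
evaluators = {"cites_sources": cites_sources, "concise": is_concise}
for level in ("session", "trace", "span"):
    print(level, evaluate(session, level, evaluators))
```

The same rule can pass at the session level yet fail on individual turns, which is why choosing the evaluation granularity matters for multi-turn and multi-agent systems.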

AI-Powered Simulation

- Agent simulation capabilities to test RAG pipelines across hundreds of scenarios

- Synthetic data generation for comprehensive test coverage

- User persona simulation to validate agent behavior across diverse use cases

- Conversation-level evaluation to assess task completion and identify failure points
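Persona-driven simulation is essentially a loop: a simulated user with a goal talks to the agent for a few turns, and the transcript is then scored for task completion. This hedged sketch uses a stubbed agent, scripted personas, and a keyword-based completion check, all hypothetical; in practice the user simulator and the completion judge are usually LLM-driven.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    name: str
    messages: list[str]  # scripted user turns (an LLM would usually generate these)
    goal_keyword: str    # lowercase phrase; task counts as complete if the agent says it


def stub_agent(message: str) -> str:
    """Stand-in for a RAG agent: answers refund questions, deflects everything else."""
    if "refund" in message.lower():
        return "Refunds are processed within 5 business days."
    return "Sorry, I can only help with billing questions."


def simulate(persona: Persona, agent, max_turns: int = 3) -> dict:
    """Run one scripted conversation and apply a crude task-completion check."""
    transcript = []
    for user_msg in persona.messages[:max_turns]:
        transcript.append(("user", user_msg))
        transcript.append(("agent", agent(user_msg)))
    completed = any(
        persona.goal_keyword in text.lower() for role, text in transcript if role == "agent"
    )
    return {"persona": persona.name, "turns": len(transcript) // 2, "completed": completed}


personas = [
    Persona("impatient customer", ["Where is my refund?"], "business days"),
    Persona("off-topic user", ["Can you book me a flight?", "Any hotels?"], "booked"),
]
for result in (simulate(p, stub_agent) for p in personas):
    print(result)
```

Running many such personas against the same agent quickly shows which user types the agent fails to serve.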

Production Observability

- Real-time monitoring with automated quality checks on production logs

- Custom dashboards for deep insights across agent behavior

- Configurable alerts so teams can catch production issues before they affect many users

- Support for multiple repositories to separate logs by application and environment

Experimentation & Prompt Management

- Advanced playground for prompt engineering and rapid iteration

- Version control and deployment management for prompts

- A/B testing capabilities to compare prompt variations, models, and parameters

- Integration with RAG pipelines and external databases
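A/B testing prompt variants usually means running both versions over the same evaluation set and comparing scores. The sketch below assumes per-example scores already produced by some evaluator (the numbers are invented) and uses a paired bootstrap, a generic statistical technique rather than a description of Maxim's implementation, to gauge whether the difference is more than noise.

```python
import random
from statistics import mean


def paired_bootstrap(scores_a: list[float], scores_b: list[float],
                     n_resamples: int = 2000, seed: int = 0) -> float:
    """Fraction of bootstrap resamples in which variant B beats variant A on average."""
    if len(scores_a) != len(scores_b):
        raise ValueError("scores must be paired per test example")
    rng = random.Random(seed)
    n = len(scores_a)
    wins = 0
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # resample examples with replacement
        if mean(scores_b[i] for i in idx) > mean(scores_a[i] for i in idx):
            wins += 1
    return wins / n_resamples


# Hypothetical evaluator scores for the same 8 test questions.
prompt_v1 = [0.6, 0.7, 0.5, 0.8, 0.6, 0.4, 0.7, 0.5]
prompt_v2 = [0.7, 0.8, 0.6, 0.8, 0.7, 0.5, 0.9, 0.6]

print(f"mean v1={mean(prompt_v1):.3f} v2={mean(prompt_v2):.3f}")
print(f"v2 wins in {paired_bootstrap(prompt_v1, prompt_v2):.1%} of resamples")
```

Pairing by example matters: both prompts see the same questions, so per-question difficulty cancels out of the comparison.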

Data Management

- Seamless dataset curation from production logs

- Multi-modal dataset support including images

- Human review collection and feedback workflows

- Dataset versioning for reproducible experiments

Best For

Maxim excels for teams requiring a full-stack solution that bridges pre-release development and production monitoring. The platform is particularly valuable for:

- AI engineering teams building complex multi-agent systems

- Product teams needing no-code access to evaluation and monitoring capabilities

- Organizations requiring cross-functional collaboration on AI quality

- Companies scaling from experimentation to production with enterprise-grade security

Standout Differentiators:

- End-to-end lifecycle coverage from experimentation to production

- Industry-leading user experience optimized for cross-functional teams

- Flexible evaluation framework configurable at any granularity level

- Native support for multimodal agents and complex agentic workflows

2. LangSmith

Platform Overview

LangSmith is an observability and evaluation platform developed by the LangChain team, designed specifically for LLM applications. According to IBM, it serves as a dedicated platform for monitoring, debugging, and evaluating LLM applications, providing developers with the transparency needed to make applications production-ready.

Key Features

- Native LangChain Integration: Seamless tracing for LangChain applications with minimal setup

- Real-Time Tracing: Capture detailed execution traces including latency, token usage, and cost breakdowns

- Feedback API: Attach user or automated feedback scores to traced runs to guide prompt evaluation and refinement

- Production Monitoring: Dashboards for tracking costs, latency, error rates, and usage patterns

- Evaluation Tools: Built-in capabilities for testing prompt versions and performance across edge cases
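Decorator-based tracing is what makes minimal-setup instrumentation possible: wrapping a function records its inputs, output, latency, and errors as a run, and feedback can later be attached to that run by ID. The sketch below reimplements this pattern in plain Python for illustration only. It is not the LangSmith SDK, which provides its own `traceable` decorator and a client method for logging feedback; consult the LangSmith documentation for the actual signatures. `traced`, `add_feedback`, and `RUNS` are hypothetical names.

```python
import functools
import time
import uuid

RUNS: dict[str, dict] = {}  # hypothetical in-memory run store


def traced(fn):
    """Record each call of fn as a run with inputs, output, latency, and any error."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        run_id = str(uuid.uuid4())
        run = {"name": fn.__name__, "inputs": {"args": args, "kwargs": kwargs},
               "feedback": []}
        start = time.perf_counter()
        try:
            run["output"] = fn(*args, **kwargs)
            return run["output"]
        except Exception as exc:
            run["error"] = repr(exc)
            raise
        finally:
            run["latency_ms"] = (time.perf_counter() - start) * 1000
            RUNS[run_id] = run
            wrapper.last_run_id = run_id  # convenience handle for attaching feedback
    return wrapper


def add_feedback(run_id: str, key: str, score: float) -> None:
    """Attach a user or automated score to a recorded run."""
    RUNS[run_id]["feedback"].append({"key": key, "score": score})


@traced
def answer(question: str) -> str:
    return f"Stub answer to: {question}"  # a real app would call a retriever and an LLM here


answer("What is LangSmith?")
add_feedback(answer.last_run_id, key="user_rating", score=1.0)
print(RUNS[answer.last_run_id])
```

Errors are recorded and then re-raised, so tracing never hides failures from the calling code.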

Best For

LangSmith is ideal for teams heavily invested in the LangChain ecosystem who need quick debugging and monitoring. The platform works best for prototyping RAG pipelines, chatbots, and lightweight agents where rapid iteration matters more than deep policy enforcement.

3. Arize

Platform Overview

Arize provides a unified platform for LLM observability and agent evaluation through its open-source Phoenix framework and its managed AX platform. Built on OpenTelemetry, Phoenix offers vendor-agnostic tracing that works across frameworks and languages, making it flexible for diverse technology stacks.

Key Features

- Open-Source Foundation: Phoenix provides free, open-source tracing and evaluation capabilities

- Multi-Framework Support: Native instrumentation for LlamaIndex, LangChain, DSPy, Haystack, and more

- Comprehensive Evaluation: Automated evaluations using LLM-as-a-judge with support for custom metrics

- Drift Detection: Monitor model drift across training, validation, and production environments

- ML & LLM Unified Platform: Single platform for both traditional ML models and generative AI applications
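Drift monitoring generally compares the distribution of some signal in production against a baseline. One common, generic metric is the population stability index (PSI), sketched below over pre-binned histograms. This illustrates the idea rather than Arize's internal method; the example proportions are invented, and the 0.1 / 0.25 cut-offs are a widely used rule of thumb that should be tuned for your data.

```python
import math


def psi(baseline: list[float], current: list[float], eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions.

    Both inputs are per-bin proportions over the same bins; eps avoids log(0).
    """
    if len(baseline) != len(current):
        raise ValueError("distributions must share the same bins")
    total = 0.0
    for p, q in zip(current, baseline):
        p, q = max(p, eps), max(q, eps)
        total += (p - q) * math.log(p / q)
    return total


# Hypothetical histograms of retrieval similarity scores (5 bins, proportions).
baseline = [0.10, 0.20, 0.40, 0.20, 0.10]
this_week = [0.25, 0.30, 0.25, 0.15, 0.05]

score = psi(baseline, this_week)
status = "stable" if score < 0.1 else "moderate drift" if score < 0.25 else "significant drift"
print(f"PSI={score:.3f} -> {status}")
```

Each bin's term is non-negative, so PSI is zero only when the two histograms match and grows as probability mass shifts between bins.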

Best For

Arize is suitable for teams requiring open-source flexibility or those running hybrid workloads combining traditional ML models with LLM applications. The platform excels in scenarios requiring detailed performance analysis, drift detection, and integration with existing observability infrastructure.

4. RAGAS

Platform Overview

RAGAS (Retrieval Augmented Generation Assessment) is an open-source evaluation framework specifically designed for assessing RAG pipeline performance. According to research published on arXiv, RAGAS enables reference-free evaluation of RAG systems, meaning teams can assess quality without requiring ground-truth annotations for every test case.

Key Features

- Reference-Free Evaluation: Assess RAG quality without extensive ground-truth datasets

- Component-Level Metrics: Separate evaluation of retrieval (context precision, recall) and generation (faithfulness, answer relevancy)

- Synthetic Data Generation: Automatically create test datasets covering various scenarios

- Framework Integration: Works seamlessly with LangChain, LlamaIndex, and major observability platforms

- Metric-Driven Development: Continuous monitoring of essential metrics for data-driven decisions
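To see what reference-free evaluation means in practice, consider faithfulness as defined in the RAGAS paper: the share of statements in the generated answer that can be inferred from the retrieved context, computed without any ground-truth answer. RAGAS uses an LLM both to split the answer into statements and to verify each one. The sketch below swaps in naive sentence splitting and word overlap purely to show the shape of the computation; it is not the `ragas` library's API, and the stopword list and 0.8 overlap threshold are arbitrary assumptions.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "to", "and", "it", "by", "was"}


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def faithfulness(answer: str, contexts: list[str], threshold: float = 0.8) -> float:
    """|supported statements| / |statements|, with crude lexical 'verification'."""
    statements = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not statements:
        return 0.0
    context_vocab = set().union(*(content_words(c) for c in contexts))
    supported = 0
    for stmt in statements:
        words = content_words(stmt)
        # RAGAS would ask an LLM whether the context supports the statement instead.
        if words and len(words & context_vocab) / len(words) >= threshold:
            supported += 1
    return supported / len(statements)


contexts = ["The Eiffel Tower is located in Paris. It was completed in 1889."]
answer = "The Eiffel Tower is in Paris. It was completed in 1889. It is 500 meters tall."
print(faithfulness(answer, contexts))  # 2 of 3 statements are supported
```

The unsupported third statement is exactly the kind of hallucination faithfulness is designed to catch, and no reference answer was needed to flag it.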

Best For

RAGAS is best suited for teams focused specifically on RAG evaluation rather than full observability. It works well as a complementary tool alongside platforms like Langfuse or LangSmith in comprehensive evaluation workflows. The framework is particularly valuable for teams needing rigorous, component-level assessment of RAG pipeline quality.

5. Langfuse

Platform Overview

Langfuse is an open-source LLM engineering platform providing integrated features for tracing, prompt management, and evaluation. Initially developed for complex RAG applications, Langfuse emphasizes ease of integration and comprehensive observability for production systems.

Key Features

- One-Click Integration: Minimal setup required for LlamaIndex and LangChain applications

- Session Tracking: Group the traces from a multi-turn conversation into a single session for monitoring conversational applications

- Prompt Management: Version control and collaboration features for prompt engineering

- Cost & Latency Tracking: Automatic breakdowns of usage, costs, and performance metrics

- RAGAS Integration: Native support for running RAGAS evaluation suite on captured traces

- Deployment Flexibility: Self-hosted or cloud deployment options
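Session tracking works by tagging each trace (typically one per conversational turn) with a shared session identifier and grouping on it. Below is a minimal sketch of that roll-up using hypothetical trace records; it is not Langfuse's SDK or data model. In Langfuse itself you attach a session ID to each trace and the UI handles the grouping.

```python
from collections import defaultdict

# Hypothetical trace records, one per user turn.
traces = [
    {"trace_id": "t1", "session_id": "s-42", "latency_ms": 850, "cost": 0.004},
    {"trace_id": "t2", "session_id": "s-42", "latency_ms": 1200, "cost": 0.006},
    {"trace_id": "t3", "session_id": "s-7", "latency_ms": 400, "cost": 0.002},
]


def summarize_sessions(traces: list[dict]) -> dict[str, dict]:
    """Group traces by session_id and roll up turn count, latency, and cost."""
    grouped: dict[str, list[dict]] = defaultdict(list)
    for t in traces:
        grouped[t["session_id"]].append(t)
    return {
        sid: {
            "turns": len(ts),
            "total_latency_ms": sum(t["latency_ms"] for t in ts),
            "total_cost": round(sum(t["cost"] for t in ts), 6),  # round away float noise
        }
        for sid, ts in grouped.items()
    }


for sid, summary in summarize_sessions(traces).items():
    print(sid, summary)
```

Session-level roll-ups like this make it easy to spot conversations that run long or get expensive, even when each individual turn looks normal.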

Best For

Langfuse excels for teams requiring open-source flexibility with comprehensive tracing and prompt management capabilities. The platform is particularly effective for conversational AI applications requiring session-level tracking and teams wanting to integrate RAGAS evaluations into their observability workflow.

Comparison Table

| Feature | Maxim AI | LangSmith | Arize | RAGAS | Langfuse |
|---|---|---|---|---|---|
| Deployment | Cloud, Self-Hosted | Cloud, Hybrid, Self-Hosted | Cloud, Open-Source | Open-Source | Cloud, Self-Hosted |
| Primary Focus | End-to-End AI Lifecycle | LangChain Ecosystem | ML + LLM Observability | RAG Evaluation | Tracing + Prompt Management |
| Tracing | ✓ Advanced | ✓ Comprehensive | ✓ OpenTelemetry-based | ✗ Evaluation Only | ✓ Comprehensive |
| Evaluation | ✓ Advanced (Human + AI) | ✓ Built-in | ✓ LLM-as-Judge | ✓ Specialized RAG Metrics | ✓ With RAGAS Integration |
| Simulation | ✓ AI-Powered | ✗ | ✗ | ✗ | ✗ |
| Experimentation | ✓ Advanced Playground | ✓ Prompt Testing | ✓ Experiments | ✗ | ✓ Prompt Management |
| Multi-Agent Support | ✓ Native | ✓ Via LangChain | ✓ Via Frameworks | ✗ | ✓ Via Frameworks |
| Custom Dashboards | ✓ No-Code | ✓ Pre-built | ✓ Pre-built | ✗ | ✓ Pre-built |
| Production Monitoring | ✓ Real-Time | ✓ Real-Time | ✓ Continuous | ✗ | ✓ Real-Time |
| Cross-Functional UX | ✓ Product + Engineering | △ Engineering-Focused | △ Engineering-Focused | △ Technical | △ Engineering-Focused |
| Framework Agnostic | ✓ | △ Optimized for LangChain | ✓ | ✓ | △ Optimized for LlamaIndex/LangChain |
| Enterprise Features | ✓ SSO, RBAC, SOC2 | ✓ Self-Hosting Available | ✓ Self-Hosting Available | ✗ | ✓ Self-Hosting Available |

How to Choose the Right RAG Observability Tool

Selecting the optimal observability platform depends on your specific requirements, team composition, and development stage:

Choose Maxim AI if you need:

- Full-Stack Coverage: End-to-end platform spanning experimentation, simulation, evaluation, and production observability

- Cross-Functional Collaboration: No-code access for product teams alongside powerful SDKs for engineering

- Advanced Evaluation: Flexible evaluators configurable at session, trace, or span level for multi-agent systems

- Enterprise Scale: Self-hosted deployment, SOC2 compliance, and comprehensive security features

- Multimodal Agents: Native support for complex agentic workflows with multiple modalities

Further Reading

Internal Resources

- Building High-Quality Datasets for AI Agents

- Debugging RAG Pipelines: Best Practices

- Multimodal AI Agents: Implementation Guide

- Maxim AI Documentation

Take Your RAG Observability to the Next Level

RAG observability is no longer optional; it's essential for shipping reliable AI applications. Whether you're debugging complex multi-agent systems, evaluating retrieval quality, or monitoring production performance, comprehensive visibility into your RAG pipeline is critical to success.

Maxim AI provides the industry's most complete platform for agent observability, evaluation, and simulation. Our end-to-end approach helps teams ship AI agents reliably and more than 5x faster, with powerful capabilities for both engineering and product teams.

Ready to transform your RAG pipeline with comprehensive observability? Schedule a demo to see how Maxim can help your team build, evaluate, and monitor AI agents with confidence. Or sign up today to start improving your AI application quality.