Top 5 Tools for Monitoring LLM Applications in 2025

TL;DR:

LLM monitoring requires real-time visibility into prompts, responses, token usage, latency, costs, and quality across production deployments. Effective monitoring platforms provide distributed tracing, automated evaluations, anomaly detection, and alerting to ensure AI applications remain reliable, cost-efficient, and performant at scale. This guide compares the top five monitoring tools: Maxim AI, Langfuse, Datadog, Helicone, and Weights & Biases.

Table of Contents

- Why LLM Monitoring Matters

- Key Capabilities for LLM Monitoring

- The 5 Best LLM Monitoring Tools

- Platform Comparison

- Choosing the Right Tool

- Conclusion

Why LLM Monitoring Matters

Large language models have transitioned from experimental prototypes to production systems powering critical business applications. According to research on LLM operations, AI applications introduce unique reliability challenges absent from traditional software, including non-deterministic outputs, unpredictable failure modes, silent quality degradation, and rapidly escalating inference costs.

Without a comprehensive monitoring infrastructure, teams operate blind to production issues until customers report problems. AI systems fail silently, generating plausible but incorrect responses. Model outputs gradually drift from intended behavior as usage patterns evolve. Token consumption spirals unchecked, transforming manageable costs into budget-breaking expenses. Integration failures cascade through multi-step workflows, creating compound errors invisible in individual components.

Traditional application performance monitoring cannot adequately address LLM-specific challenges. Standard APM tools track request latency and error rates but lack visibility into prompt quality, response relevance, hallucination frequency, or semantic accuracy. LLM monitoring platforms must capture qualitative dimensions alongside quantitative metrics, evaluating whether responses actually achieve intended outcomes rather than merely completing requests successfully.

Key Capabilities for LLM Monitoring

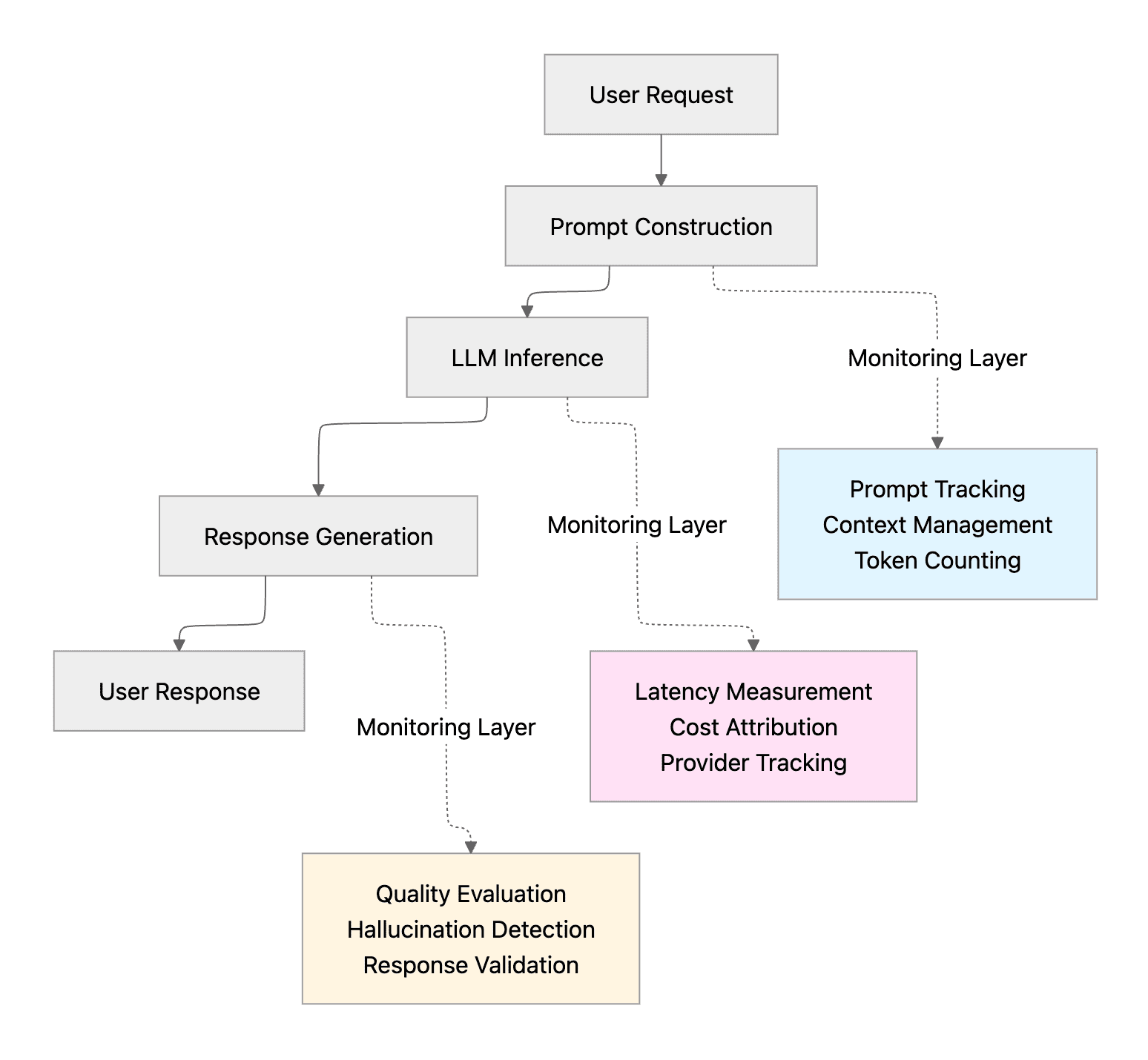

Effective LLM monitoring platforms provide comprehensive coverage across operational health, quality assessment, cost management, and debugging workflows.

Real-Time Observability

Production monitoring requires immediate visibility into system behavior. According to industry analysis on LLM observability, essential capabilities include real-time latency tracking showing time-to-first-token and end-to-end response times, token usage analytics providing cost visibility per request, error monitoring capturing failures across providers and models, and quality assessment evaluating model outputs for relevance and accuracy.

Advanced platforms provide configurable alerting that identifies anomalies before they affect users. Teams receive notifications when latency exceeds thresholds, costs spike unexpectedly, error rates increase, or quality metrics degrade. Integration with communication tools like Slack enables rapid response workflows.
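As a rough illustration of threshold-based alerting, the sketch below checks a window of logged requests against latency, error-rate, and cost limits. The `RequestMetrics` fields, the `check_alerts` function, and the default thresholds are all assumptions made for this example; they do not correspond to any particular platform's API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestMetrics:
    """One logged LLM request (hypothetical field names)."""
    total_latency_s: float
    cost_usd: float
    failed: bool


def check_alerts(window, max_p95_latency_s=5.0, max_error_rate=0.05,
                 max_avg_cost_usd=0.02):
    """Return alert messages for a window of requests (thresholds are illustrative)."""
    if not window:
        return []
    alerts = []

    # Nearest-rank 95th percentile, using integer arithmetic for the ceiling.
    latencies = sorted(m.total_latency_s for m in window)
    rank = (95 * len(latencies) + 99) // 100
    p95 = latencies[rank - 1]
    if p95 > max_p95_latency_s:
        alerts.append(f"p95 latency {p95:.2f}s exceeds {max_p95_latency_s:.2f}s")

    error_rate = sum(m.failed for m in window) / len(window)
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")

    avg_cost = mean(m.cost_usd for m in window)
    if avg_cost > max_avg_cost_usd:
        alerts.append(f"average cost ${avg_cost:.4f} exceeds ${max_avg_cost_usd:.4f}")

    return alerts


# Example window: ten fast, cheap requests, two of which failed.
window = [RequestMetrics(1.0, 0.001, failed=(i < 2)) for i in range(10)]
alerts = check_alerts(window)
```

In a real deployment the returned messages would be forwarded to a notification channel such as Slack rather than kept in a list.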

Distributed Tracing

Multi-step LLM applications require end-to-end visibility across complex workflows. Retrieval-augmented generation systems involve document retrieval, context assembly, prompt construction, model inference, and response formatting. Agent-based systems add tool invocation, external API calls, and multi-turn conversation management.

Distributed tracing captures complete execution paths showing how requests flow through each processing stage. Teams can identify bottlenecks, isolate failure points, and correlate quality issues with specific workflow steps. According to research on production AI systems, comprehensive tracing reduces debugging time by surfacing root causes invisible in aggregate metrics.
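To make the idea of an execution path concrete, here is a minimal in-process tracing sketch. The `Trace` class, its span record layout, and the `bottleneck` helper are hypothetical and written only for this example; production systems typically rely on an established tracing SDK instead.

```python
import time
import uuid
from contextlib import contextmanager


class Trace:
    """Collects nested spans for one request (illustrative, not a real SDK)."""

    def __init__(self, name):
        self.trace_id = uuid.uuid4().hex
        self.name = name
        self.spans = []
        self._stack = []  # ids of the currently open spans

    @contextmanager
    def span(self, name):
        record = {
            "id": len(self.spans),
            "name": name,
            "parent": self._stack[-1] if self._stack else None,
            "start": time.perf_counter(),
            "duration_s": None,
            "error": None,
        }
        self.spans.append(record)
        self._stack.append(record["id"])
        try:
            yield record
        except Exception as exc:
            # Record the failure on the span where it happened, then re-raise.
            record["error"] = repr(exc)
            raise
        finally:
            record["duration_s"] = time.perf_counter() - record["start"]
            self._stack.pop()

    def bottleneck(self):
        """Return the slowest leaf span, i.e. a span with no children."""
        parents = {s["parent"] for s in self.spans}
        leaves = [s for s in self.spans
                  if s["id"] not in parents and s["duration_s"] is not None]
        return max(leaves, key=lambda s: s["duration_s"], default=None)


# Usage: wrap each stage of a retrieval-augmented request in a span.
trace = Trace("rag_request")
with trace.span("rag_pipeline"):
    with trace.span("retrieval"):
        documents = ["doc-1", "doc-2"]  # placeholder for a vector search
    with trace.span("inference"):
        answer = "placeholder answer"   # placeholder for a model call
```

Because each span stores its parent, a failure or slow stage can be tied back to the exact step in the workflow rather than to the request as a whole.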

Automated Quality Evaluation

Manual quality review cannot scale to production volumes. LLM monitoring platforms provide automated evaluation frameworks that run continuous quality checks on production traffic. Evaluators assess multiple quality dimensions, including response relevance to user queries, factual accuracy against retrieved sources, hallucination detection for unsupported claims, and adherence to the instructions in system prompts.

LLM-as-judge evaluation leverages advanced models to assess response quality using natural language criteria. Custom evaluators encode domain-specific quality requirements. Statistical evaluators measure quantitative properties like response length, toxicity scores, or sentiment indicators.
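The statistical and custom evaluator categories can be sketched with two small functions. Both are assumptions for illustration: `length_evaluator` measures a simple quantitative property, and `grounding_evaluator` uses word overlap with retrieved sources as a crude stand-in for hallucination detection. A real system would more likely use an LLM judge or an entailment model for the second check.

```python
import re


def length_evaluator(response, max_words=150):
    """Statistical evaluator: flag responses longer than max_words."""
    words = len(response.split())
    return {"name": "length", "score": words, "passed": words <= max_words}


def grounding_evaluator(response, sources, min_overlap=0.5):
    """Custom evaluator: flag sentences whose words mostly do not appear in sources.

    This is a lexical proxy only; it cannot detect paraphrased or subtle errors.
    """
    source_tokens = set(re.findall(r"[a-z0-9]+", " ".join(sources).lower()))
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]
    unsupported = []
    for sentence in sentences:
        tokens = set(re.findall(r"[a-z0-9]+", sentence.lower()))
        if not tokens:
            continue
        overlap = len(tokens & source_tokens) / len(tokens)
        if overlap < min_overlap:
            unsupported.append(sentence)
    score = 1.0 - len(unsupported) / len(sentences) if sentences else 1.0
    return {"name": "grounding", "score": score,
            "passed": not unsupported, "unsupported": unsupported}


sources = ["The refund window is 30 days from delivery."]
response = "The refund window is 30 days. Shipping is always free worldwide."
results = [length_evaluator(response), grounding_evaluator(response, sources)]
```

Here the second sentence of the response has almost no overlap with the source, so the grounding check reports it as unsupported while the length check passes.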

Cost Attribution and Optimization

Token-based pricing creates variable costs scaling with usage volume and prompt complexity. According to industry benchmarks, unoptimized LLM applications can spend 10x more than necessary through inefficient prompting, excessive context windows, or unnecessary model calls.

Monitoring platforms track costs per request, per user, per feature, and per time period. Teams identify expensive workflows, compare provider pricing, and measure optimization impact. Budget alerts prevent cost overruns before reaching spending limits.
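Per-dimension cost attribution reduces to multiplying token counts by a price table and grouping the result. The sketch below assumes a hypothetical price table (`PRICES_PER_MILLION`), made-up model names, and a simple request log format; actual provider prices and log schemas will differ.

```python
from collections import defaultdict

# Hypothetical prices in USD per one million tokens; not real provider pricing.
PRICES_PER_MILLION = {
    "model-small": {"input": 0.50, "output": 1.50},
    "model-large": {"input": 5.00, "output": 15.00},
}


def request_cost(model, input_tokens, output_tokens):
    """Cost of a single request in USD."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"]
            + output_tokens * price["output"]) / 1_000_000


def attribute_costs(requests, dimension):
    """Sum request costs grouped by a log field such as 'user' or 'feature'."""
    totals = defaultdict(float)
    for r in requests:
        totals[r[dimension]] += request_cost(
            r["model"], r["input_tokens"], r["output_tokens"])
    return dict(totals)


def over_budget(totals, budget_usd):
    """Return the keys whose total cost exceeds the budget."""
    return sorted(key for key, cost in totals.items() if cost > budget_usd)


requests = [
    {"user": "a", "feature": "search", "model": "model-large",
     "input_tokens": 1000, "output_tokens": 200},
    {"user": "b", "feature": "chat", "model": "model-small",
     "input_tokens": 2000, "output_tokens": 1000},
    {"user": "a", "feature": "chat", "model": "model-small",
     "input_tokens": 4000, "output_tokens": 2000},
]
by_feature = attribute_costs(requests, "feature")
by_user = attribute_costs(requests, "user")
flagged_users = over_budget(by_user, budget_usd=0.01)
```

Grouping the same log by different fields is what lets a team see, for example, that one feature accounts for most of the spend even though no single user does.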

The 5 Best LLM Monitoring Tools

Maxim AI

Platform Overview

Maxim AI is an end-to-end AI simulation, evaluation, and observability platform, designed to help teams ship their AI agents reliably and more than 5x faster. Unlike point solutions focused solely on monitoring, Maxim delivers comprehensive lifecycle management spanning experimentation, simulation, evaluation, and production observability.

Key Features

Production Observability Suite

Maxim's production observability provides real-time monitoring with distributed tracing for LLM applications. Teams can track, debug, and resolve live quality issues with real-time alerts minimizing user impact. The platform supports multiple repositories for multiple applications, enabling organized logging and analysis using distributed tracing across complex agent workflows.

In-production quality measurement uses automated evaluations based on custom rules. Teams configure quality checks running continuously on production traffic, surfacing issues immediately rather than waiting for batch analysis. Integration with alerting systems enables automated incident response workflows.

Multi-Level Evaluation Framework

Maxim enables evaluation at span-level (individual LLM calls or tool invocations), trace-level (complete request-response cycles), and session-level (multi-turn conversations). This granularity allows teams to pinpoint exact failure points across complex workflows. You can determine if poor performance stems from retrieval quality, prompt construction, model reasoning, or response formatting.
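The relationship between the three levels can be pictured with a small data sketch. The nested dictionary layout, the scores, and the aggregation rules (a trace is scored by its weakest span, a session by the mean of its traces) are assumptions chosen for this example and are not Maxim's data model or API.

```python
from statistics import mean

# Hypothetical session: two traces (turns), each with scored spans.
session = {
    "session_id": "s1",
    "traces": [
        {"trace_id": "t1", "spans": [
            {"name": "retrieval", "score": 0.9},
            {"name": "generation", "score": 0.8},
        ]},
        {"trace_id": "t2", "spans": [
            {"name": "retrieval", "score": 0.3},
            {"name": "generation", "score": 0.7},
        ]},
    ],
}


def trace_score(trace):
    """Trace-level score: the weakest span limits the whole request."""
    return min(span["score"] for span in trace["spans"])


def session_score(session):
    """Session-level score: average quality across all turns."""
    return mean(trace_score(t) for t in session["traces"])


def weakest_span(session):
    """Locate the lowest-scoring span as (trace_id, span_name, score)."""
    candidates = [
        (t["trace_id"], s["name"], s["score"])
        for t in session["traces"] for s in t["spans"]
    ]
    return min(candidates, key=lambda c: c[2])


overall = session_score(session)
failure_point = weakest_span(session)
```

In this example the session-level score alone would only show mediocre quality, while the span-level view points at retrieval in the second turn as the likely cause.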

The unified evaluation framework combines machine evaluators with human review workflows. Access various off-the-shelf evaluators through the evaluator store or create custom evaluators for specific application needs. Measure prompt and workflow quality using AI, programmatic, or statistical evaluators.

Comprehensive Data Management

The Data Engine provides seamless data management for AI applications. Import datasets including images with a few clicks. Continuously curate and evolve datasets from production data, enriching them using in-house or Maxim-managed data labeling. Create data splits for targeted evaluations and experiments.

Production logs become valuable training assets through systematic curation workflows. Human-in-the-loop annotation enables continuous alignment to user preferences. Dataset versioning ensures reproducible evaluation across development iterations.

Advanced Experimentation

Playground++ enables rapid prompt iteration with version control. Organize and version prompts directly from the UI for iterative improvement. Deploy prompts with different deployment variables and experimentation strategies without code changes. Compare output quality, cost, and latency across various combinations of prompts, models, and parameters.

AI-Powered Simulation

AI-powered simulation tests agents across hundreds of scenarios and user personas before production deployment. Simulate customer interactions across real-world scenarios, monitoring agent responses at every step. Evaluate agents at the conversational level, analyzing trajectory choices and task completion. Re-run simulations from any step to reproduce issues and identify root causes.

Cross-Functional Collaboration

Maxim emphasizes collaboration between AI engineers, product managers, and QA teams through custom dashboards providing deep insights across agent behavior. Teams can create these insights with just a few clicks, cutting across custom dimensions to optimize agentic systems. Role-based access controls enable enterprise deployment with appropriate governance.

Best For

Maxim AI is ideal for teams requiring end-to-end lifecycle management for LLM applications, comprehensive monitoring with automated quality evaluation, cross-functional collaboration between engineering and product teams, and enterprise deployment with SOC 2 compliance features.

Langfuse

Platform Overview

Langfuse is an open-source LLM engineering platform offering call tracking, tracing, prompt management, and evaluation capabilities. The platform provides basic observability features for teams building LLM applications.

Key Features

Langfuse captures inputs, outputs, tool usage, retries, latencies, and costs through distributed tracing. The platform supports prompt versioning and deployment management. Evaluation capabilities include LLM-as-judge scoring, human annotations, and custom scoring functions. The open-source nature enables self-hosting for teams requiring data sovereignty.

Best For

Langfuse works well for teams prioritizing open-source solutions, requiring basic tracing and evaluation capabilities, and preferring self-hosted deployment options. The platform serves teams with limited observability budgets seeking foundational monitoring infrastructure.

Datadog

Platform Overview

Datadog LLM Observability extends Datadog's application performance monitoring platform to cover AI workloads. The platform integrates LLM tracing with existing APM, infrastructure monitoring, and log management.

Key Features

Datadog's SDK auto-instruments OpenAI, LangChain, AWS Bedrock, and Anthropic calls, capturing latency, token usage, and errors without code changes. Built-in evaluations detect hallucinations and failed responses. Security scanners flag prompt injection attempts and prevent data leaks. LLM Experiments enable testing prompt changes against production data before deployment. Traces appear alongside existing APM data, correlating LLM latency with database queries or infrastructure issues.

Best For

Datadog suits enterprises already using Datadog for infrastructure monitoring who want unified observability across traditional and AI workloads. The platform works well for organizations with existing Datadog investments seeking to extend monitoring coverage to LLM applications.

Helicone

Platform Overview

Helicone operates as a gateway platform sitting between applications and LLM providers, adding observability without code changes. The platform focuses on practical production monitoring with minimal integration friction.

Key Features

Helicone requires only a URL change or an added header to begin logging LLM requests. The open-source platform supports 100+ models across OpenAI, Anthropic, Google, Groq, and others through an OpenAI-compatible API. Features include unified billing across providers with zero markup, automatic fallbacks when providers fail, and response caching that cuts costs on repeated queries. The dashboard surfaces costs, latency, and usage patterns without configuration.
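The gateway pattern behind response caching and automatic fallbacks can be sketched generically. The `GatewaySketch` class, its provider callables, and its log format are hypothetical and are not Helicone's implementation or API; the sketch only shows why a proxy in the request path can add these features without changes to application logic.

```python
import hashlib
import json


class GatewaySketch:
    """Toy gateway: caches identical requests and falls back across providers."""

    def __init__(self, providers):
        self.providers = providers  # ordered list of (name, callable)
        self.cache = {}
        self.log = []

    def complete(self, model, messages):
        # Identical model + messages produce the same cache key.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if key in self.cache:
            self.log.append({"provider": "cache", "status": "cache_hit"})
            return self.cache[key]

        last_error = None
        for name, call in self.providers:
            try:
                response = call(model, messages)
            except Exception as exc:
                # Log the failure and try the next provider in order.
                last_error = exc
                self.log.append({"provider": name, "status": "error"})
                continue
            self.cache[key] = response
            self.log.append({"provider": name, "status": "ok"})
            return response
        raise RuntimeError("all providers failed") from last_error


def flaky_provider(model, messages):
    raise TimeoutError("simulated provider outage")


def backup_provider(model, messages):
    return "stubbed completion"


gateway = GatewaySketch([("primary", flaky_provider), ("backup", backup_provider)])
messages = [{"role": "user", "content": "Hello"}]
first = gateway.complete("example-model", messages)
second = gateway.complete("example-model", messages)  # served from the cache
```

Every request passes through one place, so the same log that drives fallbacks and caching also provides the raw data for cost and latency dashboards.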

Best For

Helicone works best for teams needing rapid observability deployment with minimal engineering effort. The gateway approach suits organizations wanting provider-agnostic monitoring without instrumenting application code directly.

Weights & Biases

Platform Overview

Weights & Biases (Weave) extends its machine learning platform to support LLM experimentation, tracing, and evaluation. The platform provides observability integrated with experiment tracking workflows.

Key Features

Weave supports LLM and agent observability with comprehensive tracing capabilities. The platform integrates evaluation metrics, prompt experimentation, and collaboration features through Prompt Canvas UI. Support spans various LLM providers and frameworks. The tool excels for teams already using Weights & Biases for ML workflows seeking unified tracking across traditional models and LLMs.

Best For

Weights & Biases suits ML teams extending into LLM applications who want unified experiment tracking. The platform works well for organizations with existing W&B deployments requiring observability alongside model training workflows.

Platform Comparison

| Feature | Maxim AI | Langfuse | Datadog | Helicone | Weights & Biases |
|---|---|---|---|---|---|
| Full Lifecycle Support | ✅ Complete | ⚠️ Monitoring Focus | ⚠️ Monitoring Focus | ⚠️ Gateway Only | ⚠️ Experiment Focus |
| Real-Time Monitoring | ✅ Production Suite | ✅ Basic | ✅ Enterprise APM | ✅ Gateway Logs | ⚠️ Limited |
| Automated Evaluations | ✅ Extensive | ⚠️ Limited | ✅ Built-In | ❌ No | ⚠️ Limited |
| Distributed Tracing | ✅ Multi-Level | ✅ Basic | ✅ APM Integration | ⚠️ Request-Level | ✅ Experiment Tracking |
| Cost Attribution | ✅ Detailed | ✅ Basic | ✅ Token Tracking | ✅ Unified Billing | ⚠️ Limited |
| Custom Dashboards | ✅ No-Code | ⚠️ Limited | ✅ Datadog UI | ⚠️ Basic | ⚠️ Limited |
| Simulation Capabilities | ✅ AI-Powered | ❌ No | ❌ No | ❌ No | ❌ No |
| Data Curation | ✅ Data Engine | ⚠️ Basic | ❌ No | ❌ No | ⚠️ Limited |
| Cross-Functional Tools | ✅ Full Support | ⚠️ Engineer-Focused | ⚠️ Engineer-Focused | ⚠️ Engineer-Focused | ⚠️ ML-Focused |
| Deployment Options | ✅ Cloud/On-Prem/Air-Gapped | ✅ Self-Hosted | ✅ Cloud | ✅ Cloud/Self-Hosted | ✅ Cloud |

Choosing the Right Tool

Selecting the appropriate LLM monitoring platform depends on your development stage, existing infrastructure, and team requirements.

For Production Deployments: Live applications require comprehensive monitoring with real-time alerting. Maxim's observability suite provides production-grade monitoring with distributed tracing, automated quality checks, and alerting integrated across the full development lifecycle. Datadog suits teams with existing Datadog infrastructure seeking unified monitoring.

For Rapid Deployment: Teams needing immediate monitoring without extensive integration work benefit from gateway approaches. Helicone enables observability in minutes through URL changes. However, gateway solutions provide request-level visibility without deeper application instrumentation.

For End-to-End Workflows: Comprehensive AI development requires monitoring alongside experimentation, evaluation, and simulation. Maxim's platform supports the complete lifecycle from prompt experimentation through AI-powered simulation to production monitoring, eliminating tool sprawl across development stages.

Budget Considerations: Open-source platforms like Langfuse minimize licensing costs but require self-hosting infrastructure and maintenance. Managed platforms provide operational simplicity with usage-based pricing. Gateway solutions like Helicone offer zero-markup unified billing across providers.

Team Structure: Engineering-only teams can work with any platform. Cross-functional teams including product managers benefit from Maxim's custom dashboards and evaluator configuration enabling non-technical stakeholders to define quality criteria and track business metrics without engineering dependencies.

Compliance Requirements: Enterprise deployments with regulatory requirements need SOC 2 compliance, role-based access controls, and audit trail capabilities. Maxim provides enterprise deployment options with comprehensive security features. Self-hosted solutions like Langfuse enable complete data sovereignty.

Conclusion

LLM monitoring has evolved from optional tooling to essential infrastructure for production AI applications. The five platforms reviewed provide different approaches to observability, evaluation, and operational management.

Among the five platforms reviewed, Maxim AI is the only one that delivers end-to-end support spanning experimentation, simulation, evaluation, and production monitoring. The platform's capabilities in automated evaluation, multi-level tracing, data curation, and cross-functional collaboration reduce the tool sprawl that slows AI development.

Langfuse offers open-source flexibility for teams prioritizing self-hosting. Datadog extends enterprise APM infrastructure to AI workloads. Helicone provides rapid gateway-based deployment. Weights & Biases integrates LLM observability with ML experiment tracking.

The optimal choice depends on whether you need comprehensive lifecycle management or point solutions for specific monitoring needs. Teams building production-grade AI applications benefit from Maxim's unified approach, accelerating development through integrated workflows from experimentation to production monitoring while enabling seamless collaboration between engineering and product teams.

Explore Maxim AI's platform to see how end-to-end LLM monitoring can transform your development workflow, or schedule a demo to discuss your specific AI monitoring requirements with our team.

Further Reading

Industry Research

- LLM Operations and Production Challenges

- Evaluating Large Language Model Applications

- Production AI Systems: Monitoring and Reliability

- Quality Assessment for LLM Applications
